My coworkers and I created this free and open-source library for transferring entire ClickUp folders, tickets, and comments to AWS S3. We wrote it to back up our whole ClickUp system when we switched from ClickUp to Jira. The script is multithreaded: it calls the ClickUp APIs from as many threads as possible and migrates all data into an S3 data lake, so that users or teams can query it with Athena using regular SQL if necessary.

Project description

ClickUp to Data Lake (S3): migration and backup scripts for all data


Authors

- Soumil Nitin Shah

- April Love Ituhat

- Divyansh Patel

NOTE:

- This is a migration script that moves all data out of ClickUp. We plan to add features and methods for backing up just a given workspace or folder, but for now it backs up everything. Feel free to add functionality and submit a merge request so other people can use it. The code is more than 500 lines; I will clean it up and add more functionality in my free time.

clickup_to_s3_migration

Installation

    pip install clickup_to_s3_migration

Usage

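    import sys

    from ClickUptoS3Migration import ClickUptoS3Migration


    def main():
        # Replace each placeholder with your own credentials and bucket name.
        helper = ClickUptoS3Migration(
            aws_access_key_id="<ACCESS KEY>",
            aws_secret_access_key="<SECRET KEY GOES HERE>",
            region_name="<AWS REGION>",
            bucket="<BUCKET NAME>",
            clickup_api_token="<CLICKUP API KEY>",
        )
        # Start the full migration of all ClickUp data to the bucket.
        response = helper.run()
        return response


    if __name__ == "__main__":
        main()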

It's really that easy. The script iterates over all workspaces: for each workspace it fetches all spaces, for each space all folders, for each folder all lists, for each list all tickets, and for each ticket all comments.
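The usage example passes credentials as string literals. As a minimal sketch, the same keyword arguments could be read from environment variables so that secrets stay out of source control. The environment variable names and the `load_settings` helper below are assumptions made for illustration; they are not part of the library.

```python
import os

# Hypothetical environment variable names (left) mapped to the constructor
# keyword arguments shown in the usage example (right).
ENV_TO_KWARG = {
    "AWS_ACCESS_KEY_ID": "aws_access_key_id",
    "AWS_SECRET_ACCESS_KEY": "aws_secret_access_key",
    "AWS_REGION": "region_name",
    "CLICKUP_S3_BUCKET": "bucket",
    "CLICKUP_API_TOKEN": "clickup_api_token",
}


def load_settings(env=None):
    """Build the constructor keyword arguments from environment variables."""
    env = os.environ if env is None else env
    # Fail early, naming every variable that is unset or empty.
    missing = sorted(name for name in ENV_TO_KWARG if not env.get(name))
    if missing:
        raise RuntimeError("Missing environment variables: " + ", ".join(missing))
    return {kwarg: env[name] for name, kwarg in ENV_TO_KWARG.items()}
```

With this helper, the call in `main()` would become `ClickUptoS3Migration(**load_settings())`.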

Explanation of how the code works and its flow

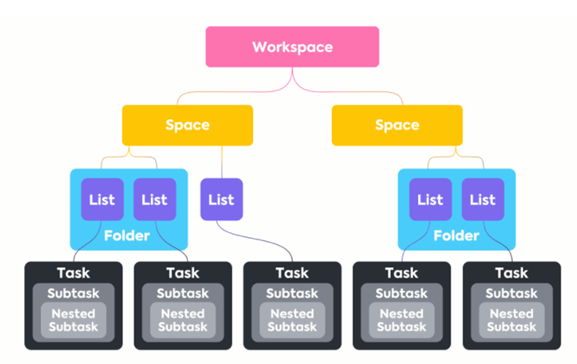

- A company can have several workspaces. The code calls the API to get all workspaces. Each workspace can have several spaces, each space several folders, each folder many lists, each list many tickets, and each ticket several comments.
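The hierarchy described above can be walked with one small recursive generator. This is a sketch under stated assumptions, not the library's actual implementation: the endpoint paths and response keys follow the public ClickUp API v2 as we understand it (where workspaces are called "teams"), and `http_get` and `walk` are hypothetical names. Folderless lists, pagination of task listings, rate limiting, and retries are left out.

```python
import json
import urllib.request

API_ROOT = "https://api.clickup.com/api/v2"

# One entry per level: (path template, key that holds the children in the response).
LEVELS = [
    ("/team", "teams"),  # workspaces
    ("/team/{id}/space", "spaces"),
    ("/space/{id}/folder", "folders"),
    ("/folder/{id}/list", "lists"),
    ("/list/{id}/task", "tasks"),  # tickets
    ("/task/{id}/comment", "comments"),
]


def http_get(path, token):
    """GET one ClickUp endpoint and decode its JSON body."""
    request = urllib.request.Request(API_ROOT + path, headers={"Authorization": token})
    with urllib.request.urlopen(request) as response:
        return json.load(response)


def walk(fetch, parent_id=None, level=0):
    """Yield (kind, record) for every object below parent_id, depth first."""
    if level == len(LEVELS):
        return
    path, key = LEVELS[level]
    for record in fetch(path.format(id=parent_id)).get(key, []):
        yield key, record
        # Descend into the children of this record before moving on.
        yield from walk(fetch, record["id"], level + 1)
```

Passing the fetch function in as an argument keeps the traversal testable without network access; against the real API it could be called as `walk(lambda path: http_get(path, token))`.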

Figure: the structure in which ClickUp stores data, and the order in which we need to iterate to get all of it.

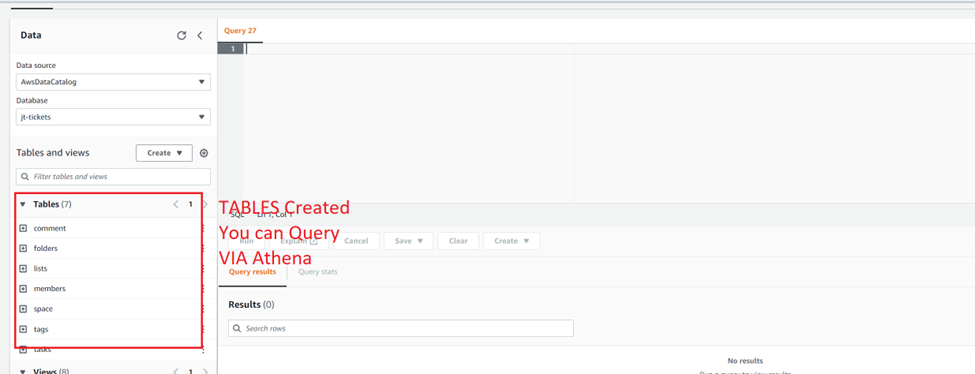
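Because each record can be written independently, the upload step lends itself to a thread pool. The sketch below shows one way to do this with `concurrent.futures`; it is an illustration, not the library's code. The `object_key` layout, the `upload_all` helper, and the `put_object(key, body)` callable are all assumptions.

```python
import json
from concurrent.futures import ThreadPoolExecutor, as_completed


def object_key(kind, record_id):
    """Hypothetical S3 key layout: one JSON object per record, grouped by kind."""
    return f"clickup/{kind}/{record_id}.json"


def upload_all(records, put_object, max_workers=16):
    """Upload (kind, record) pairs concurrently and return the sorted keys written."""

    def upload_one(kind, record):
        key = object_key(kind, record["id"])
        put_object(key, json.dumps(record).encode("utf-8"))
        return key

    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        futures = [pool.submit(upload_one, kind, record) for kind, record in records]
        # result() re-raises any exception that occurred in a worker thread.
        return sorted(future.result() for future in as_completed(futures))
```

With boto3, `put_object` could be `lambda key, body: s3.put_object(Bucket=bucket, Key=key, Body=body)`, where `s3 = boto3.client("s3")`. Grouping objects by kind under separate prefixes is a design choice that tends to give a crawler one table per kind.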

End Goal

- Running the script to completion might take 1 or 2 days, depending on how much data you have.

- Once it finishes, you can create a Glue crawler and then query the data with Athena (SQL).
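The crawler and query steps can also be scripted. The following is a rough sketch using the boto3 Glue and Athena client calls `create_crawler`, `start_crawler`, and `start_query_execution`. The helper names, the crawler naming scheme, and every argument value are assumptions; the clients are passed in so the functions stay easy to test.

```python
def start_crawl(glue, s3_path, database, role_arn):
    """Create a Glue crawler over the exported data and start it."""
    crawler_name = f"{database}-crawler"  # hypothetical naming scheme
    glue.create_crawler(
        Name=crawler_name,
        Role=role_arn,
        DatabaseName=database,
        Targets={"S3Targets": [{"Path": s3_path}]},
    )
    glue.start_crawler(Name=crawler_name)
    return crawler_name


def run_query(athena, database, sql, output_location):
    """Submit one SQL query to Athena and return its execution id."""
    response = athena.start_query_execution(
        QueryString=sql,
        QueryExecutionContext={"Database": database},
        ResultConfiguration={"OutputLocation": output_location},
    )
    return response["QueryExecutionId"]
```

In practice the clients would come from `boto3.client("glue")` and `boto3.client("athena")`, and the crawler has to finish before its tables can be queried. The table names Athena sees depend on how the data is laid out in the bucket, so a query such as `SELECT * FROM tasks LIMIT 10` only works if the crawler produced a `tasks` table.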

License

This project is licensed under the MIT License - see the LICENSE.md file for details


File details

Details for the file clickup_to_s3_migration-1.1.1-py3-none-any.whl.

File metadata

- Download URL: clickup_to_s3_migration-1.1.1-py3-none-any.whl

- Upload date:

- Size: 18.8 kB

- Tags: Python 3

- Uploaded using Trusted Publishing? No

- Uploaded via: twine/4.0.1 CPython/3.10.7

File hashes

| Algorithm | Hash digest |
|---|---|
| SHA256 | 03293d40ccae969f85345209cfcd524d636e8637a9c94c740cfe002dbeb68cbf |
| MD5 | fe6112a9f39ed90ac4d2a1093bb8ebb7 |
| BLAKE2b-256 | 21d584e760439894453d51080c10a75399ebecdd5636b724cf77723b78866c4d |