Class to load exported DeepLabCut networks and perform pose estimation on single frames (from a camera feed)


DeepLabCut-live! SDK

This package contains a DeepLabCut inference pipeline for real-time applications that has minimal (software) dependencies. Thus, it is as easy to install as possible (in particular, on atypical systems like NVIDIA Jetson boards).

If you've used DeepLabCut-Live with TensorFlow models and want to try the PyTorch version, take a look at Switching from TensorFlow to PyTorch.

Performance of TensorFlow models: If you would like to see estimates on how your model should perform given different video sizes, neural network type, and hardware, please see: deeplabcut.github.io/DLC-inferencespeed-benchmark/ . We're working on getting these benchmarks for PyTorch architectures as well.

If you have different hardware, please consider submitting your results too!

What this SDK provides: This package provides a `DLCLive` class which enables online pose estimation to provide feedback. This object loads and prepares a DeepLabCut network for inference, and will return the predicted pose for single images.

To perform processing on poses (such as predicting the future pose of an animal given its current pose, or triggering external hardware, e.g., sending TTL pulses to a laser for optogenetic stimulation), this object takes in a `Processor` object. Processor objects must contain two methods: `process` and `save`.

- The `process` method takes in a pose, performs some processing, and returns the processed pose.

- The `save` method saves any valuable data created by or used by the processor.

For more details and examples, see documentation here.
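As an illustration of the two-method interface described above, here is a minimal sketch of a processor. The class name `ThresholdProcessor`, its constructor arguments, and the event log are hypothetical and not part of the package; the sketch also assumes a pose is a sequence of `(x, y, likelihood)` rows, one per keypoint. In real use you would presumably subclass the `Processor` class that the package exports rather than write a standalone class.

```python
import json
import time


class ThresholdProcessor:
    """Hypothetical processor: logs an event when one keypoint is confidently detected."""

    def __init__(self, keypoint_index=0, likelihood_threshold=0.9):
        self.keypoint_index = keypoint_index
        self.likelihood_threshold = likelihood_threshold
        self.events = []  # one entry per frame that met the trigger condition

    def process(self, pose, **kwargs):
        # Assumption: pose holds one (x, y, likelihood) row per keypoint.
        x, y, likelihood = pose[self.keypoint_index]
        if likelihood >= self.likelihood_threshold:
            # A real setup might send a TTL pulse here; this sketch only logs.
            self.events.append({"time": time.time(), "x": float(x), "y": float(y)})
        return pose  # the pose is returned unchanged

    def save(self, file=None):
        # Write the logged events to a JSON file if a file name is given.
        if file is not None:
            with open(file, "w") as f:
                json.dump(self.events, f)
        return len(self.events)


proc = ThresholdProcessor(keypoint_index=1, likelihood_threshold=0.8)
example_pose = [(10.0, 20.0, 0.95), (30.0, 40.0, 0.85), (50.0, 60.0, 0.10)]
proc.process(example_pose)
print(len(proc.events))  # 1
```

Keeping the hardware call inside `process` and the bookkeeping inside `save` mirrors the split described above: one method runs per frame, the other runs once at the end.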

🔥🔥🔥🔥🔥 Note: on its own, this object does not record video or capture images from a camera. This must be done separately, e.g., with our DeepLabCut-live GUI. 🔥🔥🔥🔥🔥

News!

- WIP 2025: DeepLabCut-Live is implemented for models trained with the PyTorch engine!

- March 2022: DeepLabCut-Live! 1.0.2 supports poetry installation (`poetry install deeplabcut-live`), thanks to PR #60.

- March 2021: DeepLabCut-Live! version 1.0 is released, with support for TensorFlow 1 and TensorFlow 2!

- Feb 2021: DeepLabCut-Live! was featured in Nature Methods: "Real-time behavioral analysis"

- Jan 2021: full eLife paper is published: "Real-time, low-latency closed-loop feedback using markerless posture tracking"

- Dec 2020: we talked to RTS Suisse Radio about DLC-Live!: "Capture animal movements in real time"

Installation

DeepLabCut-live can be installed from PyPI with PyTorch or TensorFlow directly:

# With PyTorch (recommended)

pip install deeplabcut-live[pytorch]

# Or with TensorFlow

pip install deeplabcut-live[tf]

# Or using uv

uv pip install deeplabcut-live[pytorch] # or [tf]

Please see our instruction manual for more detailed information on how to install on a Windows or Linux machine or on an NVIDIA Jetson Development Board. Note that this code works with PyTorch, TensorFlow 1, or TensorFlow 2 models, but you must run a model with the same engine and version you exported it with (e.g., if you export a PyTorch model, install PyTorch; if you export with TF 1.13, use TF 1.13 with DLC-Live; if you export with TF 2.3, use TF 2.3 with DLC-Live).

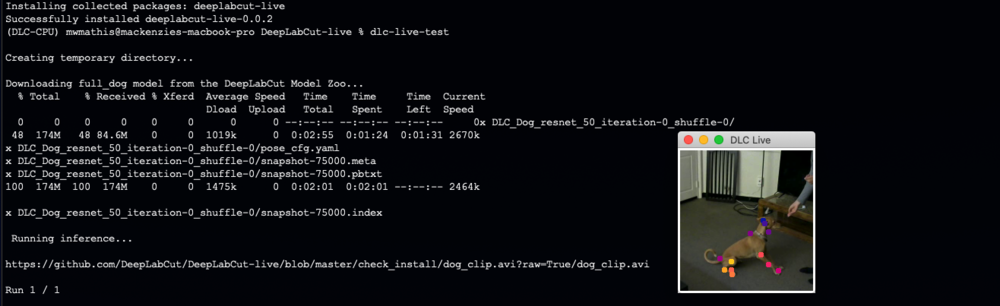

Note, you can test your installation by running:

dlc-live-test

If installed properly, this script will i) create a temporary folder, ii) download the full_dog model from the DeepLabCut Model Zoo, iii) download a short video clip of a dog, iv) run inference while displaying keypoints, and v) remove the temporary folder.

PyTorch and TensorFlow can be installed as extras with deeplabcut-live, though be careful with the versions you install!

# Install deeplabcut-live and PyTorch

`pip install deeplabcut-live[pytorch]`

# Install deeplabcut-live and TensorFlow

`pip install deeplabcut-live[tf]`

Quick Start: instructions for use

- Initialize `Processor` (if desired)

- Initialize the `DLCLive` object

- Perform pose estimation!

from dlclive import DLCLive, Processor

dlc_proc = Processor()

dlc_live = DLCLive(<path to exported model directory>, processor=dlc_proc)

dlc_live.init_inference(<your image>)

dlc_live.get_pose(<your image>)

DLCLive parameters:

- `path` = string; full path to the exported DLC model directory
- `model_type` = string; the type of model to use for inference. Types include:
  - `pytorch` = the base PyTorch DeepLabCut model
  - `base` = the base TensorFlow DeepLabCut model
  - `tensorrt` = apply tensor-rt optimizations to model
  - `tflite` = use tensorflow lite inference (in progress...)
- `cropping` = list of int, optional; cropping parameters in pixel number: [x1, x2, y1, y2]
- `dynamic` = tuple, optional; defines parameters for dynamic cropping of images
  - `index 0` = use dynamic cropping, bool
  - `index 1` = detection threshold, float
  - `index 2` = margin (in pixels) around identified points, int
- `resize` = float, optional; factor by which to resize image (resize=0.5 downsizes both width and height of image by half). Can be used to downsize large images for faster inference
- `processor` = dlc pose processor object, optional
- `display` = bool, optional; display processed image with DeepLabCut points? Can be used to troubleshoot cropping and resizing parameters, but is very slow
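To make the `dynamic` tuple more concrete, the sketch below shows one way a crop window could be derived from the previous pose: keep keypoints whose likelihood exceeds the detection threshold, then pad their bounding box by the margin and clip it to the frame. The function name `dynamic_crop_box` is hypothetical, and the exact rule the package uses internally is an assumption here; only the meaning of the three tuple entries and the `[x1, x2, y1, y2]` ordering come from the parameter list above.

```python
def dynamic_crop_box(pose, frame_width, frame_height, threshold, margin):
    """Return [x1, x2, y1, y2] around confidently detected keypoints, or None.

    pose is assumed to be a sequence of (x, y, likelihood) rows.
    """
    detected = [(x, y) for x, y, likelihood in pose if likelihood > threshold]
    if not detected:
        return None  # nothing detected: the caller would use the full frame

    xs = [x for x, _ in detected]
    ys = [y for _, y in detected]

    # Pad the bounding box by the margin and clip it to the frame borders.
    x1 = max(0, int(min(xs)) - margin)
    x2 = min(frame_width, int(max(xs)) + margin)
    y1 = max(0, int(min(ys)) - margin)
    y2 = min(frame_height, int(max(ys)) + margin)
    return [x1, x2, y1, y2]


pose = [(100.4, 50.2, 0.99), (140.9, 80.7, 0.95), (300.0, 300.0, 0.05)]
box = dynamic_crop_box(pose, frame_width=640, frame_height=480, threshold=0.5, margin=20)
print(box)  # [80, 160, 30, 100]
```

The third keypoint is ignored because its likelihood is below the threshold, so the window stays tight around the two confident detections.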

DLCLive inputs:

- `<path to exported model>` =
  - For TensorFlow models: path to the folder that has the `.pb` files that you acquire after running `deeplabcut.export_model`
  - For PyTorch models: path to the `.pt` file that is generated after running `deeplabcut.export_model`
- `<your image>` = a numpy array for each frame

DLCLive - PyTorch Specific Guide

This guide is for users who trained a model with the PyTorch engine with DeepLabCut 3.0.

Once you've trained your model in DeepLabCut and you are happy with its performance, you can export the model to be used for live inference with DLCLive!

Switching from TensorFlow to PyTorch

This section is for users who have already used DeepLabCut-Live with TensorFlow models (through DeepLabCut 1.X or 2.X) and want to switch to using the PyTorch Engine. Some quick notes:

- You may need to adapt your code slightly when creating the DLCLive instance.

- Processors that were created for TensorFlow models will function the same way with PyTorch models. As multi-animal models can be used with PyTorch, the shape of the `pose` array given to the processor may be `(num_individuals, num_keypoints, 3)`. Just call `DLCLive(..., single_animal=True)` and your existing processors will work.
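If a processor should accept both pose shapes mentioned above, one option is to normalize the input before processing. The helper below is a hypothetical sketch that works on nested Python lists (for example, the result of calling `.tolist()` on an array); `as_individuals` is not part of the package. With NumPy arrays, checking `pose.ndim` would be the more direct test.

```python
def as_individuals(pose):
    """Return a list of per-individual poses, each a list of (x, y, likelihood) rows."""
    first = pose[0]
    # Single-animal pose: each row holds three numbers.
    # Multi-animal pose: each entry is itself a list of such rows.
    if isinstance(first[0], (int, float)):
        return [pose]
    return list(pose)


single = [[10.0, 20.0, 0.9], [30.0, 40.0, 0.8]]   # (num_keypoints, 3)
multi = [single, single]                          # (num_individuals, num_keypoints, 3)

print(len(as_individuals(single)))  # 1
print(len(as_individuals(multi)))   # 2
```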

Benchmarking/Analyzing your exported DeepLabCut models

DeepLabCut-live offers some analysis tools that allow users to perform the following operations on videos, from python or from the command line:

Test inference speed across a range of image sizes

Downsizing images can be done by specifying the resize or pixels parameter. Using the pixels parameter will resize images to the desired number of pixels, without changing the aspect ratio. Results will be saved (along with system info) to a pickle file if you specify an output directory.
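The relationship between the two parameters can be worked out by hand: scaling width and height by the same factor changes the pixel count by the factor squared, so the factor that reaches a target pixel count is the square root of the ratio of target to original pixels. The sketch below illustrates that arithmetic; `resize_factor_for_pixels` is a hypothetical helper, and whether the package computes the factor in exactly this way is an assumption.

```python
import math


def resize_factor_for_pixels(width, height, pixels):
    """Resize factor that brings a width x height image to about `pixels` pixels."""
    return math.sqrt(pixels / (width * height))


# A 1280 x 720 frame has 921600 pixels; a quarter of that is 230400.
factor = resize_factor_for_pixels(1280, 720, 230400)
new_width, new_height = round(1280 * factor), round(720 * factor)
print(factor, new_width, new_height)  # 0.5 640 360
```

Because both dimensions are multiplied by the same factor, the aspect ratio of the resized frame is unchanged.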

Inside a python shell or script, you can run:

dlclive.benchmark_videos(

"/path/to/exported/model",

["/path/to/video1", "/path/to/video2"],

output="/path/to/output",

resize=[1.0, 0.75, 0.5],

)

From the command line, you can run:

dlc-live-benchmark /path/to/exported/model /path/to/video1 /path/to/video2 -o /path/to/output -r 1.0 0.75 0.5

Display keypoints to visually inspect the accuracy of exported models on different image sizes (note, this is slow and only for testing purposes):

Inside a python shell or script, you can run:

dlclive.benchmark_videos(

"/path/to/exported/model",

"/path/to/video",

resize=0.5,

display=True,

pcutoff=0.5,

display_radius=4,

cmap='bmy'

)

From the command line, you can run:

dlc-live-benchmark /path/to/exported/model /path/to/video -r 0.5 --display --pcutoff 0.5 --display-radius 4 --cmap bmy

Analyze and create a labeled video using the exported model and desired resize parameters.

This option functions similarly to deeplabcut.benchmark_videos and deeplabcut.create_labeled_video (note, this is slow and only for testing purposes).

Inside a python shell or script, you can run:

dlclive.benchmark_videos(

"/path/to/exported/model",

"/path/to/video",

resize=[1.0, 0.75, 0.5],

pcutoff=0.5,

display_radius=4,

cmap='bmy',

save_poses=True,

save_video=True,

)

From the command line, you can run:

dlc-live-benchmark /path/to/exported/model /path/to/video -r 0.5 --pcutoff 0.5 --display-radius 4 --cmap bmy --save-poses --save-video

License:

This project is licensed under the GNU AGPLv3. Note that the software is provided "as is", without warranty of any kind, express or implied. If you use the code or data, we ask that you please cite us! This software is available for licensing via the EPFL Technology Transfer Office (https://tto.epfl.ch/, info.tto@epfl.ch).

Community Support, Developers, & Help:

This is an actively developed package, and we welcome community development and involvement.

- If you want to contribute to the code, please read our guide here, which is provided at the main repository of DeepLabCut.

- We are a community partner on the Scientific Community Image Forum, a discussion forum for scientific image software. Please post help and support questions on the forum with the tag DeepLabCut, and check out their mission statement.

- If you encounter a previously unreported bug/code issue, please post here (we encourage you to search issues first): github.com/DeepLabCut/DeepLabCut-live/issues

- For quick discussions here:

Reference:

If you utilize our tool, please cite Kane et al, eLife 2020. The preprint is available here: https://www.biorxiv.org/content/10.1101/2020.08.04.236422v2

@Article{Kane2020dlclive,

author = {Kane, Gary and Lopes, Gonçalo and Sanders, Jonny and Mathis, Alexander and Mathis, Mackenzie},

title = {Real-time, low-latency closed-loop feedback using markerless posture tracking},

journal = {eLife},

year = {2020},

}

