Exhaustive Feature Selection


ExhauFS

Exhaustive feature selection for classification and survival analysis.

Table of Contents

Introduction

Installation

Running ExhauFS

Functions and classes

Tutorials

etc

Introduction

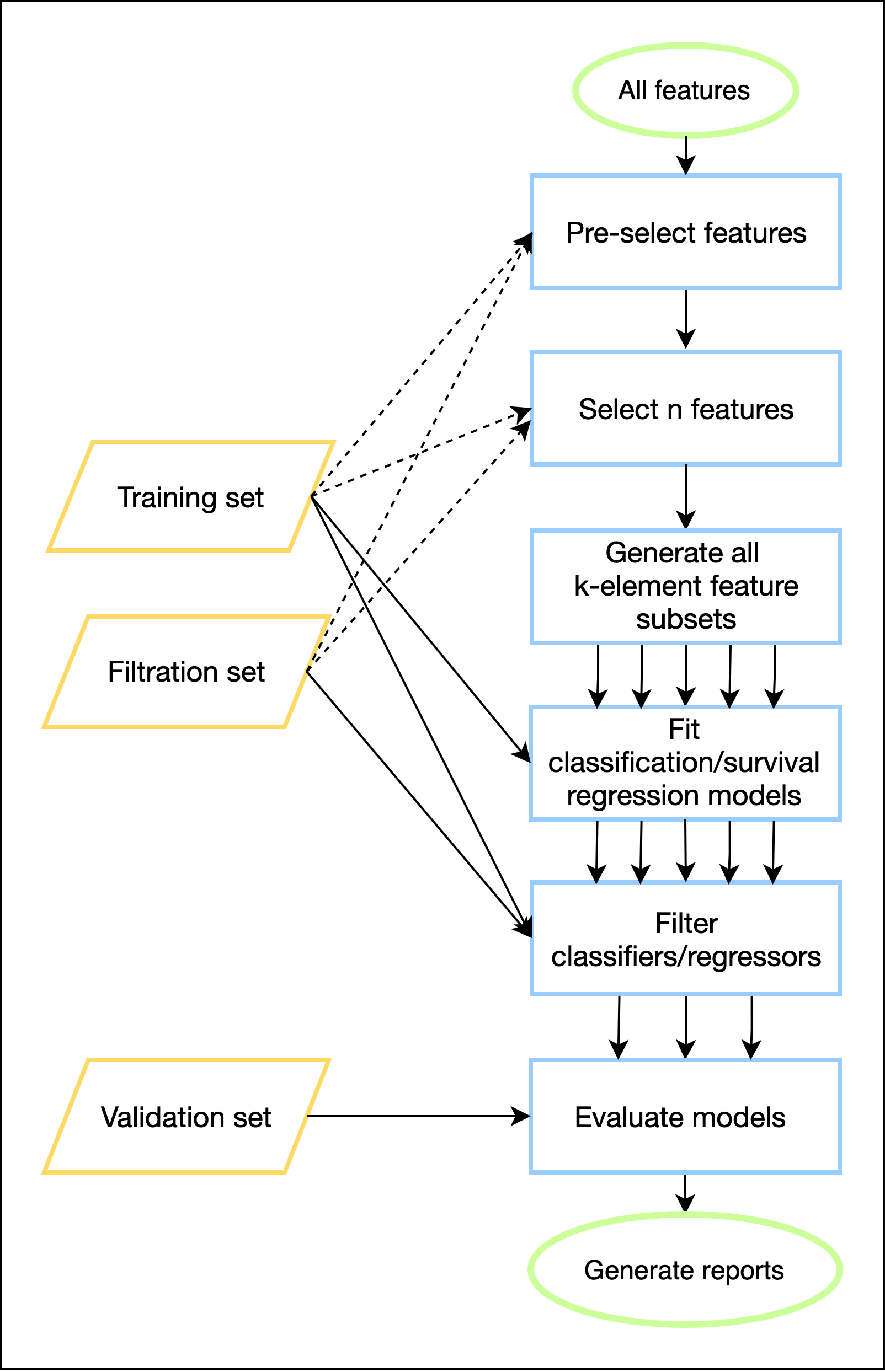

The main idea behind ExhauFS is an exhaustive search over feature subsets to construct the most powerful classification and survival regression models. Since the computational complexity of this approach grows exponentially with respect to the combination length, we first narrow down the feature list to make the search practically feasible. Briefly, the pipeline is implemented as follows:

- Feature pre-selection: filter features by specified method.

- Feature selection: select "best" n features for exhaustive search.

- Exhaustive search: iterate through all possible k-element feature subsets and fit classification/regression models.

- Evaluation: evaluate each model and summarize all feature subsets that passed the thresholds.
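The growth of the search space can be illustrated with a short Python sketch. The function name count_subsets and the placeholder feature names are illustrative only and are not part of ExhauFS.

```python
from itertools import combinations


def count_subsets(n, k):
    """Number of k-element subsets of n features, i.e. C(n, k)."""
    result = 1
    for i in range(1, k + 1):
        # After step i, result equals C(n - k + i, i), so the division is exact.
        result = result * (n - k + i) // i
    return result


# Placeholder names standing in for the n features kept after selection.
features = ["f1", "f2", "f3", "f4"]

# Every 2-element subset an exhaustive search would have to fit a model on.
pairs = list(combinations(features, 2))

print(len(pairs), count_subsets(len(features), 2))
# The number of subsets grows quickly with n for a fixed k.
print(count_subsets(20, 5), count_subsets(100, 5))
```

This is why the pipeline first reduces the feature list to n candidates before iterating over k-element subsets.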

Input data can consist of different batches (datasets), and each dataset should be labeled with one of the following types:

- Training set: samples from training datasets are used for tuning classification/regression models. At least one such dataset is required; if multiple are given, their union is used.

- Filtration set: all tuned models are first evaluated on training and filtration sets. If the specified accuracy thresholds are reached, the model is then evaluated on validation (test) sets. The use of filtration sets is optional.

- Validation (test) set: the performance of models that passed the filtration thresholds is then evaluated on validation sets. At least one such dataset is required; if multiple are given, the model is evaluated on each test set independently.

Installation

Prerequisites:

Make sure you have installed all of the following prerequisites on your development machine:

- python3.6+

- pip3

ExhauFS installation:

pip3 install exhaufs

Running ExhauFS

Step 1: data preparation

Before running the tool, you should prepare three csv tables containing the actual data, its annotation and the n / k grid. For both classification and survival analysis, the data table should contain numerical values associated with samples (rows) and features (columns):

Example

| | Feature 1 | Feature 2 |
|---|---|---|
| Sample 1 | 17.17 | 365.1 |
| Sample 2 | 56.99 | 123.9 |
| ... | | |
| Sample 98 | 22.22 | 123.4 |
| Sample 99 | 23.23 | 567.8 |
| ... | | |
| Sample 511 | 10.82 | 665.8 |
| Sample 512 | 11.11 | 200.2 |

The annotation table format is different for classification and survival analysis. For classification, it should contain a binary indicator of the sample class (e.g., 1 for recurrent tumor and 0 for non-recurrent), the dataset (batch) label and the dataset type (Training/Filtration/Validation).

It is important that Class = 1 represents "positives" and Class = 0 represents "negatives"; otherwise accuracy scores may be calculated incorrectly.

Note that annotation should be present for each sample listed in the data table in the same order:

Example

| | Class | Dataset | Dataset type |
|---|---|---|---|
| Sample 1 | 1 | GSE3494 | Training |
| Sample 2 | 0 | GSE3494 | Training |
| ... | | | |
| Sample 98 | 0 | GSE12093 | Filtration |
| Sample 99 | 0 | GSE12093 | Filtration |
| ... | | | |
| Sample 511 | 1 | GSE1456 | Validation |
| Sample 512 | 1 | GSE1456 | Validation |
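The requirements on the classification annotation table can be checked before running the tool. The following is a minimal sketch, not part of ExhauFS: the helper name check_annotation is hypothetical, and it assumes both csv files are comma-separated with sample names in an unnamed first column.

```python
import csv
import io

ALLOWED_TYPES = {"Training", "Filtration", "Validation"}


def check_annotation(data_text, annotation_text):
    """Return a list of problems found in a classification annotation table."""
    data = list(csv.reader(io.StringIO(data_text)))
    ann = list(csv.reader(io.StringIO(annotation_text)))
    problems = []

    # Annotation must list the same samples in the same order as the data.
    if [row[0] for row in data[1:]] != [row[0] for row in ann[1:]]:
        problems.append("samples differ or are in a different order")

    header = ann[0]
    class_col = header.index("Class")
    type_col = header.index("Dataset type")
    types_seen = set()
    for row in ann[1:]:
        if row[class_col] not in ("0", "1"):
            problems.append("non-binary class for " + row[0])
        if row[type_col] not in ALLOWED_TYPES:
            problems.append("unknown dataset type for " + row[0])
        types_seen.add(row[type_col])

    # At least one training and one validation dataset are required.
    for required in ("Training", "Validation"):
        if required not in types_seen:
            problems.append("no " + required + " samples")
    return problems


data_text = (
    ",Feature 1,Feature 2\n"
    "Sample 1,17.17,365.1\n"
    "Sample 2,56.99,123.9\n"
    "Sample 511,10.82,665.8\n"
)
annotation_text = (
    ",Class,Dataset,Dataset type\n"
    "Sample 1,1,GSE3494,Training\n"
    "Sample 2,0,GSE3494,Training\n"
    "Sample 511,1,GSE1456,Validation\n"
)
print(check_annotation(data_text, annotation_text))
```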

For survival analysis, annotation table should contain binary event indicator and time to event:

Example

| | Event | Time to event | Dataset | Dataset type |
|---|---|---|---|---|
| Sample 1 | 1 | 100.1 | GSE3494 | Training |
| Sample 2 | 0 | 500.2 | GSE3494 | Training |
| ... | | | | |
| Sample 98 | 0 | 623.9 | GSE12093 | Filtration |
| Sample 99 | 0 | 717.1 | GSE12093 | Filtration |
| ... | | | | |
| Sample 511 | 1 | 40.5 | GSE1456 | Validation |
| Sample 512 | 1 | 66.7 | GSE1456 | Validation |

Table with the n / k grid for exhaustive feature selection:

n is the number of selected features, and k is the length of each feature subset.

If you are not sure which values of n and k to use, see Step 3: defining a n, k grid.

Example

| n | k |

|---|---|

| 100 | 1 |

| 100 | 2 |

| ... | ... |

| 20 | 5 |

| 20 | 10 |

| 20 | 15 |
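A grid like the one above can also be written programmatically. The sketch below assumes a plain comma-separated file with the header n,k; the helper name write_n_k_grid is hypothetical, and whether ExhauFS expects any additional columns is not stated here.

```python
import csv
import io


def write_n_k_grid(pairs, handle):
    """Write (n, k) pairs as csv rows under an n,k header."""
    writer = csv.writer(handle, lineterminator="\n")
    writer.writerow(["n", "k"])
    for n, k in pairs:
        # A k-element subset cannot be drawn from fewer than k features.
        if k > n:
            raise ValueError("k must not exceed n: n=%d, k=%d" % (n, k))
        writer.writerow([n, k])


# The explicit rows of the example grid above.
grid = [(100, 1), (100, 2), (20, 5), (20, 10), (20, 15)]

buffer = io.StringIO()
write_n_k_grid(grid, buffer)
print(buffer.getvalue())
```

To write to disk instead, pass a file opened with open(path, "w", newline="") in place of the in-memory buffer.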

Step 2: creating configuration file

The configuration file is a json file containing all customizable parameters for the model (classification and survival analysis).

Available parameters

NOTE: all paths to files / directories can be either relative to the configuration file directory or absolute paths.

- data_path: Path to csv table of the data.

- annotation_path: Path to csv table of the data annotation.

- n_k_path: Path to a n/k grid file.

- output_dir: Path to directory for output files. If it doesn't exist, it will be created.

- feature_pre_selector: Name of feature pre-selection function from feature pre-selectors section.

- feature_pre_selector_kwargs: Object/Dictionary of keyword arguments for feature pre-selector function.

- feature_selector: Name of feature selection function from feature selectors section.

- feature_selector_kwargs: Object/Dictionary of keyword arguments for feature selector function. Boolean use_filtration indicates whether to use Filtration dataset besides Training dataset for the selector function.

- preprocessor: Name of class for data preprocessing from sklearn.preprocessing.

- preprocessor_kwargs: Object/Dictionary of keyword arguments for preprocessor class initialization. If you are using sklearn model, use kwargs parameters from the documentation of the model.

- model: Name of class for classification / survival analysis from Classifiers / Regressors section.

- model_kwargs: Object/Dictionary of keyword arguments for model initialization. If you are using sklearn model, use kwargs parameters from the documentation of the model.

- model_CV_ranges: Object/Dictionary defining model parameters which should be cross-validated. Keys are parameter names, values are lists for grid search.

- model_CV_folds: Number of folds for K-Folds cross-validation.

- scoring_functions: List with names for scoring functions (from Accuracy scores section) which will be calculated for each model. If you need to pass parameters to the function (e.g. year in dynamic auc score), you can use object {"name": function name, "kwargs": parameters object}.

- main_scoring_function: Key from scoring_functions dict defining the "main" scoring function which will be optimized during cross-validation and will be used for model filtering.

- main_scoring_threshold: A number defining threshold for model filtering: models with score below this threshold on training/filtration sets will not be further evaluated.

- n_processes: Number of processes / threads to run on.

- random_state: Random seed (set to an arbitrary integer for reproducibility).

- verbose: If true, print running time for each pair of n, k.
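As an illustration, a classification config using the parameters above could be generated from Python as follows. This is a sketch under assumptions: the file names, the model name SVC with its kwargs, the KBinsDiscretizer kwargs and all numeric values are illustrative choices rather than defaults of the tool, and the empty pre-selector kwargs are a guess. The parameter names, selector names and score names are taken from this document.

```python
import json

config = {
    # Paths are relative to the directory of the configuration file.
    "data_path": "data.csv",
    "annotation_path": "annotation.csv",
    "n_k_path": "n_k.csv",
    "output_dir": "results",
    "feature_pre_selector": "f_test",
    "feature_pre_selector_kwargs": {},  # assumption: no kwargs needed
    "feature_selector": "t_test",
    "feature_selector_kwargs": {"use_filtration": False},
    # Class from sklearn.preprocessing; kwargs follow its documentation.
    "preprocessor": "KBinsDiscretizer",
    "preprocessor_kwargs": {"n_bins": 2, "encode": "ordinal"},
    # Assumption: an sklearn classifier name; check the Classifiers section.
    "model": "SVC",
    "model_kwargs": {"kernel": "linear"},
    "model_CV_ranges": {"C": [0.1, 1, 10]},
    "model_CV_folds": 5,
    "scoring_functions": ["TPR", "TNR", "min_TPR_TNR"],
    "main_scoring_function": "min_TPR_TNR",
    "main_scoring_threshold": 0.65,
    "n_processes": 4,
    "random_state": 17,
    "verbose": True,
}

# json.dumps converts Python True/False to JSON true/false.
config_text = json.dumps(config, indent=4)
print(config_text)
```

Saving config_text to a file such as config.json gives a file that can be passed to the commands below with -c.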

Step 3: defining a n, k grid

To estimate running time of the exhaustive pipeline and define adequate n / k values you can run:

exhaufs estimate regressors|classifiers -c <config_file> --max_k <max_k> --max_estimated_time <max_estimated_time>

where

- config_file is the path to json configuration file.

- max_k is the maximum length of each features subset.

- max_estimated_time is the time constraint (in hours) for a pipeline running time on one pair of (n, k).

The above script calculates the maximum possible value of n for each k = 1...max_k such that the pipeline running time for each pair (n, k) is less than max_estimated_time.
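The idea behind such an estimate can be sketched in a few lines of Python. This is not the implementation used by exhaufs estimate: it assumes a constant, already measured time per fitted model (seconds_per_model) and ignores parallelism, and both function names are hypothetical.

```python
def count_subsets(n, k):
    """Number of k-element subsets of n features, i.e. C(n, k)."""
    result = 1
    for i in range(1, k + 1):
        result = result * (n - k + i) // i
    return result


def max_n_for_k(k, seconds_per_model, max_hours):
    """Largest n such that fitting all C(n, k) models fits into the budget.

    Returns None if even a single model exceeds the time budget.
    """
    budget = max_hours * 3600.0
    if seconds_per_model > budget:
        return None
    n = k  # C(k, k) = 1 model always fits at this point
    while count_subsets(n + 1, k) * seconds_per_model <= budget:
        n += 1
    return n


# Hypothetical timing: one second per model, one hour per (n, k) pair.
for k in range(1, 4):
    print(k, max_n_for_k(k, seconds_per_model=1.0, max_hours=1.0))
```

Because C(n, k) grows quickly with k, the admissible n drops sharply as k increases, which matches the shape of the grids shown in the tutorials.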

Step 4: running the exhaustive pipeline

When the input data, configuration file and n / k grid are ready, the exhaustive pipeline can be executed as follows:

- Classifiers:

exhaufs build classifiers -c <config_file>

- Regressors:

exhaufs build regressors -c <config_file>

This will generate multiple files in the specified output folder:

- models.csv: this file contains all models (classifiers or regressors) which passed the filtration together with their quality metrics.

- summary_n_k.csv: for each pair of n, k three numbers are given: number of models which passed the filtration, number of models which showed reliable performance (i.e., passed quality thresholds) on the validation set and their ratio (in %). Low percentage of validation-reliable models together with high number of filtration-reliable models is usually associated with overfitting.

- summary_features.csv: for each pair (n, k), for each feature, percentage of models carrying this feature is listed (only models which passed the filtration are considered).
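The overfitting check described for summary_n_k.csv can be automated. The sketch below assumes the column names shown in the tutorial tables later in this document (num_training_reliable, num_validation_reliable); the rows, the 50% cut-off and the helper name low_validation_pairs are made up for illustration.

```python
import csv
import io


def low_validation_pairs(handle, min_percentage):
    """Return (n, k, percentage) for pairs whose validation ratio is low."""
    flagged = []
    for row in csv.DictReader(handle):
        passed = int(row["num_training_reliable"])
        reliable = int(row["num_validation_reliable"])
        if passed == 0:
            continue  # no models passed the filtration for this pair
        percentage = 100.0 * reliable / passed
        if percentage < min_percentage:
            flagged.append((int(row["n"]), int(row["k"]), round(percentage, 2)))
    return flagged


# Made-up rows standing in for a real summary_n_k.csv file.
summary_text = (
    "n,k,num_training_reliable,num_validation_reliable\n"
    "19,2,137,41\n"
    "20,12,1000,960\n"
)
flagged = low_validation_pairs(io.StringIO(summary_text), min_percentage=50.0)
print(flagged)
```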

Step 5: generating report for a single model

To get a detailed report on a specific model (i.e., a specific set of features):

- Create a configuration file (use ./examples/make_(classifier | regressor)_summary/config.json as a template) and set the following key parameters:

  - data_path: path to the dataset used for the search of classifiers or regressors

  - annotation_path: path to the annotation file

  - output_dir: path to the output directory for the detailed report

  - features_subset: set of features belonging to the classifier or regressor of interest

  - saving_format: either "tiff" or "pdf", the format of the saved plots

- For a classifier, run exhaufs summary classifiers -c <config_file>

- For a regressor, run exhaufs summary regressors -c <config_file>

- Check the detailed report in output_dir.

If you also have time-to-event data for a classification problem, you can make Kaplan-Meier plots based on the classifier predictions.

To do so, run exhaufs km_plot -c <config_file> and check the output_dir directory.

You can also specify KM_x_label and KM_y_label in the config to change plot axis names.

Functions and classes

Feature pre-selectors

- from_file

  Pre-select features from a given file

  name: from_file

  kwargs: { "sep": " " }

- f_test

  Pre-select features without difference between different datasets and types

  name: f_test

Feature selectors

- from_file

  Select first n features from a given file

  name: from_file

  kwargs: { "sep": " " }

- t_test

  Select n features with the lowest p-values according to t-test

  name: t_test

  kwargs: { "use_filtration": false } (use_filtration: whether to use filtration dataset with training dataset)

- spearman_correlation

  Select n features with the highest correlation with target label

  name: spearman_correlation

  kwargs: { "use_filtration": false } (use_filtration: whether to use filtration dataset with training dataset)

- median

  Select n features with the highest median value

  name: median

  kwargs: { "use_filtration": false } (use_filtration: whether to use filtration dataset with training dataset)
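To make the selector idea concrete, here is a minimal pure-Python sketch of the median rule (keep the n features with the highest median value). It is not the ExhauFS implementation: the function name select_by_median is hypothetical, the use_filtration option is ignored, and ties are broken by the original feature order. "Feature 3" is a made-up column added for illustration.

```python
from statistics import median


def select_by_median(table, n):
    """Return the names of the n features with the highest median value.

    table maps each feature name to its list of values across samples.
    """
    # sorted() is stable, so features with equal medians keep their order.
    ranked = sorted(table, key=lambda name: median(table[name]), reverse=True)
    return ranked[:n]


table = {
    "Feature 1": [17.17, 56.99, 22.22],
    "Feature 2": [365.1, 123.9, 123.4],
    "Feature 3": [1.0, 2.0, 3.0],  # hypothetical extra feature
}
print(select_by_median(table, 2))
```

The other selectors follow the same pattern with a different per-feature score, for example a t-test p-value or a Spearman correlation with the target label.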

Regression specific selectors:

- cox_concordance

  Select n features with the highest concordance index on one-factor Cox regression.

  name: cox_concordance

  kwargs: { "use_filtration": false } (use_filtration: whether to use filtration dataset with training dataset)

- cox_dynamic_auc

  Select n features with the highest time-dependent auc on one-factor Cox regression.

  name: cox_dynamic_auc

  kwargs: { "year": 3, "use_filtration": false } (year: time at which to calculate auc; use_filtration: whether to use filtration dataset with training dataset)

- cox_hazard_ratio

  Select n features with the highest hazard ratio on one-factor Cox regression.

  name: cox_hazard_ratio

  kwargs: { "use_filtration": false } (use_filtration: whether to use filtration dataset with training dataset)

- cox_likelihood

  Select n features with the highest log-likelihood on one-factor Cox regression.

  name: cox_likelihood

  kwargs: { "use_filtration": false } (use_filtration: whether to use filtration dataset with training dataset)

Classifiers

As the model_kwargs value, use parameters from the documentation of the chosen model.

Accuracy scores

- TPR

- FPR

- TNR

- min_TPR_TNR
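The scores listed above follow the standard confusion-matrix definitions, with Class = 1 treated as positive. The sketch below is an illustration and not the ExhauFS code; the function name classification_scores is hypothetical.

```python
def classification_scores(y_true, y_pred):
    """Compute TPR, FPR, TNR and min_TPR_TNR for binary labels (1 = positive)."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    tn = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 0)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    if tp + fn == 0 or tn + fp == 0:
        raise ValueError("both classes must be present in y_true")

    tpr = tp / (tp + fn)  # sensitivity: share of positives found
    tnr = tn / (tn + fp)  # specificity: share of negatives found
    fpr = fp / (fp + tn)  # equals 1 - TNR
    return {"TPR": tpr, "FPR": fpr, "TNR": tnr, "min_TPR_TNR": min(tpr, tnr)}


y_true = [1, 1, 1, 0, 0]
y_pred = [1, 1, 0, 0, 1]
scores = classification_scores(y_true, y_pred)
print(scores)
```

Swapping the meaning of 0 and 1 swaps TPR and TNR, which is why the annotation table must encode positives as Class = 1.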

Regressors

- CoxRegression

Accuracy scores

- concordance_index

- dynamic_auc

- hazard_ratio

- logrank
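For orientation, the concordance index can be computed as the share of comparable sample pairs that the model ranks correctly. The following is a simplified pure-Python sketch of Harrell's C, not the ExhauFS implementation: a pair is counted as comparable only when the sample with the shorter time had an event, and pairs with equal times are skipped.

```python
def concordance_index(times, events, risk_scores):
    """Simplified Harrell's concordance index.

    times: time to event or censoring; events: 1 if the event occurred;
    risk_scores: higher score means higher predicted risk (earlier event).
    """
    concordant = 0.0
    comparable = 0
    n = len(times)
    for i in range(n):
        if not events[i]:
            continue  # a censored sample cannot be the earlier one in a pair
        for j in range(n):
            if times[i] < times[j]:
                comparable += 1
                if risk_scores[i] > risk_scores[j]:
                    concordant += 1.0
                elif risk_scores[i] == risk_scores[j]:
                    concordant += 0.5  # tied predictions count as half
    if comparable == 0:
        raise ValueError("no comparable pairs")
    return concordant / comparable


# Times and events taken from the survival annotation example above;
# the risk scores are made up for illustration.
times = [100.1, 500.2, 40.5, 66.7]
events = [1, 0, 1, 1]
risk_scores = [0.5, 0.1, 0.9, 0.8]
print(concordance_index(times, events, risk_scores))
```

A value of 0.5 corresponds to random ranking, and 1.0 to a perfect ordering of samples by risk.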

Tutorials

Toy example

As a toy example of how ExhauFS works, we used a small cervical cancer dataset with 19 features and 72 samples.

Transformed data and config used for pipeline can be found in OneDrive.

The purpose of the toy example is to show that an exhaustive search over all triples of features can yield better results than the standard approach of training a classifier on all the features and then selecting the most important ones.

Executing the exhaufs build classifiers -c <config path> command produces result files in the specified output directory:

models.csv

Ranking all models in this file by their performance on the "Training" set shows that almost all models have an accuracy score of 1.0.

Among these models, there are multiple cases with particularly high accuracy on the "Validation" set:

| features | Validation;min_TPR_TNR | Training;min_TPR_TNR | n | k |

|---|---|---|---|---|

| ... | ... | ... | ... | ... |

| behavior_eating;norm_fulfillment;empowerment_knowledge | 0.9 | 1.0 | 19 | 3 |

| ... | ... | ... | ... | ... |

To get a full summary of a particular model (in our case, the one constructed on the above three features), we need to add features_subset with those features to the config file and run exhaufs summary classifiers -c <config path>, which will, again, produce multiple files in the specified output directory, the most important of which are:

- report.txt (contains detailed accuracy scores for all datasets)

- ROC_Training.pdf (contains roc-auc curve for training set)

- ROC_Validation.pdf (contains roc-auc curve for validation set)
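Picking a model from models.csv can be scripted. The sketch below assumes the column names and the ";"-separated features field shown in the table above; the first row is made up, and the helper name best_feature_subset is hypothetical. How features_subset must be formatted in the config is not stated here, so the resulting list is only printed.

```python
import csv
import io


def best_feature_subset(handle, score_column):
    """Return the feature list of the model with the highest given score."""
    rows = list(csv.DictReader(handle))
    best = max(rows, key=lambda row: float(row[score_column]))
    return best["features"].split(";")


# Second row is taken from the table above; the first row is made up.
models_text = (
    "features,Validation;min_TPR_TNR,Training;min_TPR_TNR,n,k\n"
    "feature_a;feature_b;feature_c,0.6,1.0,19,3\n"
    "behavior_eating;norm_fulfillment;empowerment_knowledge,0.9,1.0,19,3\n"
)
best = best_feature_subset(io.StringIO(models_text), "Validation;min_TPR_TNR")
print(best)
```

Note that choosing a model by its validation score and then reporting the same score is optimistic; an independent dataset gives a fairer estimate.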

Breast cancer classification

TODO: add correct links

As a real-life example of the classification part of the tool, we used a breast cancer dataset.

Transformed data and config used for pipeline can be found in OneDrive.

The main objective was to analyse the contribution of different pre-processing and feature [pre]selection techniques.

Using z-score normalization, a t-test as the feature selector and KBinsDiscretizer (binarization) as the pre-processor, we achieved good results in terms of the number of models passing the threshold on the validation set relative to the number of models passing the threshold on the training and filtration sets. This indicates that the performance is not due to chance and that the models are actually "good".

First of all, we need to calculate an appropriate grid of n/k values, so that the pipeline knows which features and subsets to use.

To do so, we need to define the maximum time we want the pipeline to spend on a single pair of (n, k).

In our case, we chose 12 hours. And since we don't want to analyse classifiers with more than 20 features, we set max_k to 20.

Executing exhaufs estimate classifiers -c <config path> --max_estimated_time 12 --max_k 20 produces an n/k grid table in the output directory, which looks like this:

| n | k | Estimated time |

|---|---|---|

| ... | ... | ... |

| 59 | 4 | 2.9192129150403865 |

| 37 | 5 | 2.8854977554500105 |

| 28 | 6 | 2.5242263025045393 |

| 24 | 7 | 2.3660491471767426 |

We can use the path to the above file as the n_k_path value in the config. Executing the exhaufs build classifiers -c <config path> command then produces pipeline result files in the specified output directory:

summary_n_k.csv

Shows that above certain values of k, almost 100% of the classifiers passed the threshold of 0.65 for minimum of TPR and TNR.

TODO: add real table

| n | k | num_training_reliable | num_validation_reliable | percentage_reliable |

|---|---|---|---|---|

| 19 | 2 | 137 | 41 | 29.927007299270077 |

| 19 | 3 | 925 | 258 | 29.927007299270077 |

| 19 | 4 | 3859 | 1252 | 32.44363824825084 |

models.csv

Ranking all models in this file by their performance on the "Training" set shows that almost all models have an accuracy score of 1.0.

Among these models, there are multiple cases with particularly high accuracy on the "Validation" set:

| features | Validation;min_TPR_TNR | Training;min_TPR_TNR | n | k |

|---|---|---|---|---|

| ... | ... | ... | ... | ... |

| behavior_eating;norm_fulfillment;empowerment_knowledge | 0.9 | 1.0 | 19 | 3 |

| ... | ... | ... | ... | ... |

Then, to get a full summary of a particular model (in our case, the one constructed on the above three features), we need to add features_subset with those features to the config file and run exhaufs summary classifiers -c <config path>, which will, again, produce multiple files in the specified output directory, the most important of which are:

- report.txt (contains detailed accuracy scores for all datasets)

- ROC_Training.pdf (contains roc-auc curve for training set)

- ROC_Validation.pdf (contains roc-auc curve for validation set)

Colorectal cancer survival regression

TODO: add correct links

As a real-life example of the regression part of the tool, we used a colorectal cancer dataset.

Transformed data and config used for pipeline can be found in OneDrive.

As with classification, the main objective was to analyse the contribution of different feature [pre]selection techniques and accuracy scores using Cox Regression as the main model.

We achieved the best results using concordance_index as a feature selector and as the main scoring function.

Again, as with classification, we first need to make an n/k grid table for the pipeline.

After choosing the maximum time and k values (in this case, the maximum time is 3 hours and the maximum k is 20), we can run exhaufs estimate regressors -c <config path> --max_estimated_time 3 --max_k 20 and use the resulting table as the n/k grid for the pipeline.

Executing the exhaufs build regressors -c <config path> command produces result files in the specified output directory:

summary_n_k.csv

Shows that above certain values of k, close to 95% of the regressors passed the threshold of 0.6 for concordance index.

| n | k | ... | percentage_reliable |

|---|---|---|---|

| ... | ... | ... | ... |

| 21 | 9 | ... | 79.94548176605181 |

| 20 | 10 | ... | 88.44062562932133 |

| 20 | 11 | ... | 93.06034157506852 |

| 20 | 12 | ... | 96.4579532546212 |

| 20 | 13 | ... | 98.52712732293884 |

| 21 | 14 | ... | 98.68958543983824 |

| 22 | 15 | ... | 98.8608905764584 |

| 22 | 16 | ... | 99.55598455598457 |

| ... | ... | ... | ... |

models.csv

If we take only models with k=7 and sort them by the average of the concordance index on the training and filtration sets, we find one model with quite high scores: concordance index = 0.71, hazard ratio = 3, 3-year AUC = 0.67, logrank = 3.1.

TODO: add features

Then, to get a full summary of this model, we need to add features_subset with those features to the config file and run exhaufs summary regressors -c <config path>, which will, again, produce multiple files in the specified output directory, the most important of which are:

- report.txt (contains detailed accuracy scores for all datasets)

- KM_Training.pdf (contains Kaplan-Meier curve for training set)

- KM_Filtration.pdf (contains Kaplan-Meier curve for filtration set)

- KM_Validation.pdf (contains Kaplan-Meier curve for validation set)

etc

Breast and colorectal cancer microarray datasets: OneDrive.
