Neural Question Answering & Semantic Search at Scale. Use modern transformer based models like BERT to find answers in large document collections

This project has been archived.

The maintainers of this project have marked this project as archived. No new releases are expected.

Project description

Haystack is an end-to-end framework that enables you to build powerful and production-ready pipelines for different search use cases. Whether you want to perform Question Answering or semantic document search, you can use the State-of-the-Art NLP models in Haystack to provide unique search experiences and allow your users to query in natural language. Haystack is built in a modular fashion so that you can combine the best technology from other open-source projects like Huggingface's Transformers, Elasticsearch, or Milvus.

What to build with Haystack

- Ask questions in natural language and find granular answers in your documents.

- Perform semantic search and retrieve documents according to meaning, not keywords

- Use off-the-shelf models or fine-tune them to your domain.

- Use user feedback to evaluate, benchmark, and continuously improve your live models.

- Leverage existing knowledge bases and better handle the long tail of queries that chatbots receive.

- Automate processes by automatically applying a list of questions to new documents and using the extracted answers.

Core Features

- Latest models: Utilize all latest transformer-based models (e.g., BERT, RoBERTa, MiniLM) for extractive QA, generative QA, and document retrieval.

- Modular: Multiple choices to fit your tech stack and use case. Pick your favorite database, file converter, or modeling framework.

- Open: 100% compatible with HuggingFace's model hub. Tight interfaces to other frameworks (e.g., Transformers, FARM, sentence-transformers)

- Scalable: Scale to millions of docs via retrievers, production-ready backends like Elasticsearch / FAISS, and a fastAPI REST API

- End-to-End: All tooling in one place: file conversion, cleaning, splitting, training, eval, inference, labeling, etc.

- Developer friendly: Easy to debug, extend and modify.

- Customizable: Fine-tune models to your domain or implement your custom DocumentStore.

- Continuous Learning: Collect new training data via user feedback in production & improve your models continuously

| :ledger: Docs | Usage, Guides, API documentation ... |

| :beginner: Quick Demo | Quickly see what Haystack offers |

| :floppy_disk: Installation | How to install Haystack |

| :art: Key Components | Overview of core concepts |

| :mortar_board: Tutorials | Jupyter/Colab Notebooks & Scripts |

| :eyes: How to use Haystack | Basic explanation of concepts, options and usage |

| :heart: Contributing | We welcome all contributions! |

| :vulcan_salute: Community | Slack, Twitter, Stack Overflow, GitHub Discussions |

| :bar_chart: Benchmarks | Speed & Accuracy of Retriever, Readers and DocumentStores |

| :telescope: Roadmap | Public roadmap of Haystack |

| :newspaper: Blog | Read our articles on Medium |

Quick Demo

The quickest way to see what Haystack offers is to start a Docker Compose demo application:

1. Update/install Docker and Docker Compose, then launch Docker

apt-get update && apt-get install docker && apt-get install docker-compose

service docker start

2. Clone Haystack repository

git clone https://github.com/deepset-ai/haystack.git

3. Pull images & launch demo app

cd haystack

docker-compose pull

docker-compose up

# Or on a GPU machine: docker-compose -f docker-compose-gpu.yml up

You should be able to see the following in your terminal window as part of the log output:

..

ui_1 | You can now view your Streamlit app in your browser.

..

ui_1 | External URL: http://192.168.108.218:8501

..

haystack-api_1 | [2021-01-01 10:21:58 +0000] [17] [INFO] Application startup complete.

4. Open the Streamlit UI for Haystack by pointing your browser to the "External URL" from above.

You should see the following:

You can then try different queries against a pre-defined set of indexed articles related to Game of Thrones.

Note: The following containers are started as a part of this demo:

- Haystack API: listens on port 8000

- DocumentStore (Elasticsearch): listens on port 9200

- Streamlit UI: listens on port 8501

Please note that the demo will publish the container ports to the outside world. We suggest that you review the firewall settings depending on your system setup and the security guidelines.

Installation

If you're interested in learning more about Haystack and using it as part of your application, we offer several options.

1. Installing from a package

You can install Haystack by using pip.

pip3 install farm-haystack

Please check our page on PyPi for more information.

2. Installing from GitHub

You can also clone it from GitHub — in case you'd like to work with the master branch and check the latest features:

git clone https://github.com/deepset-ai/haystack.git

cd haystack

pip install --editable .

To update your installation, do a git pull. The --editable flag will update changes immediately.

3. Installing on Windows

On Windows, you might need:

pip install farm-haystack -f https://download.pytorch.org/whl/torch_stable.html

Key Components

- FileConverter: Extracts pure text from files (pdf, docx, pptx, html, and many more).

- PreProcessor: Cleans and splits the text into smaller chunks.

- DocumentStore: Database storing the documents, metadata, and vectors for our search. We recommend Elasticsearch or FAISS but also have more light-weight options for fast prototyping (SQL or In-Memory).

- Retriever: Fast algorithms that identify candidate documents for a given query from a large collection of documents. Retrievers narrow down the search space significantly and are therefore crucial for scalable QA. Haystack supports sparse methods (TF-IDF, BM25, custom Elasticsearch queries) and state of the art dense methods (e.g., sentence-transformers and Dense Passage Retrieval)

- Ranker: Neural network (e.g., BERT or RoBERTA) that re-ranks top-k retrieved documents. The Ranker is an optional component and uses a TextPairClassification model under the hood. This model calculates semantic similarity of each of the top-k retrieved documents with the query.

- Reader: Neural network (e.g., BERT or RoBERTA) that reads through texts in detail to find an answer. The Reader takes multiple passages of text as input and returns top-n answers. Models are trained via FARM or Transformers on SQuAD like tasks. You can load a pre-trained model from Hugging Face's model hub or fine-tune it on your domain data.

- Generator: Neural network (e.g., RAG) that generates an answer for a given question conditioned on the retrieved documents from the retriever.

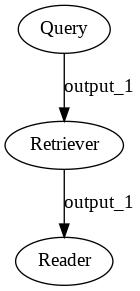

- Pipeline: Stick building blocks together to highly custom pipelines that are represented as Directed Acyclic Graphs (DAG). Think of it as "Apache Airflow for search".

- REST API: Exposes a simple API based on fastAPI for running QA search, uploading files, and collecting user feedback for continuous learning.

- Haystack Annotate: Create custom QA labels to improve the performance of your domain-specific models. Hosted version or Docker images.

It's quite simple to begin experimenting with Haystack. We'd recommend going through the Tutorials section below, but here's an example code structure describing how to approach Haystack with the DocumentStore based on Elasticsearch.

# Run elasticsearch, e.g. via docker run -d -p 9200:9200 -e "discovery.type=single-node" elasticsearch:7.6.2

# DB to store your docs

document_store = ElasticsearchDocumentStore(host="localhost", username="", password="",

index="document", embedding_dim=768,

embedding_field="embedding")

# Index your docs

# (Options: Convert text from PDFs etc. via FileConverter; Split and clean docs with the PreProcessor)

docs = [Document(text="Arya accompanies her father Ned and her sister Sansa to King's Landing. Before their departure ...", meta={}),

...]

document_store.write_documents([doc])

# Init Retriever: Fast algorithm to identify most promising candidate docs

# (Options: DPR, TF-IDF, Elasticsearch, Plain Embeddings ..)

retriever = DensePassageRetriever(document_store=document_store,

query_embedding_model="facebook/dpr-question_encoder-single-nq-base",

passage_embedding_model="facebook/dpr-ctx_encoder-single-nq-base",

)

document_store.update_embeddings(retriever)

# Init Reader: Powerful but slower neural model

# (Options: FARM or Transformers Framework; Extractive or generative models)

reader = FARMReader(model_name_or_path="deepset/roberta-base-squad2", use_gpu=True)

# The Pipeline sticks together Reader + Retriever to a DAG

# There are many different pipeline types, and you can easily build your own

pipeline = ExtractiveQAPipeline(reader, retriever)

# Voilá! Ask a question!

prediction = pipeline.run(query="Who is the father of Arya Stark?", top_k_retriever=10,top_k_reader=3)

print_answers(prediction, details="minimal")

[ { 'answer': 'Eddard',

'context': """... She travels with her father, Eddard, to

King's Landing when he is made Hand of the King ..."""},

{ 'answer': 'Ned',

'context': """... girl disguised as a boy all along and is surprised

to learn she is Arya, Ned Stark's daughter ..."""},

{ 'answer': 'Ned',

'context': """... Arya accompanies her father Ned and her sister Sansa to

King's Landing. Before their departure ..."""}

]

Tutorials

If you'd like to learn more about Haystack, feel free to go through the tutorials below. All tutorials include both .ipynb and .py code.

- Tutorial 1 - Basic QA Pipeline: Jupyter notebook | Colab | Python

- Tutorial 2 - Fine-tuning a model on own data: Jupyter notebook | Colab | Python

- Tutorial 3 - Basic QA Pipeline without Elasticsearch: Jupyter notebook | Colab | Python

- Tutorial 4 - FAQ-style QA: Jupyter notebook | Colab | Python

- Tutorial 5 - Evaluation of the whole QA-Pipeline: Jupyter noteboook | Colab | Python

- Tutorial 6 - Better Retrievers via "Dense Passage Retrieval": Jupyter noteboook | Colab | Python

- Tutorial 7 - Generative QA via "Retrieval-Augmented Generation": Jupyter noteboook | Colab | Python

- Tutorial 8 - Preprocessing: Jupyter noteboook | Colab | Python

- Tutorial 9 - DPR Training: Jupyter noteboook | Colab | Python

- Tutorial 10 - Knowledge Graph: Jupyter noteboook | Colab | Python

- Tutorial 11 - Pipelines: Jupyter noteboook | Colab | Python

- Tutorial 12 - Long-Form Question Answering: Jupyter noteboook | Colab | Python

- Tutorial 13 - Question Generation: Jupyter noteboook | Colab | Python

- Tutorial 14 - Query Classifier: Jupyter noteboook | Colab | Python

How to use Haystack

Below you'll find more detailed descriptions of various Haystack components along with quick examples.

File Conversion | Preprocessing | DocumentStores | Retrievers | Readers | Pipelines | REST API | Labeling Tool

Please also refer to our documentation.

1) File Conversion

What

Different converters to extract text from your original files (pdf, docx, txt, md, html). While it's almost impossible to cover all types, layouts, and special cases (especially in PDFs), we cover the most common formats (incl. multi-column) and extract meta-information (e.g., page splits). The converters are easily extendable so that you can customize them for your files if needed. We also provide an OCR based approach for converting images or PDFs.

Available options

- Txt

- PDF (incl. OCR)

- Docx

- Apache Tika (Supports > 340 file formats)

- Markdown

- Images

Example

#PDF

from haystack.file_converter.pdf import PDFToTextConverter

converter = PDFToTextConverter(remove_numeric_tables=True, valid_languages=["de","en"])

doc = converter.convert(file_path=file, meta=None)

# => {"text": "text first page \f text second page ...", "meta": None}

#DOCX

from haystack.file_converter.docx import DocxToTextConverter

converter = DocxToTextConverter(remove_numeric_tables=True, valid_languages=["de","en"])

doc = converter.convert(file_path=file, meta=None)

# => {"text": "some text", "meta": None}

2) Preprocessing

What

Cleaning and splitting your texts are crucial steps that will directly impact the speed and accuracy of your search. The splitting of larger texts is especially important for achieving fast query speed. The longer the texts that the retriever passes to the reader, the slower your queries.

Available Options

We provide a basic PreProcessor class that allows:

- clean whitespace, headers, footer, and empty lines

- split by words, sentences, or passages

- option for "overlapping" splits

- option to never split within a sentence

You can easily extend this class to your custom requirements.

Example

converter = PDFToTextConverter(remove_numeric_tables=True, valid_languages=["en"])

processor = PreProcessor(clean_empty_lines=True,

clean_whitespace=True,

clean_header_footer=True,

split_by="word",

split_length=200,

split_respect_sentence_boundary=True)

docs = []

for f_name, f_path in zip(filenames, filepaths):

# Optional: Supply any meta data here

# the "name" field will be used by DPR if embed_title=True, rest is custom and can be named arbitrarily

cur_meta = {"name": f_name, "category": "a" ...}

# Run the conversion on each file (PDF -> 1x doc)

d = converter.convert(f_path, meta=cur_meta)

# clean and split each dict (1x doc -> multiple docs)

d = processor.process(d)

docs.extend(d)

# at this point docs will be [{"text": "some", "meta":{"name": "myfilename", "category":"a"}},...]

document_store.write_documents(docs)

3) DocumentStores

What

- Store your texts, metadata, and optionally embeddings

- Documents should be chunked into smaller units (e.g., paragraphs) before indexing to make the results returned by the Retriever more granular and accurate.

Available Options

- Elasticsearch

- FAISS

- SQL

- InMemory

- Milvus

- Weaviate

Example

# Run elasticsearch, e.g. via docker run -d -p 9200:9200 -e "discovery.type=single-node" elasticsearch:7.6.2

# Connect

document_store = ElasticsearchDocumentStore(host="localhost", username="", password="", index="document")

# Get all documents

document_store.get_all_documents()

# Query

document_store.query(query="What is the meaning of life?", filters=None, top_k=5)

document_store.query_by_embedding(query_emb, filters=None, top_k=5)

-> See docs for details

4) Retrievers

What

The Retriever is a fast "filter" that can quickly go through the entire document store and pass a set of candidate documents to the Reader. It is a tool for sifting out the obvious negative cases, saving the Reader from doing more work than it needs to, and speeding up the querying process. There are two fundamentally different categories of retrievers: sparse (e.g., TF-IDF, BM25) and dense (e.g., DPR, sentence-transformers).

Available Options

- DensePassageRetriever

- ElasticsearchRetriever

- EmbeddingRetriever

- TfidfRetriever

Example

retriever = DensePassageRetriever(document_store=document_store,

query_embedding_model="facebook/dpr-question_encoder-single-nq-base",

passage_embedding_model="facebook/dpr-ctx_encoder-single-nq-base",

use_gpu=True,

batch_size=16,

embed_title=True)

retriever.retrieve(query="Why did the revenue increase?")

# returns: [Document, Document]

-> See docs for details

5) Readers

What

Neural networks (i.e., mostly Transformer-based) that read through texts in detail to find an answer. Use diverse models like BERT, RoBERTa or XLNet trained via FARM or on SQuAD-like datasets. The Reader takes multiple passages of text as input and returns top-n answers with corresponding confidence scores. Both readers can load either a local model or any public model from Hugging Face's model hub

Available Options

- FARMReader: Reader based on FARM incl. extensive configuration options and speed optimizations

- TransformersReader: Reader based on the

pipelineclass of HuggingFace's Transformers.

Both Readers can load models directly from HuggingFace's model hub.

Example

reader = FARMReader(model_name_or_path="deepset/roberta-base-squad2",

use_gpu=False, no_ans_boost=-10, context_window_size=500,

top_k_per_candidate=3, top_k_per_sample=1,

num_processes=8, max_seq_len=256, doc_stride=128)

# Optional: Training & eval

reader.train(...)

reader.eval(...)

# Predict

reader.predict(question="Who is the father of Arya Starck?", documents=documents, top_k=3)

-> See docs for details

6) Pipelines

What

To build modern search pipelines, you need two things: powerful building blocks and a flexible way to stick them together.

The Pipeline class is built exactly for this purpose and enables many search scenarios beyond QA. The core idea: you can make a Directed Acyclic Graph (DAG) where each node is one "building block" (Reader, Retriever, Generator, and so on).

Available Options

- Standard nodes: Reader, Retriever, Generator, etc.

- Join nodes: For example, combine results of multiple retrievers via the

JoinDocumentsnode - Decision Nodes: For example, classify an incoming query and, depending on the results, execute only a particular branch of your graph

Example

A minimal Open-Domain QA Pipeline:

p = Pipeline()

p.add_node(component=retriever, name="ESRetriever1", inputs=["Query"])

p.add_node(component=reader, name="QAReader", inputs=["ESRetriever1"])

res = p.run(query="What did Einstein work on?", params={"retriever": {"top_k": 1}})

You can draw the DAG to inspect better what you are building:

p.draw(path="custom_pipe.png")

-> See docs for details and example of more complex pipelines

7) REST API

What

A simple REST API based on FastAPI to:

- search answers in texts (extractive QA)

- search answers by comparing user question to existing questions (FAQ-style QA)

- collect & export user feedback on answers to gain domain-specific training data (feedback)

- allow basic monitoring of requests (currently via APM in Kibana)

Example

To serve the API, adjust the values in rest_api/config.py and run:

gunicorn rest_api.application:app -b 0.0.0.0:8000 -k uvicorn.workers.UvicornWorker -t 300

You will find the Swagger API documentation at http://127.0.0.1:8000/docs

8) Labeling Tool

- Use the hosted version (Beta) or deploy it yourself with the Docker Images.

- Create labels with different techniques: Come up with questions (+ answers) while reading passages (SQuAD style) or have a set of pre-defined questions and look for answers in the document (~ Natural Questions).

- Structure your work via organizations, projects, users

- Upload your documents or import labels from an existing SQuAD-style dataset

:heart: Contributing

We are very open to the community's contributions - be it a quick fix of a typo, or a completely new feature! You don't need to be a Haystack expert to provide meaningful improvements. To avoid any extra work on either side, please check our Contributor Guidelines first.

We'd also like to invite you to our Slack community channels. Please join here!

Tests

Tests will automatically run in our CI for every commit you push to your PR. You can also run them locally by executing pytest in your terminal from the root folder of this repository. If you want to run all tests locally, you'll need all document stores running in the background. You can launch them like this:

docker run -d -p 9200:9200 -e "discovery.type=single-node" -e "ES_JAVA_OPTS=-Xms128m -Xmx128m" elasticsearch:7.9.2

docker run -d -p 19530:19530 -p 19121:19121 milvusdb/milvus:1.1.0-cpu-d050721-5e559c

docker run -d -p 8080:8080 --name haystack_test_weaviate --env AUTHENTICATION_ANONYMOUS_ACCESS_ENABLED='true' --env PERSISTENCE_DATA_PATH='/var/lib/weaviate' semitechnologies/weaviate:1.7.0

docker run -d -p 7200:7200 --name haystack_test_graphdb deepset/graphdb-free:9.4.1-adoptopenjdk11

docker run -d -p 9998:9998 -e "TIKA_CHILD_JAVA_OPTS=-JXms128m" -e "TIKA_CHILD_JAVA_OPTS=-JXmx128m" apache/tika:1.24.1

Then run all tests:

cd test

pytest

To just run individual tests:

pytest -v test_retriever.py::test_dpr_embedding

To just select a logical subset of tests via markers and the optional "not" keyword:

pytest -m not elasticsearch

pytest -m elasticsearch

pytest -m generator

pytest -m tika

pytest -m not slow

...

If you want to run all test cases but not with all document store variants, you can make use of our --document_store:

pytest -v test_retriever.py::test_dpr_embedding --document_store_type="faiss"

pytest -v test_retriever.py::test_dpr_embedding --document_store_type="faiss, memory"

Project details

Release history Release notifications | RSS feed

Download files

Download the file for your platform. If you're not sure which to choose, learn more about installing packages.

Source Distribution

Built Distribution

Filter files by name, interpreter, ABI, and platform.

If you're not sure about the file name format, learn more about wheel file names.

Copy a direct link to the current filters

File details

Details for the file farm-haystack-0.10.0.tar.gz.

File metadata

- Download URL: farm-haystack-0.10.0.tar.gz

- Upload date:

- Size: 209.0 kB

- Tags: Source

- Uploaded using Trusted Publishing? No

- Uploaded via: twine/3.2.0 pkginfo/1.5.0.1 requests/2.24.0 setuptools/49.1.0 requests-toolbelt/0.9.1 tqdm/4.60.0 CPython/3.7.6

File hashes

| Algorithm | Hash digest | |

|---|---|---|

| SHA256 |

97783a8d9a42afc6637b657d283b1a5aeb9c2e86358f516dc83ce7bb97f0f8c8

|

|

| MD5 |

7481004487aee4f2f2f31bafe964d388

|

|

| BLAKE2b-256 |

e0cb92f0ba4c5defc831fe64d61d30909b245b968a587d536fc978087b60103e

|

File details

Details for the file farm_haystack-0.10.0-py3-none-any.whl.

File metadata

- Download URL: farm_haystack-0.10.0-py3-none-any.whl

- Upload date:

- Size: 200.2 kB

- Tags: Python 3

- Uploaded using Trusted Publishing? No

- Uploaded via: twine/3.2.0 pkginfo/1.5.0.1 requests/2.24.0 setuptools/49.1.0 requests-toolbelt/0.9.1 tqdm/4.60.0 CPython/3.7.6

File hashes

| Algorithm | Hash digest | |

|---|---|---|

| SHA256 |

521e8cb1289ab28c5d07a51b8bbcc85e40e346a1bfaabf6bbecc5c991c148e5b

|

|

| MD5 |

62de80edeccacaa83029ef06919feeb4

|

|

| BLAKE2b-256 |

1bb91b2890dbbe914eeba7132ed1dea3c1697af0640791a4d64f3a30f98bbefd

|