Taking the pain out of choosing a Python global optimizer


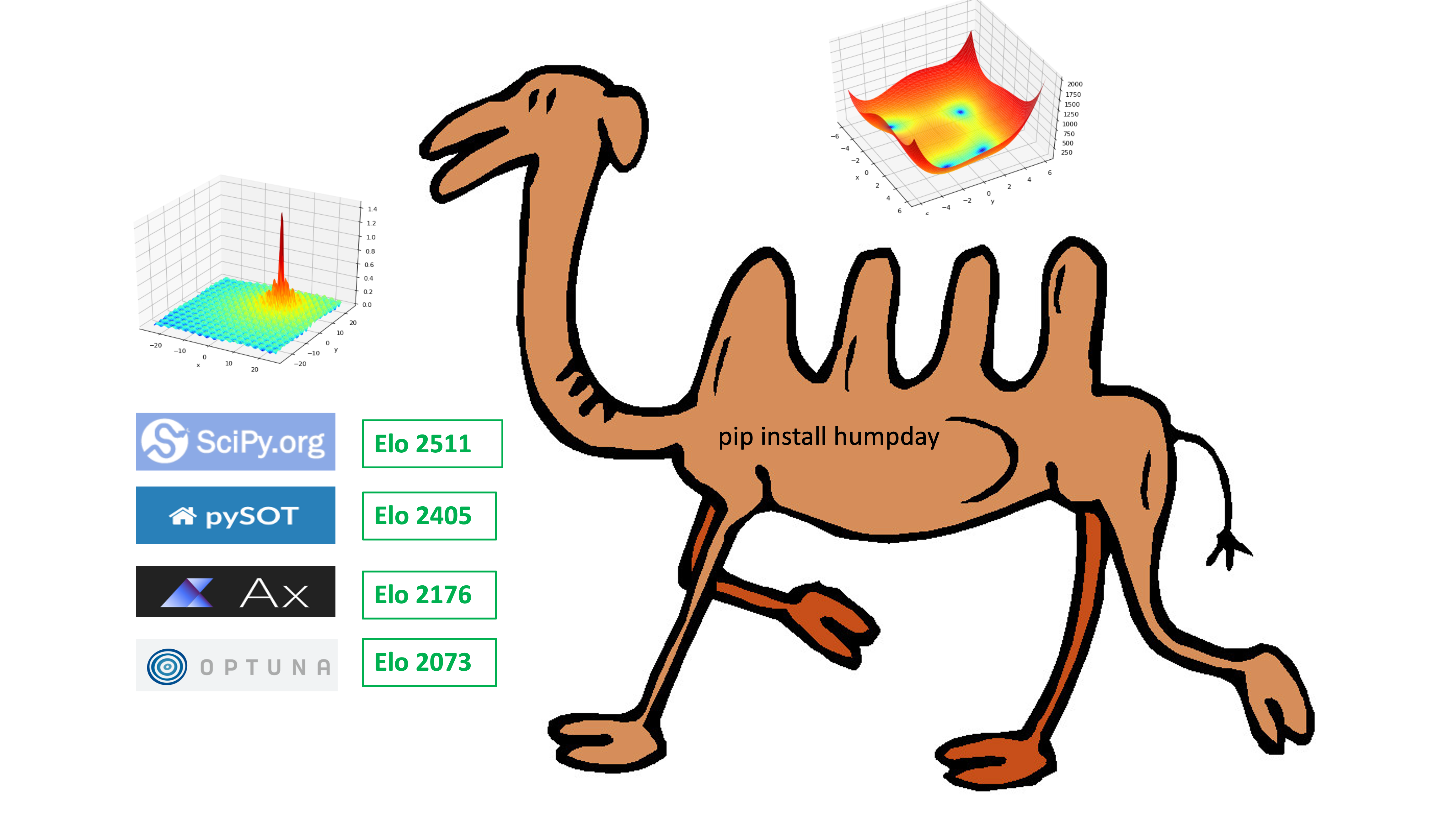

humpday optimizer Elo ratings

TLDR: Derivative-free optimizers from many packages in a common syntax

- There's a colab notebook that recommends a black-box derivative-free optimizer for your objective function.

- About fifty strategies drawn from various open source packages are assigned Elo ratings depending on dimension of the problem and number of function evaluations allowed.

What's HumpDay?

Hello and welcome to HumpDay, a package that helps you choose a Python global optimizer package, and a strategy therein, from Ax-Platform, bayesian-optimization, DLib, HyperOpt, NeverGrad, Optuna, Platypus, PyMoo, PySOT, Scipy classic and shgo, Skopt, nlopt, Py-BOBYQA, UltraOpt and maybe others by the time you read this. It also presents some of their functionality in a common calling syntax.

Cite or be cited

Pull requests at CITE.md are welcome. If your package is benchmarked here I'd like to get this bit right.

Install

Pick one of:

pip install humpday

pip install humpday[full]

The full option will try to install a slew of optim packages. You may prefer to do that piecemeal. See below.

Bleeding edge:

pip install git+https://github.com/microprediction/humpday

File an issue if you have problems. See this thread if you have issues on Apple silicon (M1) Macs.

The following sometimes helps:

pip install cython pybind11

brew install openblas

export OPENBLAS=/opt/homebrew/opt/openblas/lib/

Installing one optimizer at a time

pip install scikit-optimize

pip install optuna

pip install platypus-opt

pip install poap

pip install pysot

The following are really good, but not 100% stable on all platforms we've used:

pip install cmake

pip install ultraopt

pip install dlib

pip install ax-platform

pip install py-bobyqa

pip install hebo

pip install nlopt

pip install freelunch

Broken pending issue:

pip install bayesian-optimization

pip install nevergrad

Recommendations

Pass the dimension of the problem, the function evaluation budget and the time budget to receive suggestions that are independent of your problem set:

from pprint import pprint

from humpday import suggest

pprint(suggest(n_dim=5, n_trials=130, n_seconds=5*60))

where n_seconds is the total computation budget for the optimizer (not the objective function) over all 130 function evaluations. Or simply pass your objective function to recommend, which will time it and do something sensible:

import math
import time

from humpday import recommend

def my_objective(u):
    # Sleep to mimic an objective that is expensive to evaluate
    time.sleep(0.01)
    return u[0]*math.sin(u[1])

recommendations = recommend(my_objective, n_dim=21, n_trials=130)
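If you would rather call suggest yourself, it can help to time the objective by hand first. The sketch below is only one way to do that and is not a description of what recommend does internally. `estimate_eval_seconds` is a hypothetical helper, not part of humpday, and probing at the midpoint of the unit cube is an assumption about the objective's domain.

```python
import math
import time


def my_objective(u):
    time.sleep(0.01)  # stand-in for an expensive computation
    return u[0] * math.sin(u[1])


def estimate_eval_seconds(objective, n_dim, n_repeats=5):
    """Hypothetical helper: average wall-clock seconds per objective call."""
    u = [0.5] * n_dim  # assumes the midpoint of the unit cube is a valid input
    start = time.perf_counter()
    for _ in range(n_repeats):
        objective(u)
    return (time.perf_counter() - start) / n_repeats


n_trials = 130
per_eval = estimate_eval_seconds(my_objective, n_dim=21)
print(f"~{per_eval:.3f}s per evaluation, ~{per_eval * n_trials:.1f}s for {n_trials} trials")
```

Knowing roughly what the objective costs makes it easier to decide how much optimizer overhead (n_seconds) is worth tolerating on top of it.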

Points race

If you have more time, call points_race on a list of your own objective functions:

from humpday import points_race

points_race(objectives=[my_objective]*2, n_dim=5, n_trials=100)

See the colab notebook.
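A race is more informative when the objectives differ from one another. Here is a minimal sketch with two illustrative objectives, one smooth and one multimodal. It assumes objectives take a list of n_dim numbers in the unit cube, which is an assumption rather than something stated above. The function names and `run_race` are made up for this example. Only the `points_race` keyword arguments are taken from the call shown earlier.

```python
import math


def shifted_sphere(u):
    """Smooth bowl with its minimum at u_i = 0.3."""
    return sum((ui - 0.3) ** 2 for ui in u)


def rastrigin_like(u):
    """Multimodal surface: map [0, 1] to [-5.12, 5.12], then apply Rastrigin."""
    x = [10.24 * ui - 5.12 for ui in u]
    return 10 * len(x) + sum(xi ** 2 - 10 * math.cos(2 * math.pi * xi) for xi in x)


objectives = [shifted_sphere, rastrigin_like]


def run_race(n_dim=5, n_trials=100):
    # Imported lazily so the objectives can be checked without humpday installed.
    from humpday import points_race
    return points_race(objectives=objectives, n_dim=n_dim, n_trials=n_trials)
```

Call `run_race()` when you are ready to wait. The race evaluates every objective with many optimizers, so it can take a while.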

How it works

In the background, 50+ strategies are assigned Elo ratings by sister repo optimizer-elo-ratings. Oh I said that already. Never mind.
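For readers who have not met Elo ratings, here is a sketch of the textbook update rule applied to two optimizers. It assumes a "match" means both optimizers attack the same objective with the same budget, and the one that finds the lower minimum wins. The K-factor of 16 and the starting rating of 1600 are illustrative assumptions, not values taken from optimizer-elo-ratings.

```python
def expected_score(rating_a, rating_b):
    """Standard Elo expectation that A beats B."""
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / 400.0))


def elo_update(rating_a, rating_b, score_a, k=16.0):
    """Return updated (rating_a, rating_b).

    score_a is 1.0 if A won, 0.5 for a draw and 0.0 if A lost.
    k=16 is an assumed K-factor chosen for illustration.
    """
    delta = k * (score_a - expected_score(rating_a, rating_b))
    return rating_a + delta, rating_b - delta


# Two optimizers start level and A finds the lower minimum.
a, b = elo_update(1600.0, 1600.0, score_a=1.0)
print(a, b)  # 1608.0 1592.0
```

Because the expected score depends on the rating gap, beating a much higher rated optimizer moves the ratings more than beating a weaker one.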

Contribute

By all means contribute more to optimizers.

Articles
