Organize Machine Learning Experiments


🔥 Features

- Monitor running experiments from mobile phone or laptop

- Monitor hardware usage on any computer with a single command

- Integrate with just 2 lines of code (see examples below)

- Keep track of experiments, including information such as the git commit, configurations and hyper-parameters

- API for custom visualizations

- Pretty logs of training progress

- Open source!

Hosting the experiments server

Prerequisites

MongoDB must be installed before starting the server. For installation instructions, refer to the official MongoDB documentation.

Installation

Install the package using pip:

pip install labml-app

Starting the server

# Start the server on the default port (5005)
labml app-server

# Start the server on a different port
labml app-server --port PORT

Optional: to set up and configure Nginx on your server, refer to the Nginx setup guide.

You can access the user interface at http://localhost:{port} or, if the server runs on a separate machine, at http://{server-ip}:{port}.
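Before pointing a client at the server, it can help to confirm that something is actually listening on the chosen port. The following is a minimal sketch using only the Python standard library; `wait_for_server` is a hypothetical helper, not part of labml, and it only checks that a TCP connection succeeds, not that the labml UI is responding correctly.

```python
import socket
import time


def wait_for_server(host: str, port: int, timeout: float = 10.0) -> bool:
    """Return True once a TCP connection to (host, port) succeeds, False on timeout.

    Hypothetical helper: it only checks that a process is listening on the port;
    it does not send an HTTP request or validate the labml UI.
    """
    deadline = time.monotonic() + timeout
    while time.monotonic() < deadline:
        try:
            with socket.create_connection((host, port), timeout=1.0):
                return True
        except OSError:
            # Connection refused or timed out: the server is not up yet, retry shortly
            time.sleep(0.2)
    return False
```

For example, `wait_for_server("localhost", 5005)` checks the default server port; the same check can be pointed at MongoDB's default port, 27017.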

Monitor Experiments

Installation

- Install the package using pip.

pip install labml

- Create a file named .labml.yaml at the top level of your project folder and add the following line to it:

app_url: http://localhost:{port}/api/v1/default

# If the server runs on a different machine, use this line instead:

app_url: http://{server-ip}:{port}/api/v1/default
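If you set up several projects, the file can also be generated from a script. This is a minimal sketch, assuming the `app_url` format shown above and that overwriting an existing `.labml.yaml` is acceptable; `write_labml_yaml` is a hypothetical helper, not a labml function.

```python
from pathlib import Path


def write_labml_yaml(project_dir: str, host: str = "localhost", port: int = 5005) -> Path:
    """Write (or overwrite) .labml.yaml so the labml client reports to the given server.

    Hypothetical helper; the URL layout follows the app_url lines in this README.
    """
    app_url = f"http://{host}:{port}/api/v1/default"
    path = Path(project_dir) / ".labml.yaml"
    path.write_text(f"app_url: {app_url}\n")
    return path
```

For a server on another machine, you would call something like `write_labml_yaml(".", host="<server-ip>", port=5005)` from the project's top-level folder.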

PyTorch example

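from labml import tracker, experiment

conf = {'learning_rate': 1e-3, 'batch_size': 32}  # example hyper-parameters to record

def train():
    # Placeholder: run one training step/epoch and return (loss, accuracy)
    return 0.5, 0.9

with experiment.record(name='sample', exp_conf=conf):
    for i in range(50):
        loss, accuracy = train()
        tracker.save(i, {'loss': loss, 'accuracy': accuracy})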

Distributed training example

from labml import tracker, experiment

uuid = experiment.generate_uuid()  # generate once and use the same UUID on every machine

experiment.create(uuid=uuid,
                  name='distributed training sample',
                  distributed_rank=0,  # this process's rank: 0 .. distributed_world_size - 1
                  distributed_world_size=8,
                  )

# train() is your own training step returning (loss, accuracy)
with experiment.start():
    for i in range(50):
        loss, accuracy = train()
        tracker.save(i, {'loss': loss, 'accuracy': accuracy})
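Hard-coding `distributed_rank=0` only suits the first process: every process needs its own rank, while all of them must share one UUID. A minimal sketch of reading both from the environment, assuming a launcher such as `torchrun` that sets `RANK` and `WORLD_SIZE`, and assuming you export a hypothetical `EXPERIMENT_UUID` variable with the same value on every machine (for example, a value generated once with `experiment.generate_uuid()`). `run_identity` is a hypothetical helper, not part of labml.

```python
import os
import uuid
from typing import Mapping, Tuple


def run_identity(env: Mapping[str, str] = os.environ) -> Tuple[str, int, int]:
    """Return (experiment_uuid, rank, world_size) for the current process.

    RANK and WORLD_SIZE follow the convention of launchers such as torchrun.
    EXPERIMENT_UUID is a hypothetical variable exported identically on every machine.
    """
    rank = int(env.get("RANK", "0"))
    world_size = int(env.get("WORLD_SIZE", "1"))
    run_uuid = env.get("EXPERIMENT_UUID")
    if run_uuid is None:
        if world_size > 1:
            # Without a shared value, each process would generate its own UUID
            raise RuntimeError("EXPERIMENT_UUID must be set to the same value on every machine")
        run_uuid = uuid.uuid4().hex  # single-process run: a fresh UUID is enough
    return run_uuid, rank, world_size
```

The returned values would then be passed as `experiment.create(uuid=run_uuid, name=..., distributed_rank=rank, distributed_world_size=world_size)`.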

📚 Documentation

Guides

- API to create experiments

- Track training metrics

- Monitored training loop and other iterators

- API for custom visualizations

- Configurations management API

- Logger for stylized logging

🖥 Screenshots

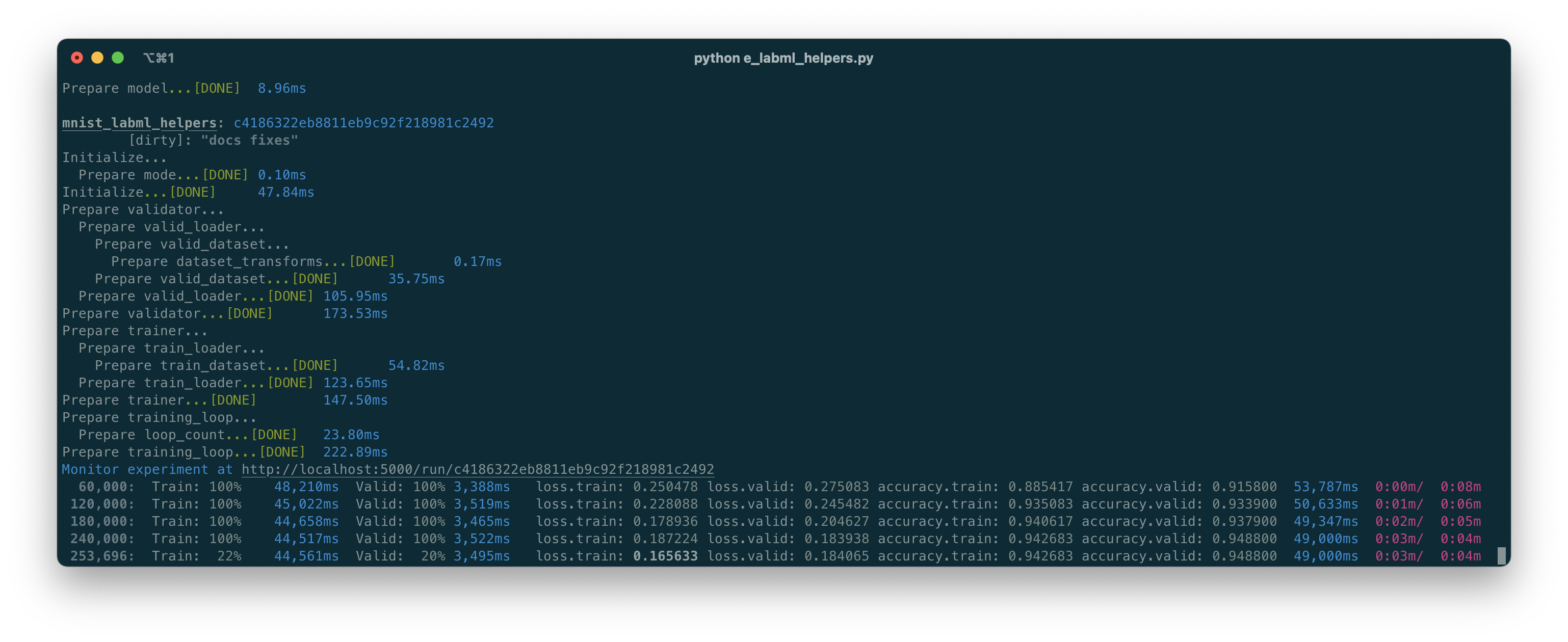

Formatted training loop output

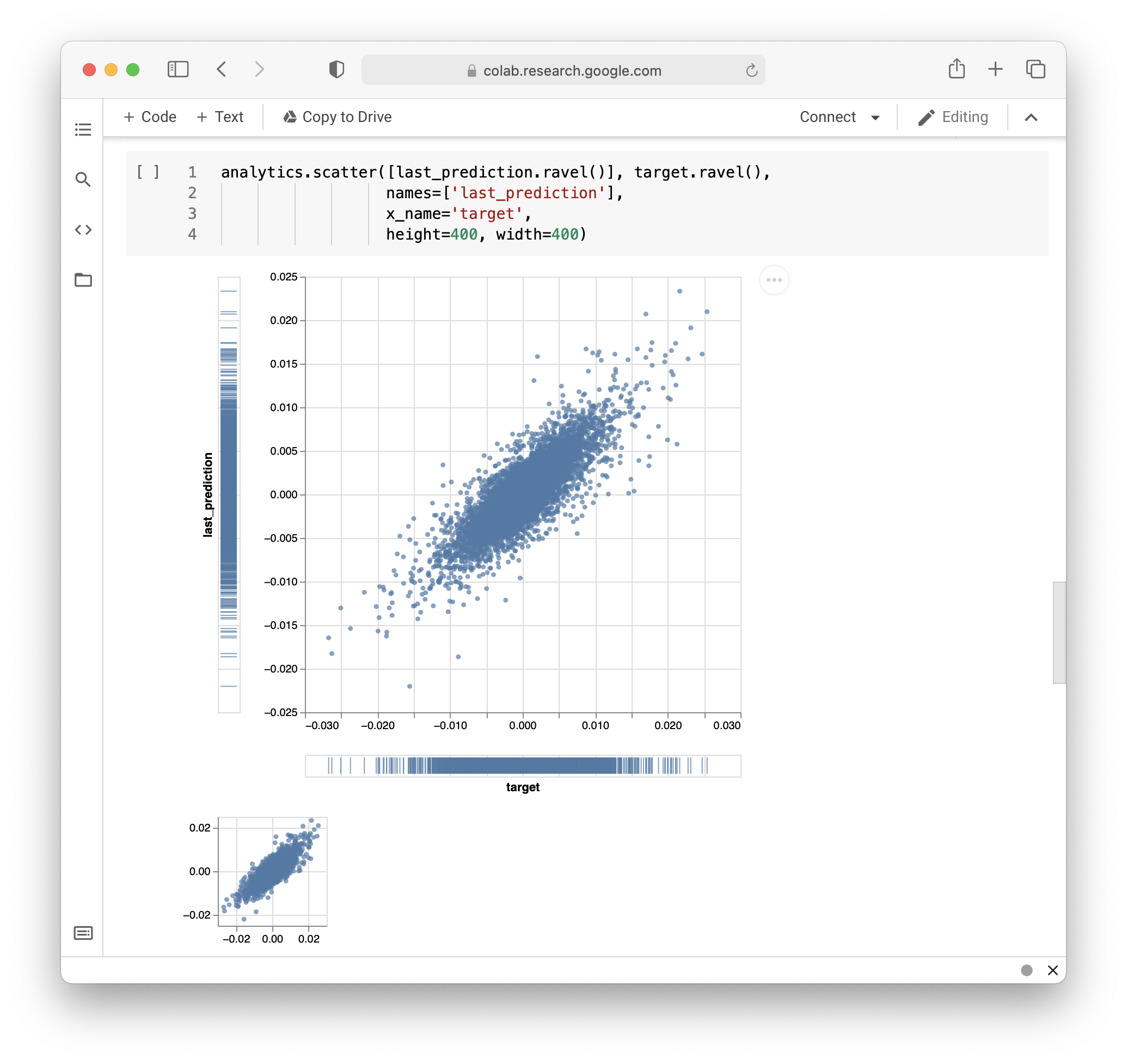

Custom visualizations based on TensorBoard logs

Monitoring hardware usage

# Install the package and the hardware-monitoring dependencies
pip install labml psutil py3nvml

# Start monitoring
labml monitor
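To keep the monitor running only while a training script is active, it can be launched as a child process. This is a minimal sketch using `subprocess`, assuming the `labml` command is on your `PATH`; `background_process` is a hypothetical helper, not part of labml.

```python
import subprocess
from contextlib import contextmanager
from typing import Iterator, Sequence


@contextmanager
def background_process(cmd: Sequence[str]) -> Iterator[subprocess.Popen]:
    """Run `cmd` in the background for the duration of the `with` block."""
    proc = subprocess.Popen(list(cmd))
    try:
        yield proc
    finally:
        proc.terminate()  # ask the process to exit (SIGTERM on POSIX)
        try:
            proc.wait(timeout=10)
        except subprocess.TimeoutExpired:
            proc.kill()  # force-stop if it ignores the request
            proc.wait()
```

For example, `with background_process(["labml", "monitor"]): run_training()`, where `run_training` stands in for your own training entry point.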

Citing

If you use LabML for academic research, please cite the library using the following BibTeX entry.

@misc{labml,
  author = {Varuna Jayasiri and Nipun Wijerathne and Adithya Narasinghe and Lakshith Nishshanke},
  title = {labml.ai: A library to organize machine learning experiments},
  year = {2020},
  url = {https://labml.ai/},
}


Download files


File details

Details for the file labml-0.5.3.tar.gz.

File metadata

- Download URL: labml-0.5.3.tar.gz

- Upload date:

- Size: 72.3 kB

- Tags: Source

- Uploaded using Trusted Publishing? No

- Uploaded via: twine/4.0.2 CPython/3.10.11

File hashes

| Algorithm | Hash digest |
|---|---|
| SHA256 | a04ad3a2d45cab9bde59f70f9897b43f3b55dbe690fdcef2461d11d2dc1b005a |
| MD5 | 6610c8aa05b6177f52e71784f42a78c9 |
| BLAKE2b-256 | 0eeaedd3fdfc99a4a67eb0618107c8033a6a5501455f6998894793a4b6405de3 |

File details

Details for the file labml-0.5.3-py3-none-any.whl.

File metadata

- Download URL: labml-0.5.3-py3-none-any.whl

- Upload date:

- Size: 94.6 kB

- Tags: Python 3

- Uploaded using Trusted Publishing? No

- Uploaded via: twine/4.0.2 CPython/3.10.11

File hashes

| Algorithm | Hash digest |
|---|---|
| SHA256 | f62647a1ba18086de0f297e25801260aa59614edb51aa62b5a52c9a7666554d6 |
| MD5 | d4098dbb40910410bbf015acfdafaea4 |
| BLAKE2b-256 | 72d3bbde1e96161837122700228bca6b0a829795cfdf7b74609170e4948e99d0 |
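To check a downloaded file against the digests listed above, you can recompute them locally. This is a minimal sketch with Python's `hashlib`, assuming the BLAKE2b-256 entry is BLAKE2b with a 32-byte digest; `file_digests` is a hypothetical helper.

```python
import hashlib
from typing import Dict


def file_digests(path: str, chunk_size: int = 1 << 20) -> Dict[str, str]:
    """Compute the SHA256, MD5 and BLAKE2b-256 hex digests of a file, reading it in chunks."""
    hashers = {
        "SHA256": hashlib.sha256(),
        "MD5": hashlib.md5(),
        "BLAKE2b-256": hashlib.blake2b(digest_size=32),  # 32 bytes = 256 bits
    }
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            for h in hashers.values():
                h.update(chunk)
    return {name: h.hexdigest() for name, h in hashers.items()}
```

For an unmodified download, `file_digests("labml-0.5.3.tar.gz")["SHA256"]` should equal `a04ad3a2d45cab9bde59f70f9897b43f3b55dbe690fdcef2461d11d2dc1b005a`.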