Federated Learning for the Edge


OpenFL is a Python framework for Federated Learning. It enables organizations to train and validate machine learning models on sensitive data. It increases privacy by allowing collaborative model training or validation across local private datasets without ever sharing that data with a central server. OpenFL is hosted by The Linux Foundation.

Overview

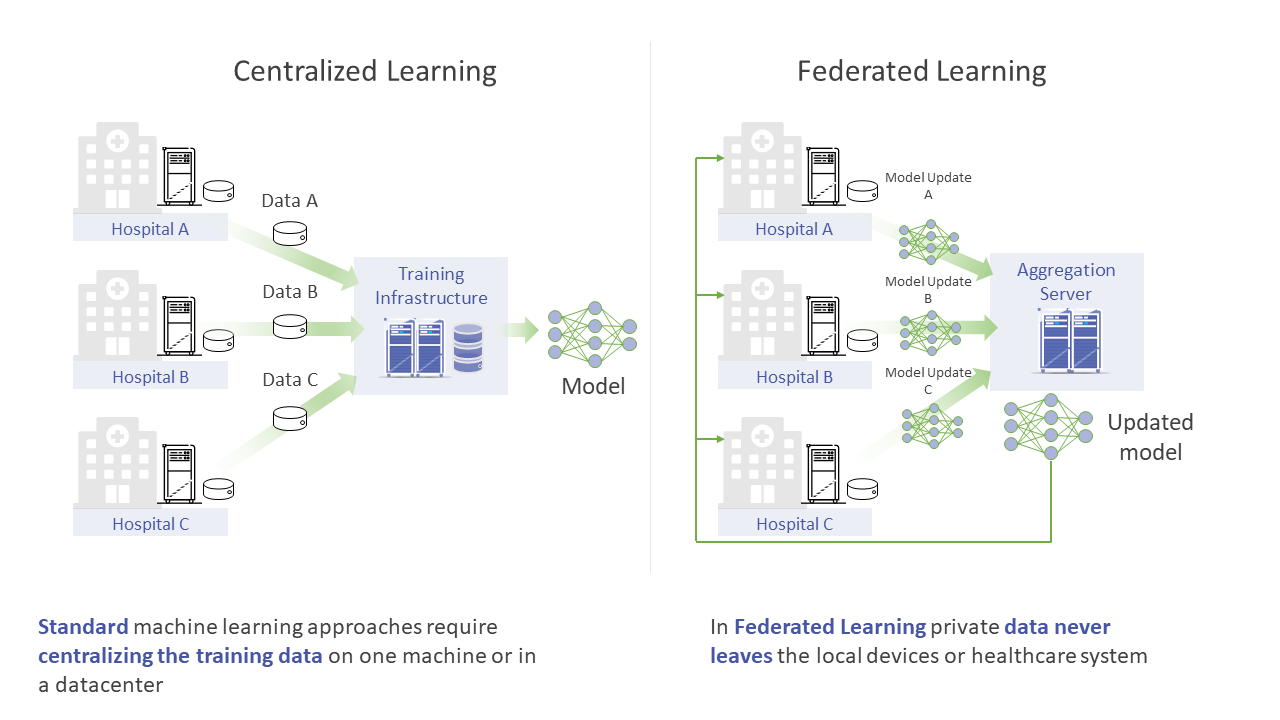

Federated Learning is a distributed machine learning approach that enables collaborative training and evaluation of models without sharing sensitive data such as personal information, patient records, financial data, or classified information. In a Federated Training experiment, the only data that needs to move is the model parameters and their updates. This is in contrast to a Centralized Learning regime, where all data must be moved to a central server or datacenter for massively parallel training.
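To make the data-movement point concrete, here is a back-of-the-envelope sketch. It assumes uncompressed 32-bit parameters, one model download and one update upload per collaborator per round, and no protocol overhead. The function names and the example sizes are hypothetical and are not OpenFL measurements.

```python
"""Rough traffic accounting: federated rounds vs. shipping raw data (illustrative only)."""


def federated_round_bytes(num_params: int, num_collaborators: int,
                          bytes_per_param: int = 4) -> int:
    # Each collaborator downloads the global model and uploads its update:
    # two full copies of the parameters per collaborator per round (no compression assumed).
    return 2 * num_params * bytes_per_param * num_collaborators


def centralized_upload_bytes(samples_per_site: int, bytes_per_sample: int,
                             num_sites: int) -> int:
    # Centralized learning ships every raw sample from every site to the server once.
    return samples_per_site * bytes_per_sample * num_sites


if __name__ == "__main__":
    # Hypothetical setup: 10M-parameter float32 model, 5 collaborators.
    per_round = federated_round_bytes(num_params=10_000_000, num_collaborators=5)
    print(f"federated traffic per round: {per_round / 1e9:.1f} GB")  # 0.4 GB
    print(f"federated traffic over 50 rounds: {50 * per_round / 1e9:.1f} GB")  # 20.0 GB
    # Hypothetical dataset: 2,000 samples of 1 MB each at each of 5 sites.
    raw = centralized_upload_bytes(2_000, 1_000_000, 5)
    print(f"centralized raw-data upload: {raw / 1e9:.1f} GB")  # 10.0 GB
```

As the example numbers suggest, federated training does not always move fewer bytes than centralizing the data; what changes is the kind of data that crosses the network: model parameters and updates rather than raw records.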

OpenFL builds on a collaboration between Intel and the Bakas lab at the University of Pennsylvania (UPenn) to develop the Federated Tumor Segmentation (FeTS) platform (grant award number: U01-CA242871).

The grant for FeTS was awarded by the Informatics Technology for Cancer Research (ITCR) program of the National Cancer Institute (NCI) of the National Institutes of Health (NIH) to Dr. Spyridon Bakas (Principal Investigator) when he was affiliated with the Center for Biomedical Image Computing and Analytics (CBICA) at UPenn. Dr. Bakas now heads the Division of Computational Pathology at Indiana University (IU).

FeTS is a real-world medical federated learning platform with international collaborators. The original OpenFederatedLearning project and OpenFL are designed to serve as the backend for the FeTS platform, and OpenFL developers and researchers continue to work closely with IU on the FeTS project. One example is FeTS-AI/Front-End, which integrates the group’s medical AI expertise with the OpenFL framework to create a federated learning solution for medical imaging.

Although initially developed for use in medical imaging, OpenFL is designed to be agnostic to the use case, the industry, and the machine learning framework.

For more information, here is a list of relevant publications.

Installation

Install via PyPI (latest stable release):

pip install -U openfl

Or via conda:

conda install conda-forge::openfl

For more installation options, check out the installation guide.
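After either install command, one way to confirm which OpenFL release is active in the current environment is to read the installed distribution metadata with the standard library's importlib.metadata. This is a generic Python packaging check, not an OpenFL command, and the helper name installed_version is hypothetical.

```python
"""Report whether OpenFL is installed and which version (generic packaging check)."""
from importlib.metadata import PackageNotFoundError, version
from typing import Optional


def installed_version(dist_name: str = "openfl") -> Optional[str]:
    """Return the installed version string of `dist_name`, or None if it is absent."""
    try:
        return version(dist_name)
    except PackageNotFoundError:
        return None


if __name__ == "__main__":
    found = installed_version()
    print(f"openfl {found}" if found else "openfl is not installed in this environment")
```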

Features

Ways to set up an FL experiment

OpenFL supports two ways to set up a Federated Learning experiment:

- TaskRunner API: This API uses short-lived components, such as the Aggregator and the Collaborator, that terminate at the end of an FL experiment. TaskRunner supports mTLS-based secure communication channels and TEE-based confidential computing environments.

- Workflow API: This API allows for experiments beyond the traditional horizontal federated learning paradigm using a Pythonic interface. It allows an experiment to be simulated locally and then seamlessly scaled to a federated setting by switching from a local runtime to a distributed, federated runtime.

  Note: This is an experimental capability.
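The "simulate locally, then switch runtimes" idea behind the Workflow API can be illustrated with a toy sketch. Every name here (AveragingFlow, Runtime, InProcessRuntime) is hypothetical and is not part of OpenFL's Workflow API; the sketch only assumes that a flow is written once and that a runtime object decides where its steps execute.

```python
"""Toy illustration of 'write the flow once, swap the runtime'.

All names are hypothetical; they are not OpenFL's Workflow API classes.
"""
from dataclasses import dataclass
from typing import Dict, List, Protocol


@dataclass
class AveragingFlow:
    """A two-step flow: each collaborator trains locally, then the aggregator joins."""
    model: float = 0.0  # toy scalar "model"

    def local_train(self, private_target: float) -> float:
        # Toy local update: move halfway toward a value only this site knows.
        return self.model + 0.5 * (private_target - self.model)

    def join(self, updates: List[float]) -> None:
        # Aggregator step: plain mean of the returned models.
        self.model = sum(updates) / len(updates)


class Runtime(Protocol):
    def run(self, flow: AveragingFlow, rounds: int) -> float: ...


class InProcessRuntime:
    """Simulates all collaborators sequentially inside one Python process."""

    def __init__(self, private_data: Dict[str, float]):
        # In a distributed runtime, each site would hold only its own entry.
        self.private_data = private_data

    def run(self, flow: AveragingFlow, rounds: int) -> float:
        for _ in range(rounds):
            updates = [flow.local_train(value) for value in self.private_data.values()]
            flow.join(updates)
        return flow.model


if __name__ == "__main__":
    runtime: Runtime = InProcessRuntime({"site_a": 1.0, "site_b": 3.0})
    print(runtime.run(AveragingFlow(), rounds=2))  # 1.5
```

A distributed runtime would implement the same run signature but dispatch local_train to remote collaborators, so the flow definition itself would not need to change.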

Framework Compatibility

OpenFL is backend-agnostic. It comes with support for popular NumPy-based ML frameworks such as TensorFlow, PyTorch, and JAX, which must be installed separately. Users may extend the list of supported backends if needed.
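Being backend-agnostic suggests that aggregation code can operate on plain NumPy arrays, whichever framework trained the model. A minimal sketch of that idea, assuming a hypothetical adapter interface that is not OpenFL's actual extension API: each backend exports and imports named NumPy arrays, and helper functions pack them into a single vector for transport.

```python
"""Framework-agnostic weight exchange built on NumPy (illustrative sketch).

BackendAdapter, NumpyLinearModel, flatten, and unflatten are hypothetical names.
"""
from abc import ABC, abstractmethod
from typing import Dict, List, Tuple

import numpy as np

TensorDict = Dict[str, np.ndarray]
Layout = List[Tuple[str, tuple]]


class BackendAdapter(ABC):
    """What a new backend would need to provide: weights in and out as NumPy arrays."""

    @abstractmethod
    def export_weights(self) -> TensorDict: ...

    @abstractmethod
    def import_weights(self, weights: TensorDict) -> None: ...


class NumpyLinearModel(BackendAdapter):
    """Pure-NumPy model; a PyTorch adapter might convert via tensor.detach().cpu().numpy()."""

    def __init__(self, n_features: int):
        self.w = np.zeros(n_features)
        self.b = np.zeros(1)

    def export_weights(self) -> TensorDict:
        # Return copies so callers cannot mutate the model's internal state.
        return {"w": self.w.copy(), "b": self.b.copy()}

    def import_weights(self, weights: TensorDict) -> None:
        self.w = np.array(weights["w"], dtype=float)
        self.b = np.array(weights["b"], dtype=float)


def flatten(weights: TensorDict) -> Tuple[np.ndarray, Layout]:
    """Pack named arrays into one vector plus the layout needed to unpack it."""
    layout = [(name, arr.shape) for name, arr in sorted(weights.items())]
    vector = np.concatenate([weights[name].ravel() for name, _ in layout])
    return vector, layout


def unflatten(vector: np.ndarray, layout: Layout) -> TensorDict:
    """Inverse of flatten: slice the vector back into named, shaped arrays."""
    out, offset = {}, 0
    for name, shape in layout:
        size = int(np.prod(shape))
        out[name] = vector[offset:offset + size].reshape(shape)
        offset += size
    return out
```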

Aggregation Algorithms

OpenFL supports popular aggregation algorithms out of the box, with more algorithms coming soon.

| Algorithm | Reference | PyTorch backend | TensorFlow backend | NumPy backend |
|---|---|---|---|---|
| FedAvg | McMahan et al., 2017 | yes | yes | yes |
| FedOpt | Reddi et al., 2020 | yes | yes | yes |
| FedProx | Li et al., 2020 | yes | yes | - |
| FedCurv | Shoham et al., 2019 | yes | - | - |
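The core update rules behind three of the listed algorithms can be sketched in NumPy. This is a simplified illustration, not OpenFL's implementation. FedAvg weights each collaborator by an (assumed reported) local sample count. FedProx is shown as the extra gradient term mu * (w - w_global) that its proximal penalty (mu/2) * ||w - w_global||^2 adds to each local step. FedOpt is shown through its FedAdam variant, which treats the averaged client change as a pseudo-gradient for a server-side Adam-style step without bias correction. Hyperparameter defaults are placeholders, and FedCurv is omitted.

```python
"""Simplified update rules for FedAvg, FedProx, and FedOpt (FedAdam variant)."""
from typing import Dict, List, Tuple

import numpy as np

Weights = Dict[str, np.ndarray]


def fedavg(updates: List[Tuple[Weights, int]]) -> Weights:
    """FedAvg: average collaborator models, weighted by local sample counts."""
    total = sum(n for _, n in updates)
    keys = updates[0][0].keys()
    return {k: sum(w[k] * (n / total) for w, n in updates) for k in keys}


def fedprox_local_grad(grad: np.ndarray, w_local: np.ndarray,
                       w_global: np.ndarray, mu: float) -> np.ndarray:
    """FedProx: gradient of local loss + (mu / 2) * ||w_local - w_global||^2."""
    return grad + mu * (w_local - w_global)


class FedAdam:
    """FedOpt with an Adam-style server optimizer (no bias correction)."""

    def __init__(self, lr: float = 0.1, beta1: float = 0.9,
                 beta2: float = 0.99, tau: float = 1e-3):
        self.lr, self.beta1, self.beta2, self.tau = lr, beta1, beta2, tau
        self.m = None  # first-moment estimate of the pseudo-gradient
        self.v = None  # second-moment estimate, initialised to tau**2

    def step(self, global_w: np.ndarray, averaged_w: np.ndarray) -> np.ndarray:
        delta = averaged_w - global_w  # pseudo-gradient: mean client change
        if self.m is None:
            self.m = np.zeros_like(global_w, dtype=float)
            self.v = np.full_like(global_w, self.tau ** 2, dtype=float)
        self.m = self.beta1 * self.m + (1 - self.beta1) * delta
        self.v = self.beta2 * self.v + (1 - self.beta2) * delta ** 2
        return global_w + self.lr * self.m / (np.sqrt(self.v) + self.tau)


if __name__ == "__main__":
    # Two hypothetical collaborators holding 1 and 3 samples respectively.
    round_updates = [({"w": np.array([1.0, 2.0])}, 1), ({"w": np.array([3.0, 4.0])}, 3)]
    print(fedavg(round_updates)["w"])  # [2.5 3.5]
```

FedAvg and FedAdam run on the aggregator, while the FedProx term is applied inside each collaborator's local training loop.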

Contributing

We welcome contributions! Please refer to the contributing guidelines.

The OpenFL community is expanding, and we encourage you to join us. Connect with other enthusiasts, share knowledge, and contribute to the advancement of federated learning by joining our Slack channel.

Stay updated by subscribing to the OpenFL mailing list: openfl-announce@lists.lfaidata.foundation.

License

This project is licensed under the Apache License, Version 2.0. By contributing to the project, you agree to the license and copyright terms therein and release your contribution under these terms.

Citation

@article{openfl_citation,
  author={Foley, Patrick and Sheller, Micah J and Edwards, Brandon and Pati, Sarthak and Riviera, Walter and Sharma, Mansi and Moorthy, Prakash Narayana and Wang, Shi-han and Martin, Jason and Mirhaji, Parsa and Shah, Prashant and Bakas, Spyridon},
  title={OpenFL: the open federated learning library},
  journal={Physics in Medicine \& Biology},
  url={http://iopscience.iop.org/article/10.1088/1361-6560/ac97d9},
  year={2022},
  doi={10.1088/1361-6560/ac97d9},
  publisher={IOP Publishing}
}


Download files

- Source distribution: openfl-1.9.tar.gz

- Built distribution: openfl-1.9-py3-none-any.whl

File details

Details for the file openfl-1.9.tar.gz.

File metadata

- Download URL: openfl-1.9.tar.gz

- Upload date:

- Size: 4.8 MB

- Tags: Source

- Uploaded using Trusted Publishing? No

- Uploaded via: twine/6.1.0 CPython/3.11.12

File hashes

| Algorithm | Hash digest |
|---|---|
| SHA256 | 012658aff8142afcd4fcef661c628f08369c3e97c9be084f71860ccdfb888059 |
| MD5 | cc747facfbaf81f8dca925e838c2fa09 |
| BLAKE2b-256 | f226230d6d112abce73cd2f0fdf20e18457d93e230ddd54f52f68a97eb7e3c81 |

File details

Details for the file openfl-1.9-py3-none-any.whl.

File metadata

- Download URL: openfl-1.9-py3-none-any.whl

- Upload date:

- Size: 5.1 MB

- Tags: Python 3

- Uploaded using Trusted Publishing? No

- Uploaded via: twine/6.1.0 CPython/3.11.12

File hashes

| Algorithm | Hash digest |
|---|---|
| SHA256 | 1bc858790c84166b5c8b9d05ce22cda13323023c58b920488576033669f5a77d |
| MD5 | 1db97292042048fc3f93602f62f4e21f |
| BLAKE2b-256 | 84f79994f28b64848fb2428ff2493995fc40ad19a730e27a38783587eac0ca4a |
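To check a manually downloaded file against the SHA256 digests listed for the two OpenFL 1.9 files, a short standard-library sketch is enough. The helper names sha256_of and verify are hypothetical; the digests are copied from the file details on this page.

```python
"""Verify a downloaded OpenFL 1.9 file against its published SHA256 digest."""
import hashlib
import sys
from pathlib import Path

# SHA256 digests copied from the file details on this page.
EXPECTED_SHA256 = {
    "openfl-1.9.tar.gz": "012658aff8142afcd4fcef661c628f08369c3e97c9be084f71860ccdfb888059",
    "openfl-1.9-py3-none-any.whl": "1bc858790c84166b5c8b9d05ce22cda13323023c58b920488576033669f5a77d",
}


def sha256_of(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hash the file in chunks so large archives need not fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as fh:
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify(path: Path) -> bool:
    """True only if the file name is known and its digest matches."""
    expected = EXPECTED_SHA256.get(path.name)
    return expected is not None and sha256_of(path) == expected


if __name__ == "__main__":
    for name in sys.argv[1:]:
        p = Path(name)
        print(f"{p.name}: {'OK' if verify(p) else 'MISMATCH or unknown file'}")
```

For installs through pip, the same digest can instead be pinned in a requirements file (for example, openfl==1.9 --hash=sha256:<digest>) and enforced with pip install --require-hashes -r requirements.txt; in that mode pip expects hashes for every requirement, including dependencies.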