Prompt-based automatic annotation

pbaa : Prompt-Based Automatic Annotation

An easy-to-use inference implementation of Grounded-SAM for prompt-based automatic annotation.

Installation

Docker (Recommended)

git clone https://github.com/dh031200/pbaa.git

docker build docker -t pbaa:latest

docker run --gpus all -it --ipc=host -v `pwd`:/workspace -p 7860:7860 pbaa:latest

Without docker

The code requires Python >= 3.8 and CUDA 11.7.

pip install pbaa

Usage

Options

Usage: pbaa [OPTIONS]

Options:

--version Show the version and exit.

-s, --src TEXT Source image or directory path

-p, --prompt <TEXT TEXT>... Space-separated pair of prompt and target class. (Multi)

-b, --box_threshold FLOAT Threshold for Object Detection (default: 0.25)

-n, --nms_threshold FLOAT Threshold for NMS (default: 0.8)

-o, --output_dir TEXT Path to result data (default: 'outputs')

-g, --gradio Launch gradio app

-h, --help Show this message and exit.
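To make the two thresholds concrete, here is a minimal sketch of how a box threshold and an NMS threshold are commonly combined: low-confidence boxes are dropped first, then greedy non-maximum suppression removes boxes that overlap a higher-scoring box too much. This illustrates the general technique only and is not taken from pbaa's source. The function names and the `(x1, y1, x2, y2)` box format are assumptions.

```python
from typing import List, Sequence, Tuple

# Assumed box format: (x1, y1, x2, y2) in pixels.
Box = Tuple[float, float, float, float]


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def filter_and_nms(
    boxes: Sequence[Box],
    scores: Sequence[float],
    box_threshold: float = 0.25,
    nms_threshold: float = 0.8,
) -> List[int]:
    """Return indices of the boxes that survive both thresholds."""
    # Step 1: drop detections whose confidence is below the box threshold.
    candidates = [i for i, s in enumerate(scores) if s >= box_threshold]
    # Step 2: greedy NMS, visiting boxes from highest to lowest score.
    candidates.sort(key=lambda i: scores[i], reverse=True)
    kept: List[int] = []
    for i in candidates:
        # Keep a box only if it does not overlap any kept box too strongly.
        if all(iou(boxes[i], boxes[j]) <= nms_threshold for j in kept):
            kept.append(i)
    return kept


boxes = [(0, 0, 10, 10), (0, 0, 10, 9), (20, 20, 30, 30), (0, 0, 5, 5)]
scores = [0.9, 0.8, 0.6, 0.1]
print(filter_and_nms(boxes, scores))
```

In this sketch, a lower `nms_threshold` suppresses more overlapping boxes, while a higher `box_threshold` discards more low-confidence detections.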

CLI

# pbaa -s <Source> -p <prompt> <class> -p <prompt> <class> ...

pbaa -s source_image.jpg -p "black dog" dog

pbaa -s source_image.jpg -p "black dog" dog -p "white cat" cat
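When the prompt/class pairs already live in a Python dict, the command line can be assembled programmatically. The sketch below uses only the `-s` and `-p` options listed above. The helper name `build_pbaa_command` is hypothetical and not part of pbaa.

```python
import shlex
from typing import Dict, List


def build_pbaa_command(src: str, prompts: Dict[str, str]) -> List[str]:
    """Build an argv list for the pbaa CLI from a prompt -> class mapping."""
    argv = ["pbaa", "-s", src]
    for prompt, cls in prompts.items():
        # Each pair becomes its own "-p <prompt> <class>" group.
        argv += ["-p", prompt, cls]
    return argv


argv = build_pbaa_command(
    "source_image.jpg", {"black dog": "dog", "white cat": "cat"}
)
# shlex.join quotes multi-word prompts so the line is safe to paste into a shell.
print(shlex.join(argv))
```

Passing the list to `subprocess.run(argv, check=True)` would run the command without going through a shell, assuming `pbaa` is installed and on `PATH`.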

Python

from pbaa import PBAA

annotator = PBAA()

# inference(<Source path>, <prompt:class dict>, box_threshold=0.25, nms_threshold=0.8, save=None, output_dir="outputs")

annotator("path/to/source_image.jpg", {"black dog": "dog", "white cat": "cat"})

Gradio

Run the Gradio demo with a single command:

pbaa -g

Output

Launch gradio app

Running on local URL: http://0.0.0.0:7860

You can now access the Gradio demo in your browser at localhost:7860.

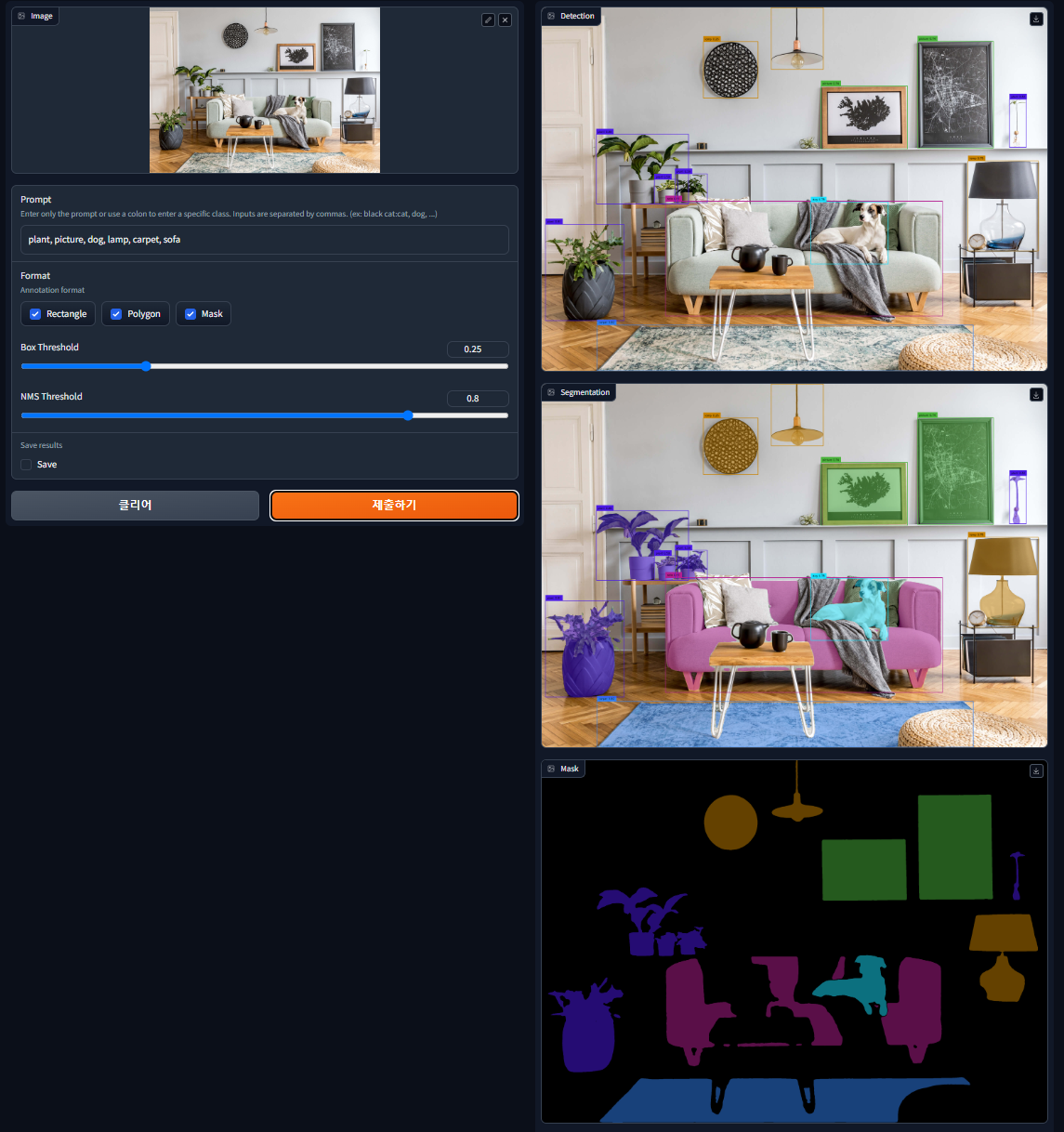

Demo

# Source : assets/demo9.jpg

# prompts : {"plant" : "plant", "picture" : "picture", "dog": "dog", "lamp" : "lamp", "carpet" : "carpet", "sofa" : "sofa"}

pbaa -s assets/demo9.jpg -p plant plant -p picture picture -p dog dog -p lamp lamp -p carpet carpet -p sofa sofa

Result images (not reproduced here): Origin | Detection | Segmentation

Result data

JSON structure

filename

prompt

index

├ cls : class name

├ conf : confidence score

├ box : bounding box coordinates

└ poly : polygon coordinates
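The fields above can be consumed with a small helper once the result JSON has been loaded. The exact nesting of `filename`, `prompt`, and `index` is not spelled out here, so this sketch avoids assuming it: it walks the loaded data recursively and yields every object that carries the four fields `cls`, `conf`, `box`, and `poly`. The helper names and the sample data are hypothetical and for illustration only.

```python
from typing import Any, Dict, Iterator, List

RECORD_KEYS = {"cls", "conf", "box", "poly"}


def iter_records(node: Any) -> Iterator[Dict[str, Any]]:
    """Yield every dict that contains all four annotation fields."""
    if isinstance(node, dict):
        if RECORD_KEYS.issubset(node):
            yield node
            return
        for value in node.values():
            yield from iter_records(value)
    elif isinstance(node, list):
        for item in node:
            yield from iter_records(item)


def filter_by_conf(result: Any, min_conf: float) -> List[Dict[str, Any]]:
    """Keep only the records whose confidence is at least min_conf."""
    return [r for r in iter_records(result) if r["conf"] >= min_conf]


# Hypothetical sample; real files come from json.load() on a pbaa result file.
sample = {
    "demo.jpg": {
        "dog": {
            "0": {"cls": "dog", "conf": 0.91, "box": [10, 20, 110, 220],
                  "poly": [[10, 20], [110, 20], [110, 220]]},
            "1": {"cls": "dog", "conf": 0.30, "box": [5, 5, 50, 50],
                  "poly": [[5, 5], [50, 5], [50, 50]]},
        }
    }
}

for record in filter_by_conf(sample, 0.5):
    print(record["cls"], record["conf"], record["box"])
```

Because the walker matches on field names instead of position, it should keep working if the nesting differs from the sample, as long as each annotation keeps those four keys.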

License

pbaa is distributed under the terms of the Apache-2.0 license.

Acknowledgements

Grounded-Segment-Anything : https://github.com/IDEA-Research/Grounded-Segment-Anything

Grounding DINO : https://github.com/IDEA-Research/GroundingDINO

Segment-anything : https://github.com/facebookresearch/segment-anything

Download files

File details

Details for the file pbaa-1.0.4.tar.gz.

File metadata

- Download URL: pbaa-1.0.4.tar.gz

- Upload date:

- Size: 9.0 MB

- Tags: Source

- Uploaded using Trusted Publishing? No

- Uploaded via: twine/4.0.2 CPython/3.10.11

File hashes

| Algorithm | Hash digest |
|---|---|
| SHA256 | d5f599de03f5c3a7603f676af431264920e2df08fee3fc4927a15e2de60f5366 |
| MD5 | d71ae386338f07fe0a0fb6b536e1d0ca |
| BLAKE2b-256 | 920131d452675f8a7fc35238554a1bcc706c87644c516178711f39a8191e702d |

File details

Details for the file pbaa-1.0.4-py3-none-any.whl.

File metadata

- Download URL: pbaa-1.0.4-py3-none-any.whl

- Upload date:

- Size: 15.2 kB

- Tags: Python 3

- Uploaded using Trusted Publishing? No

- Uploaded via: twine/4.0.2 CPython/3.10.11

File hashes

| Algorithm | Hash digest |
|---|---|
| SHA256 | d3e528fa2d2dee15a350dfde6c2c0feda6f1755ec4d9bdbd80f052b57aa19315 |
| MD5 | d483a88be670a7ca9ff8c4886035dd37 |
| BLAKE2b-256 | a5a759955ecd6545dccb7277c76015bdddd8bc2dbecc1083f325bdbe594c4b91 |