To connect classic robotics with modern learning methods.

Project description

PyPose: A Library for Robot Learning with Physics-based Optimization

Deep learning has had remarkable success in robotic perception, but its data-centric nature suffers when it comes to generalizing to ever-changing environments. By contrast, physics-based optimization generalizes better, but it does not perform as well in complicated tasks due to the lack of high-level semantic information and the reliance on manual parametric tuning. To take advantage of these two complementary worlds, we present PyPose: a robotics-oriented, PyTorch-based library that combines deep perceptual models with physics-based optimization techniques. Our design goal for PyPose is to make it user-friendly, efficient, and interpretable with a tidy and well-organized architecture. Using an imperative style interface, it can be easily integrated into real-world robotic applications.

Current Features

LieTensor

Modules

- System
- IMUPreintegration
- ......

Second-order Optimizers

- GaussNewton
- LevenbergMarquardt
- ......

Want more features? Create an issue here to request them.

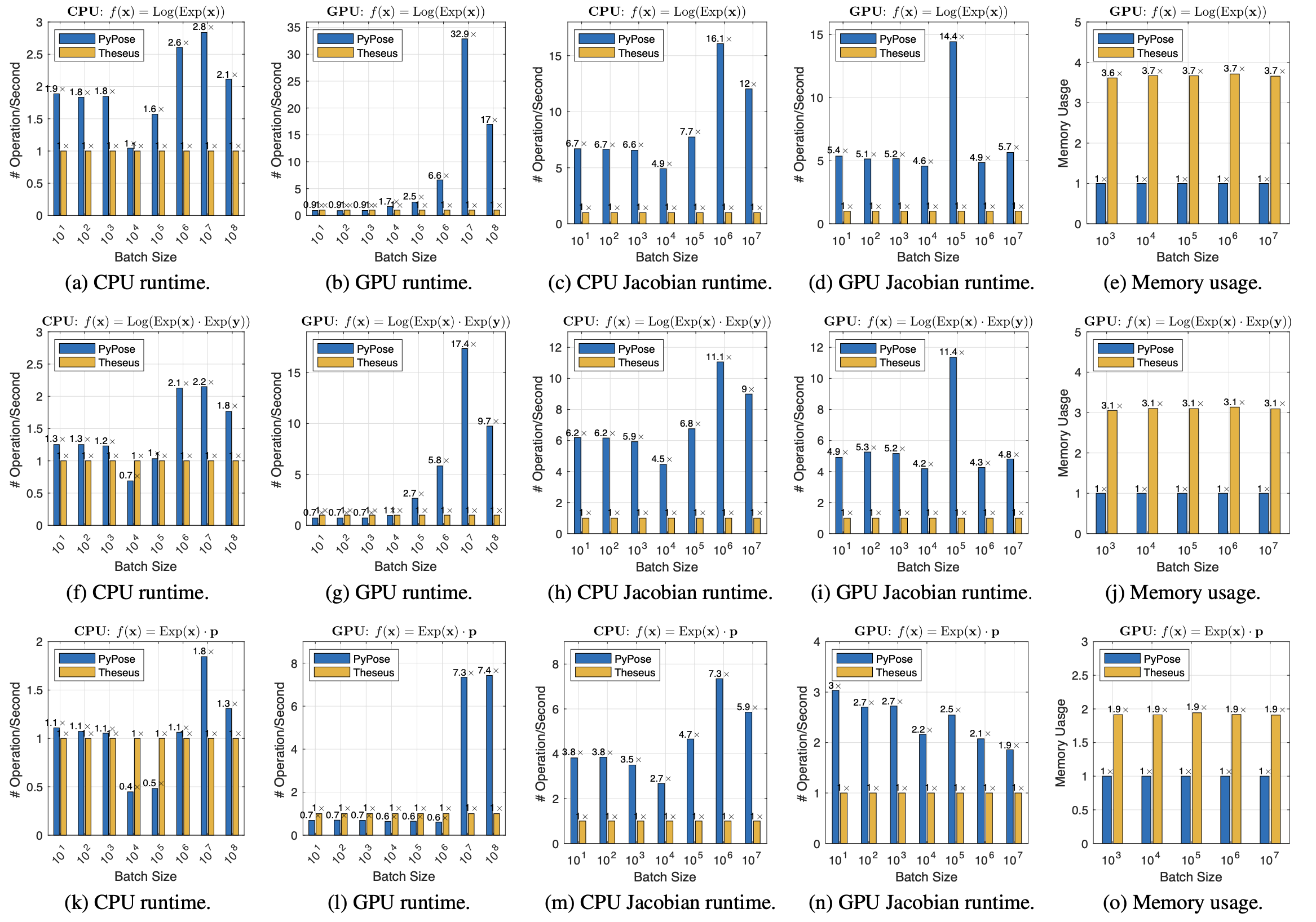

PyPose is highly efficient and supports parallel computation of Jacobians for Lie groups and Lie algebras. See the following comparison.

Efficiency and memory comparison of batched Lie group operations (we take Theseus performance as 1×).

For more details on the efficiency comparison, see our paper on PyPose.

Getting Started

Installation

Install from PyPI

pip install pypose

Install from source

- Requirement:

On Ubuntu, macOS, or Windows, install PyTorch, then run:

pip install -r requirements/main.txt

- Install locally:

git clone https://github.com/pypose/pypose.git

cd pypose && python setup.py develop

- Run tests

pytest

For contributors

- Make sure the above installation is correct.

- Go to CONTRIBUTING.md

Examples

- The following code sample shows how to rotate random points and compute the gradient of batched rotation.

>>> import torch, pypose as pp
>>> # A random so(3) LieTensor
>>> r = pp.randn_so3(2, requires_grad=True)
so3Type LieTensor:
tensor([[ 0.1606,  0.0232, -1.5516],
        [-0.0807, -0.7184, -0.1102]], requires_grad=True)
>>> R = r.Exp() # Equivalent to: R = pp.Exp(r)
SO3Type LieTensor:
tensor([[ 0.0724,  0.0104, -0.6995,  0.7109],
        [-0.0395, -0.3513, -0.0539,  0.9339]], grad_fn=<AliasBackward0>)
>>> p = R @ torch.randn(3) # Rotate random point
tensor([[ 0.8045, -0.8555,  0.5260],
        [ 0.3502,  0.8337,  0.9154]], grad_fn=<ViewBackward0>)
>>> p.sum().backward() # Compute gradient
>>> r.grad # Print gradient
tensor([[-0.7920, -0.9510,  1.7110],
        [-0.2659,  0.5709, -0.3855]])
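
To make the numbers in the sample above easier to interpret, here is a minimal pure-Python sketch of the textbook so(3) exponential map and of rotating a point by the resulting quaternion. It is not PyPose's implementation: the helper names `so3_exp` and `rotate` are hypothetical, and the sketch assumes that an SO3 LieTensor stores a unit quaternion in (x, y, z, w) order, which is consistent with the printed values.

```python
import math

def so3_exp(rotvec):
    """Map a rotation vector (axis * angle) to a unit quaternion (x, y, z, w).

    Hypothetical helper for illustration; assumes (x, y, z, w) ordering.
    """
    x, y, z = rotvec
    theta = math.sqrt(x * x + y * y + z * z)
    if theta < 1e-8:
        # Small-angle limit: sin(theta / 2) / theta -> 1 / 2
        scale = 0.5
    else:
        scale = math.sin(0.5 * theta) / theta
    return (x * scale, y * scale, z * scale, math.cos(0.5 * theta))

def rotate(quat, point):
    """Rotate a 3-D point by a unit quaternion (x, y, z, w)."""
    qx, qy, qz, qw = quat
    px, py, pz = point
    # t = 2 * (v x p), where v is the vector part of the quaternion
    tx = 2.0 * (qy * pz - qz * py)
    ty = 2.0 * (qz * px - qx * pz)
    tz = 2.0 * (qx * py - qy * px)
    # p' = p + w * t + v x t
    return (px + qw * tx + (qy * tz - qz * ty),
            py + qw * ty + (qz * tx - qx * tz),
            pz + qw * tz + (qx * ty - qy * tx))

# First row of the so(3) tensor printed in the sample above
quat = so3_exp((0.1606, 0.0232, -1.5516))
print([round(c, 4) for c in quat])
```

Because the inputs are copied from a four-decimal printout, the result agrees with the first row of the `SO3Type` output only to about three decimal places.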

- This example shows how to estimate the batched inverse of a transform with a second-order optimizer. Two usage options for a scheduler are provided, each of which can work independently.

>>> import torch, pypose as pp
>>> from torch import nn
>>> from pypose.optim import LM
>>> from pypose.optim.strategy import Constant
>>> from pypose.optim.scheduler import StopOnPlateau
>>> class InvNet(nn.Module):
...     def __init__(self, *dim):
...         super().__init__()
...         init = pp.randn_SE3(*dim)
...         self.pose = pp.Parameter(init)
...     def forward(self, input):
...         # Residual is zero when self.pose is the inverse of input
...         error = (self.pose @ input).Log()
...         return error.tensor()

>>> device = torch.device("cuda")
>>> input = pp.randn_SE3(2, 2, device=device)
>>> invnet = InvNet(2, 2).to(device)
>>> strategy = Constant(damping=1e-4)
>>> optimizer = LM(invnet, strategy=strategy)
>>> scheduler = StopOnPlateau(optimizer, steps=10, patience=3, decreasing=1e-3, verbose=True)
>>> # 1st option, full optimization
>>> scheduler.optimize(input=input)
>>> # 2nd option, step optimization
>>> while scheduler.continual:
...     loss = optimizer.step(input)
...     scheduler.step(loss)
>>> # Note: remove one of the above options for usage!
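
The step-by-step option above can be read as a damped Gauss-Newton loop with a plateau-based stopping rule. The following pure-Python sketch illustrates that idea on a scalar problem. It is a generic textbook form, not PyPose's implementation: the function `lm_scalar` is hypothetical, its parameters only mirror the names used above, and it counts a step as stalled when the absolute loss reduction falls below `decreasing`, which is a simplifying assumption.

```python
def lm_scalar(residual, jacobian, x0, damping=1e-4, steps=10,
              patience=3, decreasing=1e-3):
    """Minimise residual(x)**2 with a constant-damping LM update (1-D sketch)."""
    x = x0
    loss = residual(x) ** 2
    history = [loss]
    stalled = 0
    for _ in range(steps):
        r, j = residual(x), jacobian(x)
        # Damped normal equation in 1-D: (j * j + damping) * delta = -j * r
        delta = -j * r / (j * j + damping)
        x = x + delta
        new_loss = residual(x) ** 2
        # Count consecutive steps whose loss reduction is below the threshold
        stalled = stalled + 1 if loss - new_loss < decreasing else 0
        loss = new_loss
        history.append(loss)
        if stalled >= patience:
            break  # plateau reached: stop before exhausting `steps`
    return x, history

# Solve x**2 - 2 = 0 starting from x = 1
root, history = lm_scalar(lambda x: x * x - 2.0, lambda x: 2.0 * x, x0=1.0)
print(root, len(history) - 1)
```

With a constant damping term, the update stays well defined even when the Jacobian is close to zero, at the cost of slightly shorter steps than plain Gauss-Newton.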

For more usage, see Documentation. For more applications, see Examples.

Citing PyPose

If you use PyPose, please cite the paper below. You may also download it here.

@article{wang2022pypose,
  title = {{PyPose}: A Library for Robot Learning with Physics-based Optimization},
  author = {Wang, Chen and Gao, Dasong and Xu, Kuan and Geng, Junyi and Hu, Yaoyu and Qiu, Yuheng and Li, Bowen and Yang, Fan and Moon, Brady and Pandey, Abhinav and Aryan and Xu, Jiahe and Wu, Tianhao and He, Haonan and Huang, Daning and Ren, Zhongqiang and Zhao, Shibo and Fu, Taimeng and Reddy, Pranay and Lin, Xiao and Wang, Wenshan and Shi, Jingnan and Talak, Rajat and Cao, Kun and Du, Yi and Wang, Han and Yu, Huai and Wang, Shanzhao and Chen, Siyu and Kashyap, Ananth and Bandaru, Rohan and Dantu, Karthik and Wu, Jiajun and Xie, Lihua and Carlone, Luca and Hutter, Marco and Scherer, Sebastian},
  journal = {arXiv preprint arXiv:2209.15428},
  year = {2022}
}


File details

Details for the file pypose-0.2.2.tar.gz.

File metadata

- Download URL: pypose-0.2.2.tar.gz

- Upload date:

- Size: 88.2 kB

- Tags: Source

- Uploaded using Trusted Publishing? No

- Uploaded via: twine/4.0.2 CPython/3.9.13

File hashes

| Algorithm | Hash digest |
|---|---|
| SHA256 | 33aa02c2523c30f8dc02b0dc70756283e7872567864102d6acddd14eee096e8e |
| MD5 | 6a678b996a1dbbf33c2874f164fc77fc |
| BLAKE2b-256 | c61f913c61acaa6109f9d48b92d9d5395d68bf2f8bab06196fb1a3fc9bc8399f |

File details

Details for the file pypose-0.2.2-py3-none-any.whl.

File metadata

- Download URL: pypose-0.2.2-py3-none-any.whl

- Upload date:

- Size: 92.0 kB

- Tags: Python 3

- Uploaded using Trusted Publishing? No

- Uploaded via: twine/4.0.2 CPython/3.9.13

File hashes

| Algorithm | Hash digest |
|---|---|
| SHA256 | f6be340d5d4496e7483221337783f2cd34c6b89e5517dd665280b726a42ec354 |
| MD5 | 6462acc5ee3b17591bd301505abef688 |
| BLAKE2b-256 | 2b4828f57ba5be515dab5c708b09c4c068106a1d40ccc5498a6d5579b4b229ad |