A unified approach to explain the output of any machine learning model.


SHAP (SHapley Additive exPlanations) is a game theoretic approach to explain the output of any machine learning model. It connects optimal credit allocation with local explanations using the classic Shapley values from game theory and their related extensions (see papers for details and citations).
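The classic Shapley value behind this credit allocation can be computed by brute force for a very small game. The sketch below is illustrative only and is not how the package computes SHAP values: the toy game, the player names, and the helper `shapley_values` are all hypothetical. It averages each player's marginal contribution over all coalitions and shows that the credits add up to the total payoff.

```python
from itertools import combinations
from math import factorial


def shapley_values(players, value):
    """Exact Shapley values for a small cooperative game.

    `value` maps a frozenset of players to the payoff of that coalition.
    """
    n = len(players)
    phi = {}
    for i in players:
        others = [p for p in players if p != i]
        total = 0.0
        for size in range(n):
            # Shapley weight for coalitions of this size that exclude player i.
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = frozenset(subset)
                total += weight * (value(s | {i}) - value(s))
        phi[i] = total
    return phi


def toy_value(coalition):
    # Hypothetical game: "a" and "b" earn 10 each, plus a bonus of 6 when
    # both are present; "c" contributes nothing.
    payoff = 10.0 * len(coalition & {"a", "b"})
    if {"a", "b"} <= coalition:
        payoff += 6.0
    return payoff


phi = shapley_values(["a", "b", "c"], toy_value)
print(phi)
print("sum of credits:", sum(phi.values()))
```

The bonus is split evenly between "a" and "b", and "c" receives no credit. The enumeration grows exponentially with the number of players, which is why specialised algorithms are needed for real models.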

Install

SHAP can be installed from either PyPI or conda-forge:

pip install shap

or

conda install -c conda-forge shap

Tree ensemble example (XGBoost/LightGBM/CatBoost/scikit-learn/pyspark models)

While SHAP can explain the output of any machine learning model, we have developed a high-speed exact algorithm for tree ensemble methods (see our Nature MI paper). Fast C++ implementations are supported for XGBoost, LightGBM, CatBoost, scikit-learn and pyspark tree models:

import xgboost

import shap

# train an XGBoost model

X, y = shap.datasets.boston()

model = xgboost.XGBRegressor().fit(X, y)

# explain the model's predictions using SHAP

# (same syntax works for LightGBM, CatBoost, scikit-learn, transformers, Spark, etc.)

explainer = shap.Explainer(model)

shap_values = explainer(X)

# visualize the first prediction's explanation

shap.plots.waterfall(shap_values[0])

The above explanation shows features each contributing to push the model output from the base value (the average model output over the training dataset we passed) to the model output. Features pushing the prediction higher are shown in red, those pushing the prediction lower are in blue. Another way to visualize the same explanation is to use a force plot (these are introduced in our Nature BME paper):

# visualize the first prediction's explanation with a force plot

shap.plots.force(shap_values[0])

If we take many force plot explanations such as the one shown above, rotate them 90 degrees, and then stack them horizontally, we can see explanations for an entire dataset (in the notebook this plot is interactive):

# visualize all the training set predictions

shap.plots.force(shap_values)

To understand how a single feature affects the output of the model we can plot the SHAP value of that feature vs. the value of the feature for all the examples in a dataset. Since SHAP values represent a feature's responsibility for a change in the model output, the plot below represents the change in predicted house price as RM (the average number of rooms per house in an area) changes. Vertical dispersion at a single value of RM represents interaction effects with other features. To help reveal these interactions we can color by another feature. If we pass the whole explanation tensor to the color argument the scatter plot will pick the best feature to color by. In this case it picks RAD (index of accessibility to radial highways) since that highlights that the average number of rooms per house has less impact on home price for areas with a high RAD value.

# create a dependence scatter plot to show the effect of a single feature across the whole dataset

shap.plots.scatter(shap_values[:,"RM"], color=shap_values)

To get an overview of which features are most important for a model we can plot the SHAP values of every feature for every sample. The plot below sorts features by the sum of SHAP value magnitudes over all samples, and uses SHAP values to show the distribution of the impacts each feature has on the model output. The color represents the feature value (red high, blue low). This reveals for example that a high LSTAT (% lower status of the population) lowers the predicted home price.

# summarize the effects of all the features

shap.plots.beeswarm(shap_values)

We can also just take the mean absolute value of the SHAP values for each feature to get a standard bar plot (produces stacked bars for multi-class outputs):

shap.plots.bar(shap_values)

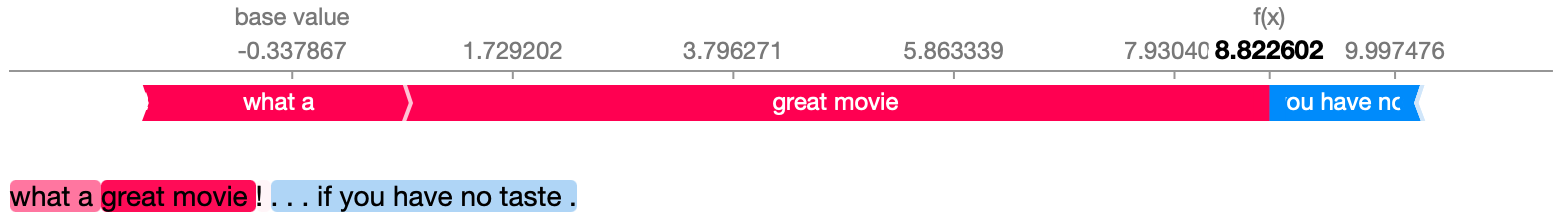
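The quantity behind the bar plot is simply the mean absolute SHAP value per feature. The sketch below computes that ranking by hand. The SHAP matrix is made-up illustrative data (not output from the model above), and `mean_abs_importance` is a hypothetical helper, not part of the shap API.

```python
# Hypothetical SHAP values: one row per sample, one column per feature.
feature_names = ["RM", "LSTAT", "RAD"]
shap_matrix = [
    [2.0, -3.0, 0.5],
    [-1.0, -4.0, 0.0],
    [3.0, 2.0, -0.5],
]


def mean_abs_importance(matrix, names):
    """Return (feature, mean |SHAP|) pairs sorted from most to least important."""
    n_samples = len(matrix)
    scores = {
        name: sum(abs(row[j]) for row in matrix) / n_samples
        for j, name in enumerate(names)
    }
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)


ranking = mean_abs_importance(shap_matrix, feature_names)
for name, score in ranking:
    print(f"{name:>6}: {score:.3f}")
```

Taking absolute values before averaging matters: a feature whose positive and negative contributions cancel out would otherwise look unimportant.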

Natural language example (transformers)

SHAP has specific support for natural language models like those in the Hugging Face transformers library. By adding coalitional rules to traditional Shapley values we can form games that explain large modern NLP models using very few function evaluations. Using this functionality is as simple as passing a supported transformers pipeline to SHAP:

import transformers

import shap

# load a transformers pipeline model

model = transformers.pipeline('sentiment-analysis', return_all_scores=True)

# explain the model on a sample input

explainer = shap.Explainer(model)

shap_values = explainer(["What a great movie! ...if you have no taste."])

# visualize the first prediction's explanation for the POSITIVE output class

shap.plots.text(shap_values[0, :, "POSITIVE"])

Deep learning example with DeepExplainer (TensorFlow/Keras models)

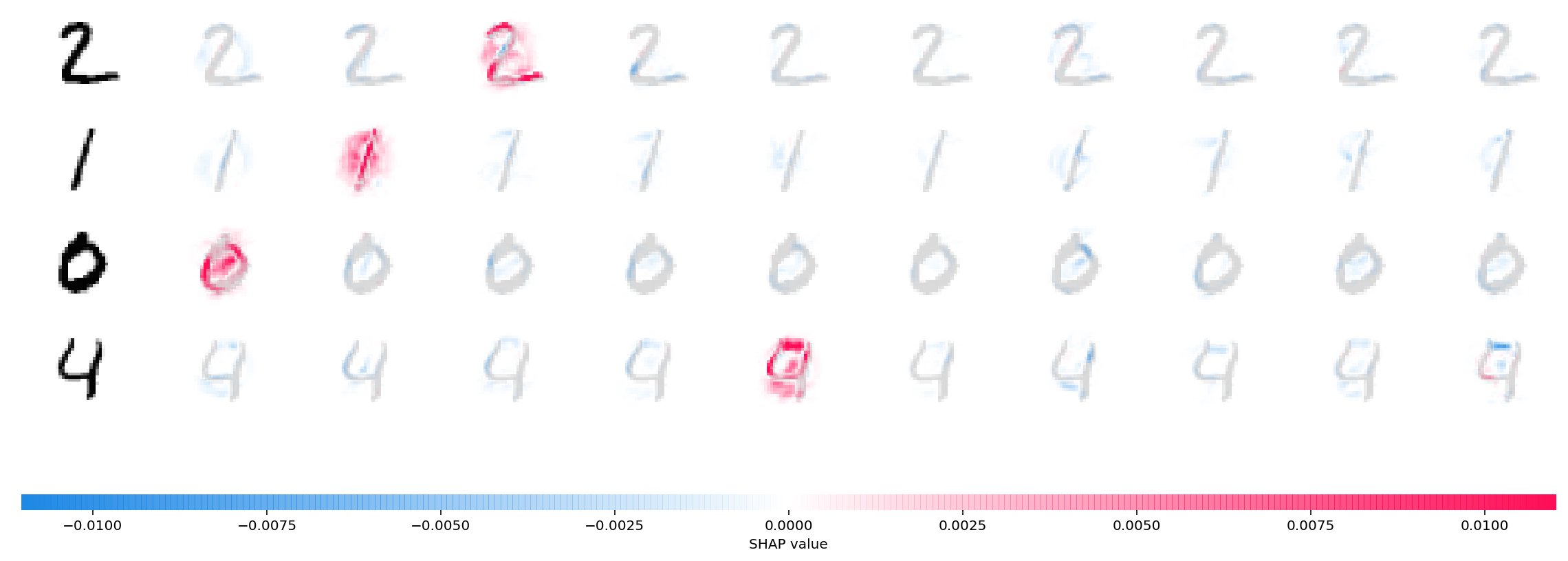

Deep SHAP is a high-speed approximation algorithm for SHAP values in deep learning models that builds on a connection with DeepLIFT described in the SHAP NIPS paper. The implementation here differs from the original DeepLIFT by using a distribution of background samples instead of a single reference value, and using Shapley equations to linearize components such as max, softmax, products, divisions, etc. Note that some of these enhancements have since been integrated into DeepLIFT as well. TensorFlow models and Keras models using the TensorFlow backend are supported (there is also preliminary support for PyTorch):

# ...include code from https://github.com/keras-team/keras/blob/master/examples/mnist_cnn.py

import shap

import numpy as np

# select a set of background examples to take an expectation over

background = x_train[np.random.choice(x_train.shape[0], 100, replace=False)]

# explain predictions of the model on four images

e = shap.DeepExplainer(model, background)

# ...or pass tensors directly

# e = shap.DeepExplainer((model.layers[0].input, model.layers[-1].output), background)

shap_values = e.shap_values(x_test[1:5])

# plot the feature attributions

shap.image_plot(shap_values, -x_test[1:5])

The plot above explains ten outputs (digits 0-9) for four different images. Red pixels increase the model's output while blue pixels decrease the output. The input images are shown on the left, and as nearly transparent grayscale backings behind each of the explanations. The sum of the SHAP values equals the difference between the expected model output (averaged over the background dataset) and the current model output. Note that for the 'zero' image the blank middle is important, while for the 'four' image the lack of a connection on top makes it a four instead of a nine.

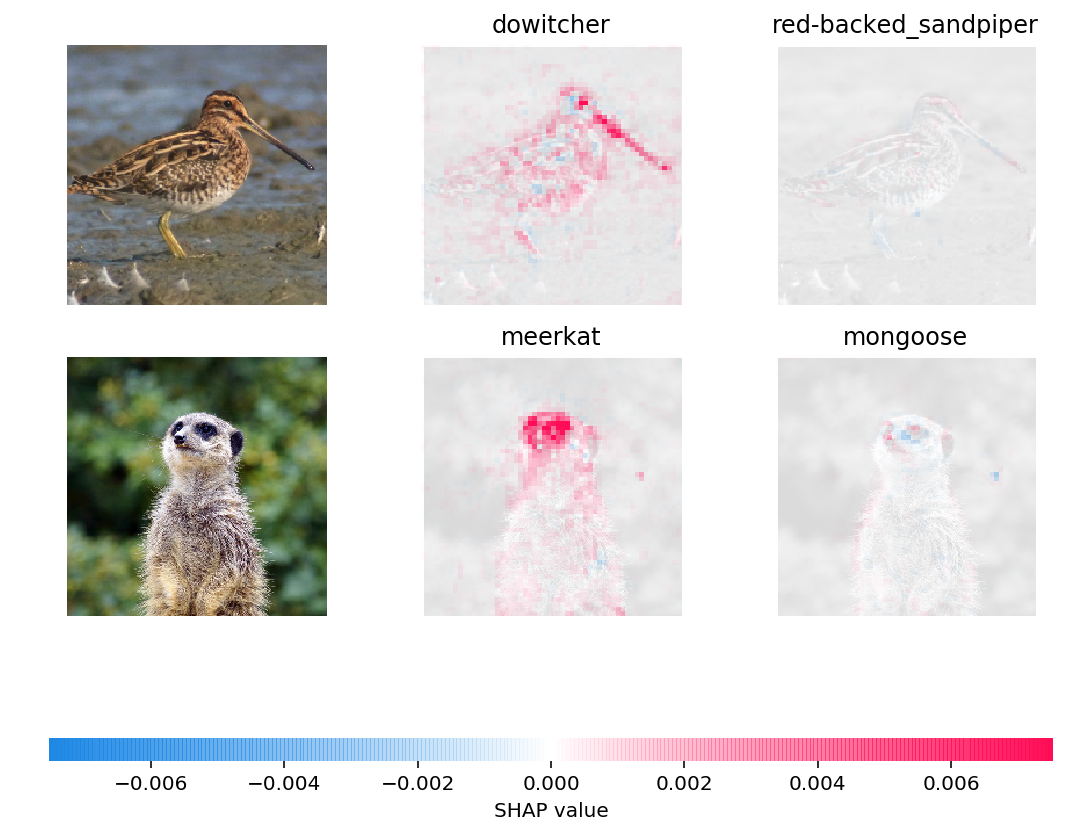
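The role of the background samples can be illustrated without a neural network. The sketch below is not Deep SHAP (it does no backpropagation and uses none of the DeepLIFT rules). It computes exact Shapley values against each background sample by brute force and averages them, which is the quantity Deep SHAP approximates. The function `model`, the helpers, and the data are hypothetical.

```python
from itertools import combinations
from math import factorial


def model(x):
    # Hypothetical stand-in for a network output, built from a max and a
    # product, two of the nonlinear components mentioned above.
    return max(x[0], x[1]) + x[1] * x[2]


def shapley_vs_reference(f, x, ref):
    """Exact Shapley values of f at x; 'missing' features take values from ref."""
    m = len(x)

    def v(subset):
        return f([x[j] if j in subset else ref[j] for j in range(m)])

    phi = [0.0] * m
    for i in range(m):
        others = [j for j in range(m) if j != i]
        for size in range(m):
            w = factorial(size) * factorial(m - size - 1) / factorial(m)
            for s in combinations(others, size):
                s = set(s)
                phi[i] += w * (v(s | {i}) - v(s))
    return phi


def shapley_vs_background(f, x, background):
    """Average the single-reference attributions over all background samples."""
    per_ref = [shapley_vs_reference(f, x, ref) for ref in background]
    return [sum(col) / len(background) for col in zip(*per_ref)]


background = [[0.0, 0.0, 0.0], [1.0, 2.0, 0.5], [3.0, 1.0, 1.0]]
x = [2.0, 4.0, 3.0]

phi = shapley_vs_background(model, x, background)
expected_value = sum(model(b) for b in background) / len(background)

print("attributions:", phi)
print("sum of attributions:", sum(phi))
print("f(x) - E[f]:", model(x) - expected_value)
```

The last two printed numbers agree, which is the same additivity property stated for the image explanations: the attributions sum to the gap between the model output and the expected output over the background set.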

Deep learning example with GradientExplainer (TensorFlow/Keras/PyTorch models)

Expected gradients combines ideas from Integrated Gradients, SHAP, and SmoothGrad into a single expected value equation. This allows an entire dataset to be used as the background distribution (as opposed to a single reference value) and allows local smoothing. If we approximate the model with a linear function between each background data sample and the current input to be explained, and we assume the input features are independent, then expected gradients will compute approximate SHAP values. The example below explains how the 7th intermediate layer of the VGG16 ImageNet model impacts the output probabilities.

from keras.applications.vgg16 import VGG16

from keras.applications.vgg16 import preprocess_input

import keras.backend as K

import numpy as np

import json

import shap

# load pre-trained model and choose two images to explain

model = VGG16(weights='imagenet', include_top=True)

X,y = shap.datasets.imagenet50()

to_explain = X[[39,41]]

# load the ImageNet class names

url = "https://s3.amazonaws.com/deep-learning-models/image-models/imagenet_class_index.json"

fname = shap.datasets.cache(url)

with open(fname) as f:
    class_names = json.load(f)

# explain how the input to the 7th layer of the model explains the top two classes

def map2layer(x, layer):
    feed_dict = dict(zip([model.layers[0].input], [preprocess_input(x.copy())]))
    return K.get_session().run(model.layers[layer].input, feed_dict)

e = shap.GradientExplainer(
    (model.layers[7].input, model.layers[-1].output),
    map2layer(X, 7),
    local_smoothing=0,  # std dev of smoothing noise
)

shap_values,indexes = e.shap_values(map2layer(to_explain, 7), ranked_outputs=2)

# get the names for the classes

index_names = np.vectorize(lambda x: class_names[str(x)][1])(indexes)

# plot the explanations

shap.image_plot(shap_values, to_explain, index_names)

Predictions for two input images are explained in the plot above. Red pixels represent positive SHAP values that increase the probability of the class, while blue pixels represent negative SHAP values that reduce the probability of the class. By using ranked_outputs=2 we explain only the two most likely classes for each input (this spares us from explaining all 1,000 classes).

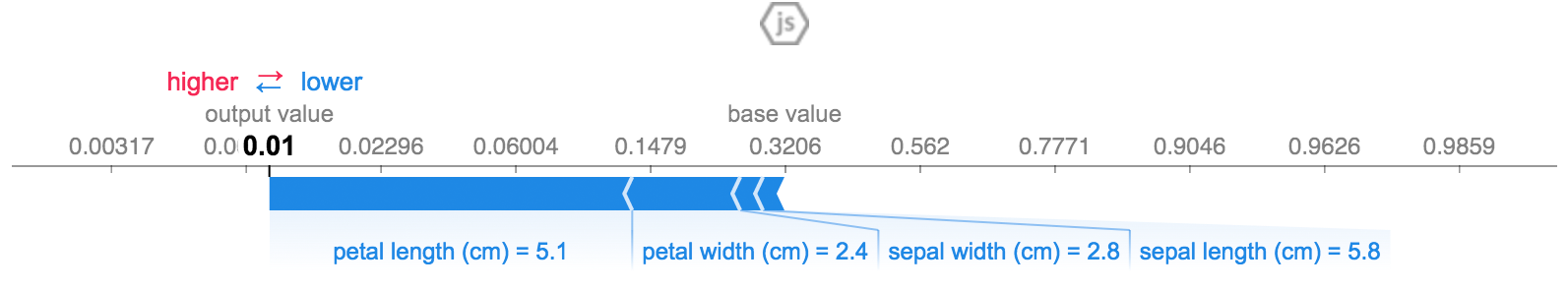
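The expected gradients idea from this section can be sketched on a toy function. This is a minimal illustration, not the GradientExplainer implementation: GradientExplainer draws random samples, whereas this sketch enumerates every background sample and uses a fixed grid of interpolation points. The function `f`, its gradient, and the data are hypothetical.

```python
def f(x):
    # Hypothetical differentiable model with one interaction term.
    return x[0] * x[1] + 2.0 * x[2]


def grad_f(x):
    # Analytic gradient of f with respect to each input.
    return [x[1], x[0], 2.0]


def expected_gradients(x, background, steps=50):
    """Average (x - ref) * gradient along the straight path from each ref to x."""
    m = len(x)
    attributions = [0.0] * m
    for ref in background:
        for k in range(steps):
            alpha = (k + 0.5) / steps  # midpoint rule on [0, 1]
            point = [r + alpha * (xi - r) for xi, r in zip(x, ref)]
            g = grad_f(point)
            for i in range(m):
                attributions[i] += (x[i] - ref[i]) * g[i]
    scale = len(background) * steps
    return [a / scale for a in attributions]


background = [[0.0, 0.0, 0.0], [1.0, -1.0, 2.0], [2.0, 1.0, 0.0]]
x = [3.0, 2.0, 1.0]

attributions = expected_gradients(x, background)
mean_output = sum(f(b) for b in background) / len(background)

print("attributions:", attributions)
print("sum of attributions:", sum(attributions))
print("f(x) - mean f(background):", f(x) - mean_output)
```

For this toy function the attributions sum to the difference between the output at `x` and the average output over the background, up to floating point error. With a real network the gradients would come from automatic differentiation and the sum would only match approximately.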

Model agnostic example with KernelExplainer (explains any function)

Kernel SHAP uses a specially-weighted local linear regression to estimate SHAP values for any model. Below is a simple example for explaining a multi-class SVM on the classic iris dataset.

import sklearn

import shap

from sklearn.model_selection import train_test_split

# print the JS visualization code to the notebook

shap.initjs()

# train a SVM classifier

X_train,X_test,Y_train,Y_test = train_test_split(*shap.datasets.iris(), test_size=0.2, random_state=0)

svm = sklearn.svm.SVC(kernel='rbf', probability=True)

svm.fit(X_train, Y_train)

# use Kernel SHAP to explain test set predictions

explainer = shap.KernelExplainer(svm.predict_proba, X_train, link="logit")

shap_values = explainer.shap_values(X_test, nsamples=100)

# plot the SHAP values for the Setosa output of the first instance

shap.force_plot(explainer.expected_value[0], shap_values[0][0,:], X_test.iloc[0,:], link="logit")

The above explanation shows four features each contributing to push the model output from the base value (the average model output over the training dataset we passed) towards zero. If there were any features pushing the class label higher they would be shown in red.

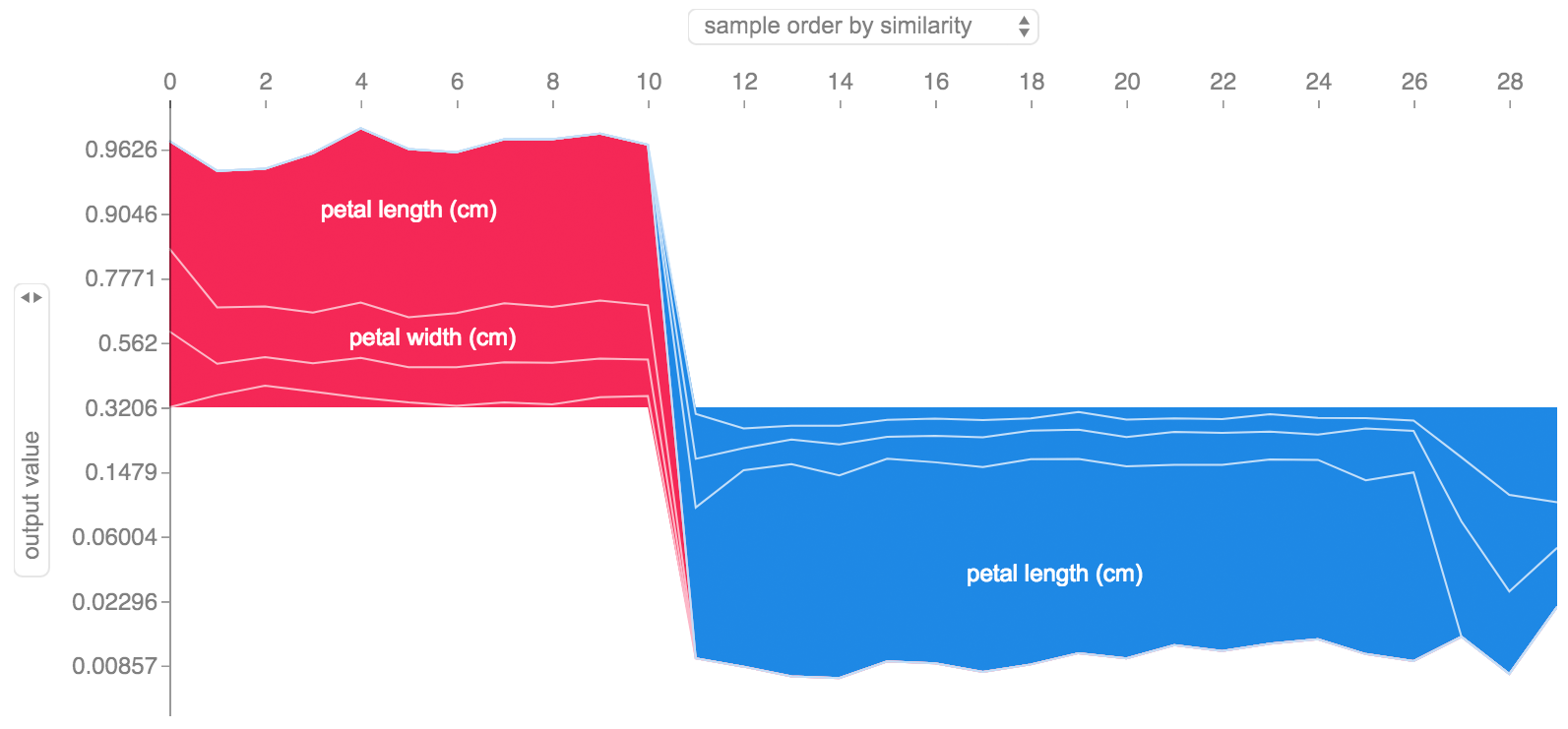

If we take many explanations such as the one shown above, rotate them 90 degrees, and then stack them horizontally, we can see explanations for an entire dataset. This is exactly what we do below for all the examples in the iris test set:

# plot the SHAP values for the Setosa output of all instances

shap.force_plot(explainer.expected_value[0], shap_values[0], X_test, link="logit")

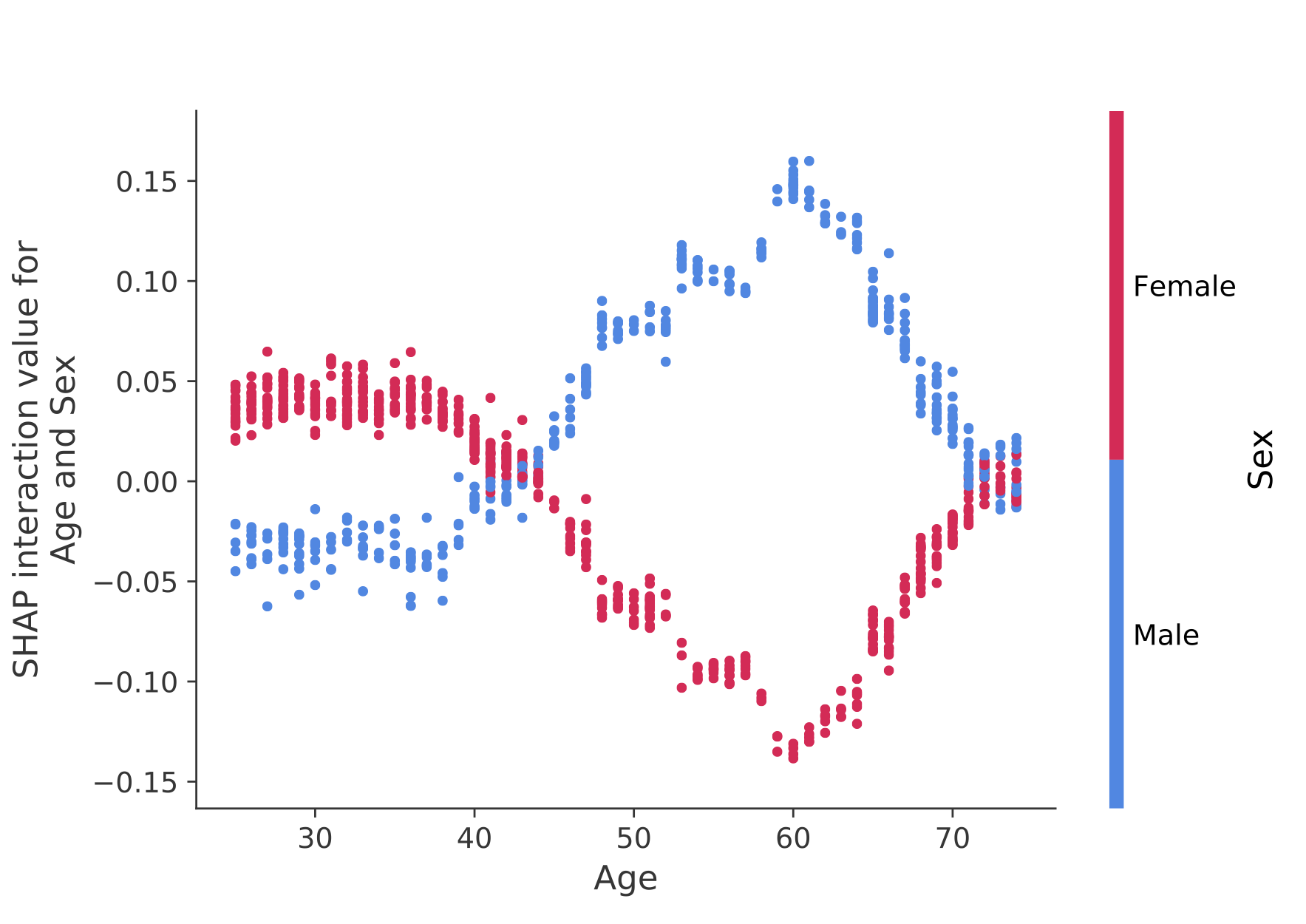
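The "specially-weighted" part of Kernel SHAP refers to the Shapley kernel from the SHAP NeurIPS paper, which assigns each coalition of features a regression weight that depends only on its size. The sketch below evaluates that weight. The helper name `shapley_kernel_weight` is hypothetical and is not part of the shap API.

```python
from math import comb


def shapley_kernel_weight(m, s):
    """Shapley kernel weight for a coalition with s of m features present."""
    if s == 0 or s == m:
        # The empty and full coalitions get infinite weight in theory; an
        # implementation would typically enforce them as constraints instead.
        return float("inf")
    return (m - 1) / (comb(m, s) * s * (m - s))


m = 4  # the iris data has four features
for s in range(1, m):
    per_coalition = shapley_kernel_weight(m, s)
    total_for_size = comb(m, s) * per_coalition
    print(f"size {s}: weight per coalition {per_coalition:.4f}, total for size {total_for_size:.4f}")
```

Coalitions with very few or very many features present receive the most weight, since they isolate the effect of individual features most directly.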

SHAP Interaction Values

SHAP interaction values are a generalization of SHAP values to higher order interactions. Fast exact computation of pairwise interactions is implemented for tree models with shap.TreeExplainer(model).shap_interaction_values(X). This returns a matrix for every prediction, where the main effects are on the diagonal and the interaction effects are off-diagonal. These values often reveal interesting hidden relationships, such as how the increased risk of death peaks for men at age 60 (see the NHANES notebook for details).
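The structure of that matrix can be seen on a toy function by brute force. The sketch below is not the fast tree algorithm. It applies the pairwise Shapley interaction index from the Tree SHAP paper to a hypothetical three-feature function, replacing "missing" features with a single reference point, and defines the diagonal as the SHAP value minus the row's off-diagonal terms. All names and data are illustrative assumptions.

```python
from itertools import combinations
from math import factorial


def f(x):
    # Hypothetical model: features 0 and 1 interact, feature 2 is purely additive.
    return x[0] * x[1] + x[2]


def interaction_matrix(f, x, ref):
    m = len(x)

    def v(subset):
        return f([x[j] if j in subset else ref[j] for j in range(m)])

    # Ordinary Shapley values, needed for the diagonal (main effects).
    phi = [0.0] * m
    for i in range(m):
        others = [j for j in range(m) if j != i]
        for size in range(m):
            w = factorial(size) * factorial(m - size - 1) / factorial(m)
            for s in combinations(others, size):
                s = set(s)
                phi[i] += w * (v(s | {i}) - v(s))

    mat = [[0.0] * m for _ in range(m)]
    for i in range(m):
        for j in range(m):
            if i == j:
                continue
            others = [k for k in range(m) if k not in (i, j)]
            for size in range(m - 1):
                w = factorial(size) * factorial(m - size - 2) / (2 * factorial(m - 1))
                for s in combinations(others, size):
                    s = set(s)
                    mat[i][j] += w * (v(s | {i, j}) - v(s | {i}) - v(s | {j}) + v(s))
        # Main effect: the SHAP value minus the interactions already assigned.
        mat[i][i] = phi[i] - sum(mat[i][j] for j in range(m) if j != i)
    return mat


x = [2.0, 3.0, 5.0]
ref = [0.0, 0.0, 0.0]
matrix = interaction_matrix(f, x, ref)
for row in matrix:
    print(row)
```

In this example the product term shows up entirely in the off-diagonal entries for features 0 and 1 (split evenly between the two symmetric cells), the additive feature 2 appears only on the diagonal, and all entries together sum to `f(x) - f(ref)`.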

Sample notebooks

The notebooks below demonstrate different use cases for SHAP. Look inside the notebooks directory of the repository if you want to try playing with the original notebooks yourself.

TreeExplainer

An implementation of Tree SHAP, a fast and exact algorithm to compute SHAP values for trees and ensembles of trees.

- NHANES survival model with XGBoost and SHAP interaction values - Using mortality data from 20 years of followup this notebook demonstrates how to use XGBoost and shap to uncover complex risk factor relationships.

- Census income classification with LightGBM - Using the standard adult census income dataset, this notebook trains a gradient boosting tree model with LightGBM and then explains predictions using shap.

- League of Legends Win Prediction with XGBoost - Using a Kaggle dataset of 180,000 ranked matches from League of Legends we train and explain a gradient boosting tree model with XGBoost to predict if a player will win their match.

DeepExplainer

An implementation of Deep SHAP, a faster (but only approximate) algorithm to compute SHAP values for deep learning models that is based on connections between SHAP and the DeepLIFT algorithm.

- MNIST Digit classification with Keras - Using the MNIST handwriting recognition dataset, this notebook trains a neural network with Keras and then explains predictions using shap.

- Keras LSTM for IMDB Sentiment Classification - This notebook trains an LSTM with Keras on the IMDB text sentiment analysis dataset and then explains predictions using shap.

GradientExplainer

An implementation of expected gradients to approximate SHAP values for deep learning models. It is based on connections between SHAP and the Integrated Gradients algorithm. GradientExplainer is slower than DeepExplainer and makes different approximation assumptions.

- Explain an Intermediate Layer of VGG16 on ImageNet - This notebook demonstrates how to explain the output of a pre-trained VGG16 ImageNet model using an internal convolutional layer.

LinearExplainer

For a linear model with independent features we can analytically compute the exact SHAP values. We can also account for feature correlation if we are willing to estimate the feature covariance matrix. LinearExplainer supports both of these options.

- Sentiment Analysis with Logistic Regression - This notebook demonstrates how to explain a linear logistic regression sentiment analysis model.
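For the independent-features case, the analytic result is that each SHAP value equals the coefficient times the feature's deviation from its background mean. The sketch below checks this on a hypothetical linear model. The weights, data, and the helper `linear_shap` are illustrative assumptions and do not use the LinearExplainer API.

```python
# Hypothetical linear model: f(x) = bias + sum(w_i * x_i)
weights = [1.5, -2.0, 0.5]
bias = 4.0
background = [
    [1.0, 0.0, 2.0],
    [3.0, 1.0, 0.0],
    [2.0, 2.0, 4.0],
]


def predict(x):
    return bias + sum(w * xi for w, xi in zip(weights, x))


def linear_shap(weights, x, background):
    """SHAP values of a linear model, assuming independent features."""
    means = [sum(col) / len(background) for col in zip(*background)]
    return [w * (xi - mu) for w, xi, mu in zip(weights, x, means)]


x = [4.0, 1.0, 2.0]
phi = linear_shap(weights, x, background)
base_value = sum(predict(b) for b in background) / len(background)

print("SHAP values:", phi)
print("base value + sum:", base_value + sum(phi))
print("prediction:", predict(x))
```

Only the first feature differs from its background mean here, so it receives all of the credit. Accounting for correlated features requires the covariance-based option described above, which this sketch does not attempt.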

KernelExplainer

An implementation of Kernel SHAP, a model agnostic method to estimate SHAP values for any model. Because it makes no assumptions about the model type, KernelExplainer is slower than the other model type specific algorithms.

- Census income classification with scikit-learn - Using the standard adult census income dataset, this notebook trains a k-nearest neighbors classifier using scikit-learn and then explains predictions using shap.

- ImageNet VGG16 Model with Keras - Explain the classic VGG16 convolutional neural network's predictions for an image. This works by applying the model agnostic Kernel SHAP method to a super-pixel segmented image.

- Iris classification - A basic demonstration using the popular iris species dataset. It explains predictions from six different models in scikit-learn using shap.

Documentation notebooks

These notebooks comprehensively demonstrate how to use specific functions and objects.

Methods Unified by SHAP

- LIME: Ribeiro, Marco Tulio, Sameer Singh, and Carlos Guestrin. "Why should i trust you?: Explaining the predictions of any classifier." Proceedings of the 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining. ACM, 2016.

- Shapley sampling values: Strumbelj, Erik, and Igor Kononenko. "Explaining prediction models and individual predictions with feature contributions." Knowledge and information systems 41.3 (2014): 647-665.

- DeepLIFT: Shrikumar, Avanti, Peyton Greenside, and Anshul Kundaje. "Learning important features through propagating activation differences." arXiv preprint arXiv:1704.02685 (2017).

- QII: Datta, Anupam, Shayak Sen, and Yair Zick. "Algorithmic transparency via quantitative input influence: Theory and experiments with learning systems." Security and Privacy (SP), 2016 IEEE Symposium on. IEEE, 2016.

- Layer-wise relevance propagation: Bach, Sebastian, et al. "On pixel-wise explanations for non-linear classifier decisions by layer-wise relevance propagation." PloS one 10.7 (2015): e0130140.

- Shapley regression values: Lipovetsky, Stan, and Michael Conklin. "Analysis of regression in game theory approach." Applied Stochastic Models in Business and Industry 17.4 (2001): 319-330.

- Tree interpreter: Saabas, Ando. Interpreting random forests. http://blog.datadive.net/interpreting-random-forests/

Citations

The algorithms and visualizations used in this package came primarily out of research in Su-In Lee's lab at the University of Washington, and Microsoft Research. If you use SHAP in your research we would appreciate a citation to the appropriate paper(s):

- For general use of SHAP you can read/cite our NeurIPS paper (bibtex).

- For TreeExplainer you can read/cite our Nature Machine Intelligence paper (bibtex; free access).

- For GPUTreeExplainer you can read/cite this article.

- For force_plot visualizations and medical applications you can read/cite our Nature Biomedical Engineering paper (bibtex; free access).
