A library for augmenting text for natural language processing applications.


TextAugment: Improving Short Text Classification through Global Augmentation Methods

You have just found TextAugment.

TextAugment is a Python 3 library for augmenting text for natural language processing applications. TextAugment stands on the giant shoulders of NLTK, Gensim, and TextBlob and plays nicely with them.

Features

- Generate synthetic data to improve model performance without manual effort.

- Simple, lightweight, easy-to-use library.

- Plug and play with any machine learning framework (e.g. PyTorch, TensorFlow, scikit-learn).

- Supports textual data.

Citation Paper

Improving short text classification through global augmentation methods.

Requirements

- Python 3

The following software packages are dependencies and will be installed automatically.

$ pip install numpy nltk gensim textblob googletrans

The following code downloads the NLTK WordNet corpus.

import nltk

nltk.download('wordnet')

The following code downloads the NLTK Punkt tokenizer. This tokenizer divides a text into a list of sentences by using an unsupervised algorithm to build a model for abbreviation words, collocations, and words that start sentences.

nltk.download('punkt')

The following code downloads the default NLTK part-of-speech tagger model. A part-of-speech tagger processes a sequence of words and attaches a part-of-speech tag to each word.

nltk.download('averaged_perceptron_tagger')

Use Gensim to load a pre-trained word2vec model, such as the Google News vectors (available from Google Drive).

import gensim

model = gensim.models.KeyedVectors.load_word2vec_format('./GoogleNews-vectors-negative300.bin', binary=True)

Or train one from scratch using your own data or the following public dataset:

Installation

Install from pip [Recommended]

$ pip install textaugment

Or install the latest version from GitHub:

$ pip install git+ssh://git@github.com/dsfsi/textaugment.git

Install from source

$ git clone git@github.com:dsfsi/textaugment.git

$ cd textaugment

$ python setup.py install

How to use

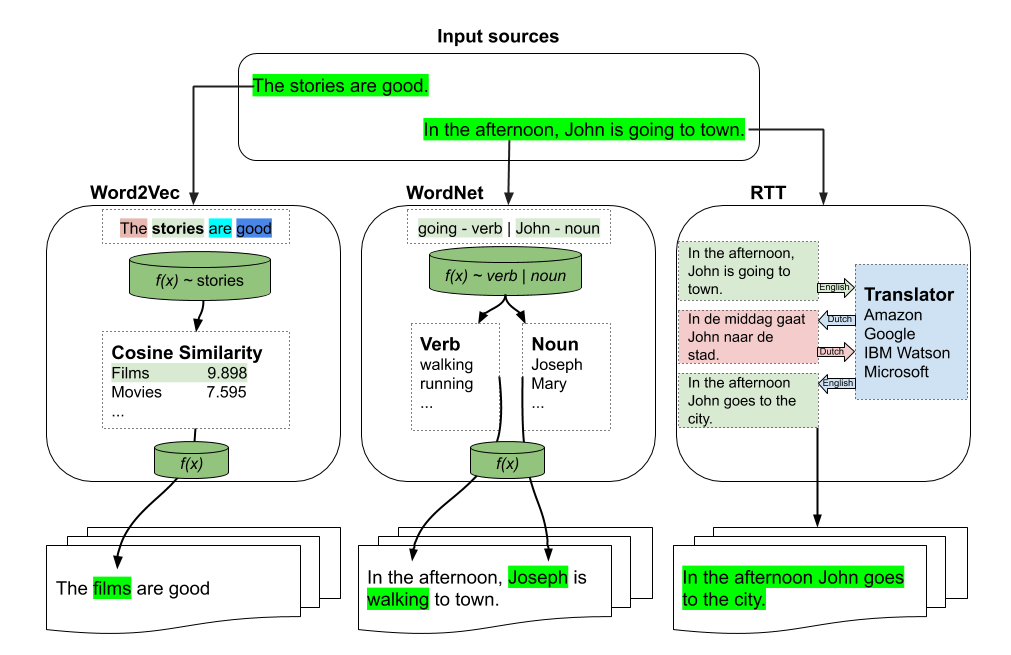

There are three types of augmentations which can be used:

- word2vec

from textaugment import Word2vec

- wordnet

from textaugment import Wordnet

- translate (This will require internet access)

from textaugment import Translate

Word2vec-based augmentation

Basic example

>>> from textaugment import Word2vec

>>> t = Word2vec(model='path/to/gensim/model') # or pass a loaded gensim model object instead of a path

>>> t.augment('The stories are good')

The films are good
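Word2vec-based augmentation swaps words for their neighbours in embedding space. The dependency-free sketch below illustrates only that idea: it uses a tiny hand-made embedding table (`TOY_VECTORS`, hypothetical) in place of a real Gensim model and replaces one explicitly chosen word, whereas TextAugment picks words itself. It is not the library's implementation.

```python
import math

# Hypothetical toy embeddings standing in for a trained word2vec model.
TOY_VECTORS = {
    "stories": [0.9, 0.1, 0.0],
    "films": [0.85, 0.15, 0.05],
    "movies": [0.8, 0.2, 0.1],
    "good": [0.0, 0.9, 0.1],
    "excellent": [0.05, 0.85, 0.2],
    "the": [0.1, 0.1, 0.9],
    "are": [0.2, 0.0, 0.8],
}


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm


def nearest_neighbour(word, vectors):
    """Return the most similar other word in `vectors`, or None if `word` is unknown."""
    if word not in vectors:
        return None
    candidates = (w for w in vectors if w != word)
    return max(candidates, key=lambda w: cosine(vectors[word], vectors[w]))


def replace_word(sentence, target, vectors):
    """Replace every occurrence of `target` (case-insensitive) with its nearest neighbour."""
    neighbour = nearest_neighbour(target.lower(), vectors)
    if neighbour is None:
        return sentence
    return " ".join(neighbour if w.lower() == target.lower() else w for w in sentence.split())


print(replace_word("The stories are good", "stories", TOY_VECTORS))
```

With a real model, `nearest_neighbour` would be replaced by the model's own similarity query; the toy table only keeps the example runnable offline.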

Advanced example

>>> runs = 1 # number of times to augment a sentence. 1 by default.

>>> v = False # verbose mode: replace all the words. If enabled, runs has no effect. Used in this paper (https://www.cs.cmu.edu/~diyiy/docs/emnlp_wang_2015.pdf)

>>> p = 0.5 # probability of success of an individual trial (0.1<p<1.0), default is 0.5. Used by a geometric distribution to select words from a sentence.

>>> t = Word2vec(model='path/to/gensim/model', runs=5, v=False, p=0.5) # model may also be a loaded gensim model object

>>> t.augment('The stories are good')

The movies are excellent
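The `p` parameter feeds a geometric distribution that decides how many words are selected. A minimal standard-library sketch of drawing such a count by inverse-transform sampling is below; the helper names `sample_geometric` and `pick_positions`, and the choice of turning the count into random distinct positions, are assumptions for illustration rather than TextAugment's code.

```python
import math
import random


def sample_geometric(p, rng):
    """Draw k >= 1 from a geometric distribution (trials until the first success).

    Inverse-transform sampling: k = ceil(log(1 - u) / log(1 - p)) with u ~ U[0, 1).
    """
    if not 0.0 < p < 1.0:
        raise ValueError("p must lie strictly between 0 and 1")
    u = rng.random()
    return max(1, math.ceil(math.log(1.0 - u) / math.log(1.0 - p)))


def pick_positions(words, p, rng):
    """Pick up to k distinct word positions, where k is geometrically distributed."""
    k = min(sample_geometric(p, rng), len(words))
    return sorted(rng.sample(range(len(words)), k))


rng = random.Random(0)
print(pick_positions("The stories are good".split(), 0.5, rng))
```

Because the mean of this distribution is 1/p, a larger `p` means fewer words are touched per run on average.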

WordNet-based augmentation

Basic example

>>> import nltk

>>> nltk.download('punkt')

>>> nltk.download('wordnet')

>>> from textaugment import Wordnet

>>> t = Wordnet()

>>> t.augment('In the afternoon, John is going to town')

In the afternoon, John is walking to town

Advanced example

>>> v = True # enable verb augmentation. True by default.

>>> n = False # enable noun augmentation. False by default.

>>> runs = 1 # number of times to augment a sentence. 1 by default.

>>> p = 0.5 # probability of success of an individual trial (0.1<p<1.0), default is 0.5. Used by a geometric distribution to select words from a sentence.

>>> t = Wordnet(v=False, n=True, p=0.5)

>>> t.augment('In the afternoon, John is going to town')

In the afternoon, Joseph is going to town.
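The `v` and `n` flags restrict which parts of speech are eligible for replacement. A minimal sketch of that filtering, assuming the tokens were already tagged with Penn Treebank tags (the tag set NLTK's default tagger produces) and using a hypothetical hand-written `SYNONYMS` table instead of WordNet. For simplicity it replaces every eligible word rather than a geometrically sampled subset, so it is not the library's exact behaviour.

```python
import random

# Hypothetical synonym table standing in for WordNet lookups.
SYNONYMS = {
    "going": ["walking", "travelling"],
    "John": ["Joseph"],
    "town": ["city"],
}


def augment_tagged(tagged, v=True, n=False, rng=None):
    """Replace verbs (tags starting with 'VB') and/or nouns ('NN...') with a random synonym.

    `tagged` is a list of (word, Penn Treebank tag) pairs.
    """
    rng = rng or random.Random()
    out = []
    for word, tag in tagged:
        eligible = (v and tag.startswith("VB")) or (n and tag.startswith("NN"))
        if eligible and word in SYNONYMS:
            out.append(rng.choice(SYNONYMS[word]))
        else:
            out.append(word)
    return " ".join(out)


tagged = [("John", "NNP"), ("is", "VBZ"), ("going", "VBG"), ("to", "TO"), ("town", "NN")]
print(augment_tagged(tagged, v=False, n=True, rng=random.Random(1)))
```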

RTT-based (round-trip translation) augmentation

Example

>>> src = "en" # source language of the sentence

>>> to = "fr" # target language

>>> from textaugment import Translate

>>> t = Translate(src="en", to="fr")

>>> t.augment('In the afternoon, John is going to town')

In the afternoon John goes to town
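Round-trip translation paraphrases a sentence by translating it into the target language and then back into the source language. The sketch below shows only that control flow. `translate_fn` is a hypothetical callable (in practice it would wrap an online translation service, which is why this augmentation needs internet access), and the toy phrase tables exist only so the example runs offline.

```python
from typing import Callable

# Hypothetical interface: translate_fn(text, src, dest) -> translated text.
TranslateFn = Callable[[str, str, str], str]


def round_trip(text: str, translate_fn: TranslateFn, src: str = "en", to: str = "fr") -> str:
    """Translate `text` from `src` to `to`, then back to `src`."""
    intermediate = translate_fn(text, src, to)
    return translate_fn(intermediate, to, src)


# Toy, offline phrase tables used only for demonstration.
TOY_TABLES = {
    ("en", "fr"): {"In the afternoon, John is going to town": "L'après-midi, John va en ville"},
    ("fr", "en"): {"L'après-midi, John va en ville": "In the afternoon John goes to town"},
}


def toy_translate(text: str, src: str, dest: str) -> str:
    """Look the sentence up in TOY_TABLES; fall back to the input unchanged."""
    return TOY_TABLES.get((src, dest), {}).get(text, text)


print(round_trip("In the afternoon, John is going to town", toy_translate))
```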

EDA: Easy data augmentation techniques for boosting performance on text classification tasks

This is an implementation of EDA, the set of techniques proposed by Jason Wei and Kai Zou.

Synonym Replacement

Randomly choose n words from the sentence that are not stop words. Replace each of these words with one of its synonyms chosen at random.

Basic example

>>> from textaugment import EDA

>>> t = EDA()

>>> t.synonym_replacement("John is going to town")

John is give out to town
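A minimal sketch of the synonym-replacement step described above, assuming a small hypothetical stop-word list and synonym table in place of NLTK's stop words and WordNet. The default `n=1` is also an assumption; TextAugment's `EDA` class may choose `n` and synonyms differently.

```python
import random

STOP_WORDS = {"is", "to", "the", "a", "an"}  # hypothetical, abbreviated list
SYNONYMS = {"going": ["give out", "proceed"], "town": ["township"], "John": ["Jack"]}  # hypothetical


def synonym_replacement(sentence, n=1, rng=None):
    """Replace up to n distinct non-stop words that have synonyms with a random synonym."""
    rng = rng or random.Random()
    words = sentence.split()
    candidates = [i for i, w in enumerate(words) if w.lower() not in STOP_WORDS and w in SYNONYMS]
    rng.shuffle(candidates)  # pick the n positions at random
    for i in candidates[:n]:
        words[i] = rng.choice(SYNONYMS[words[i]])
    return " ".join(words)


print(synonym_replacement("John is going to town", n=1, rng=random.Random(0)))
```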

Random Deletion

Randomly remove each word in the sentence with probability p.

Basic example

>>> from textaugment import EDA

>>> t = EDA()

>>> t.random_deletion("John is going to town", p=0.2)

is going to town
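Random deletion can be sketched in a few lines: each word is kept only if a uniform draw is at least `p`. Keeping one random word when every word would otherwise be deleted is an assumption of this sketch, made so the output is never empty; it is not taken from TextAugment's code.

```python
import random


def random_deletion(sentence, p=0.2, rng=None):
    """Drop each word independently with probability p; never return an empty string."""
    rng = rng or random.Random()
    words = sentence.split()
    if len(words) <= 1:
        return sentence
    kept = [w for w in words if rng.random() >= p]
    if not kept:  # every word was deleted: fall back to one random word
        kept = [rng.choice(words)]
    return " ".join(kept)


print(random_deletion("John is going to town", p=0.2, rng=random.Random(0)))
```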

Random Swap

Randomly choose two words in the sentence and swap their positions. Do this n times.

Basic example

>>> from textaugment import EDA

>>> t = EDA()

>>> t.random_swap("John is going to town")

John town going to is
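A minimal sketch of random swap, assuming `n` defaults to 1 (a hypothetical default chosen here): two distinct positions are drawn with `random.sample` and their words exchanged, `n` times.

```python
import random


def random_swap(sentence, n=1, rng=None):
    """Swap the words at two randomly chosen distinct positions, n times."""
    rng = rng or random.Random()
    words = sentence.split()
    if len(words) < 2:
        return sentence
    for _ in range(n):
        i, j = rng.sample(range(len(words)), 2)  # two distinct positions
        words[i], words[j] = words[j], words[i]
    return " ".join(words)


print(random_swap("John is going to town", rng=random.Random(0)))
```

Drawing the two positions without replacement guarantees that every swap actually moves two words.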

Random Insertion

Find a random synonym of a random word in the sentence that is not a stop word. Insert that synonym into a random position in the sentence. Do this n times.

Basic example

>>> from textaugment import EDA

>>> t = EDA()

>>> t.random_insertion("John is going to town")

John is going to make up town
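A minimal sketch of random insertion, again assuming a hypothetical stop-word list and synonym table instead of NLTK resources, and a hypothetical default of `n=1`. Note that a multi-word synonym such as "make up" is inserted as a single phrase.

```python
import random

STOP_WORDS = {"is", "to", "the", "a", "an"}  # hypothetical, abbreviated list
SYNONYMS = {"going": ["make up", "proceed"], "town": ["township"]}  # hypothetical


def random_insertion(sentence, n=1, rng=None):
    """Insert a synonym of a random non-stop word at a random position, n times."""
    rng = rng or random.Random()
    words = sentence.split()
    for _ in range(n):
        candidates = [w for w in words if w.lower() not in STOP_WORDS and w in SYNONYMS]
        if not candidates:  # nothing to draw synonyms from
            break
        synonym = rng.choice(SYNONYMS[rng.choice(candidates)])
        words.insert(rng.randint(0, len(words)), synonym)  # randint is inclusive: may append
    return " ".join(words)


print(random_insertion("John is going to town", rng=random.Random(0)))
```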

Built with ❤ on

Authors

Acknowledgements

Cite this paper when using this library.

@article{marivate2019improving,
  title={Improving short text classification through global augmentation methods},
  author={Marivate, Vukosi and Sefara, Tshephisho},
  journal={arXiv preprint arXiv:1907.03752},
  year={2019}
}

Licence

MIT licensed. See the bundled LICENCE file for more details.


Download files


Source Distribution

Built Distribution


File details

Details for the file textaugment-1.3.tar.gz.

File metadata

- Download URL: textaugment-1.3.tar.gz

- Upload date:

- Size: 15.1 kB

- Tags: Source

- Uploaded using Trusted Publishing? No

- Uploaded via: twine/3.1.1 pkginfo/1.5.0.1 requests/2.22.0 setuptools/46.4.0.post20200518 requests-toolbelt/0.9.1 tqdm/4.46.0 CPython/3.7.7

File hashes

| Algorithm | Hash digest |
|---|---|
| SHA256 | 76c3942e1d78a6ebe52bc1df678b7a88470e34be2719c0ffdae4ada59b9fce7e |
| MD5 | 7db512a8eca306c4ee1149e4095c806c |
| BLAKE2b-256 | 220d77d7723bd10d4cf657aae9d7846aac9a9d2adb9c80d4a91a340ab058d932 |
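To check a downloaded archive against the SHA256 digest listed for textaugment-1.3.tar.gz, you can hash it with Python's standard `hashlib` module. A minimal sketch; the helper names are illustrative, and the local file path in the usage comment is an assumption about where you saved the download.

```python
import hashlib
from typing import BinaryIO

EXPECTED_SHA256 = "76c3942e1d78a6ebe52bc1df678b7a88470e34be2719c0ffdae4ada59b9fce7e"


def sha256_of_stream(stream: BinaryIO, chunk_size: int = 65536) -> str:
    """Return the hex SHA-256 digest of a binary stream, read in chunks."""
    digest = hashlib.sha256()
    for chunk in iter(lambda: stream.read(chunk_size), b""):
        digest.update(chunk)
    return digest.hexdigest()


def verify(path: str, expected: str = EXPECTED_SHA256) -> bool:
    """True if the file at `path` has the expected SHA-256 digest (case-insensitive)."""
    with open(path, "rb") as fh:
        return sha256_of_stream(fh) == expected.strip().lower()


# Usage, assuming the archive was saved in the current directory:
# print(verify("textaugment-1.3.tar.gz"))
```

Reading in fixed-size chunks keeps memory use constant regardless of file size.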

File details

Details for the file textaugment-1.3-py3-none-any.whl.

File metadata

- Download URL: textaugment-1.3-py3-none-any.whl

- Upload date:

- Size: 15.8 kB

- Tags: Python 3

- Uploaded using Trusted Publishing? No

- Uploaded via: twine/3.1.1 pkginfo/1.5.0.1 requests/2.22.0 setuptools/46.4.0.post20200518 requests-toolbelt/0.9.1 tqdm/4.46.0 CPython/3.7.7

File hashes

| Algorithm | Hash digest |
|---|---|
| SHA256 | a4c95a01e2d212c0bda9ae11339c04608c28cf33d19b8f6c4f76e1b33fb283cd |
| MD5 | 897ed7ea12b26e0d60dec6cfdf2c0ba6 |
| BLAKE2b-256 | d587906c855827f99a65ab91b22afbfa91731bd4397b5e3ca344de571e5c7651 |