Tomoto, The Topic Modeling Tool for Python


What is tomotopy?

tomotopy is a Python extension of tomoto (Topic Modeling Tool), a Gibbs-sampling-based topic model library written in C++. It uses the vectorization (SIMD) capabilities of modern CPUs to maximize speed. The current version of tomoto supports several major topic models, including:

Latent Dirichlet Allocation (tomotopy.LDAModel)

Labeled LDA (tomotopy.LLDAModel)

Partially Labeled LDA (tomotopy.PLDAModel)

Supervised LDA (tomotopy.SLDAModel)

Dirichlet Multinomial Regression (tomotopy.DMRModel)

Generalized Dirichlet Multinomial Regression (tomotopy.GDMRModel)

Hierarchical Dirichlet Process (tomotopy.HDPModel)

Hierarchical LDA (tomotopy.HLDAModel)

Multi Grain LDA (tomotopy.MGLDAModel)

Pachinko Allocation (tomotopy.PAModel)

Hierarchical PA (tomotopy.HPAModel)

Correlated Topic Model (tomotopy.CTModel)

Dynamic Topic Model (tomotopy.DTModel)

The most recent version of tomotopy is 0.10.1.

Getting Started

You can install tomotopy easily using pip. (https://pypi.org/project/tomotopy/)

$ pip install --upgrade pip
$ pip install tomotopy

The supported OS and Python versions are:

Linux (x86-64) with Python >= 3.5

macOS >= 10.13 with Python >= 3.5

Windows 7 or later (x86, x86-64) with Python >= 3.5

Other OS with Python >= 3.5: compilation from source code required (with a C++11-compatible compiler)

After installation, you can start using tomotopy simply by importing it.

import tomotopy as tp
print(tp.isa)  # prints 'avx2', 'avx', 'sse2' or 'none'

Currently, tomotopy can exploit the AVX2, AVX or SSE2 SIMD instruction sets to maximize performance. When the package is imported, it checks which instruction sets are available and selects the best one. If tp.isa is 'none', training iterations may take a long time. However, since most modern Intel and AMD CPUs provide SIMD instruction sets, SIMD acceleration can make a large difference.
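The "select the best option" step can be pictured as walking a preference list from most to least capable instruction set. The sketch below is hypothetical (the `select_isa` helper and `PREFERENCE` tuple are illustrative, not tomotopy's detection code), and it takes the set of available features as an argument instead of probing the CPU.

```python
# Hypothetical sketch of "pick the best available instruction set".
# The feature set is passed in explicitly; real code would query the CPU.
PREFERENCE = ("avx2", "avx", "sse2")  # most to least capable


def select_isa(available):
    """Return the first ISA in PREFERENCE that is available, else 'none'."""
    for isa in PREFERENCE:
        if isa in available:
            return isa
    return "none"


print(select_isa({"sse2", "avx"}))  # avx
```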

Here is sample code for simple LDA training on texts from the ‘sample.txt’ file.

import tomotopy as tp

mdl = tp.LDAModel(k=20)
for line in open('sample.txt'):
    mdl.add_doc(line.strip().split())

for i in range(0, 100, 10):
    mdl.train(10)
    print('Iteration: {}\tLog-likelihood: {}'.format(i, mdl.ll_per_word))

for k in range(mdl.k):
    print('Top 10 words of topic #{}'.format(k))
    print(mdl.get_topic_words(k, top_n=10))

mdl.summary()

Performance of tomotopy

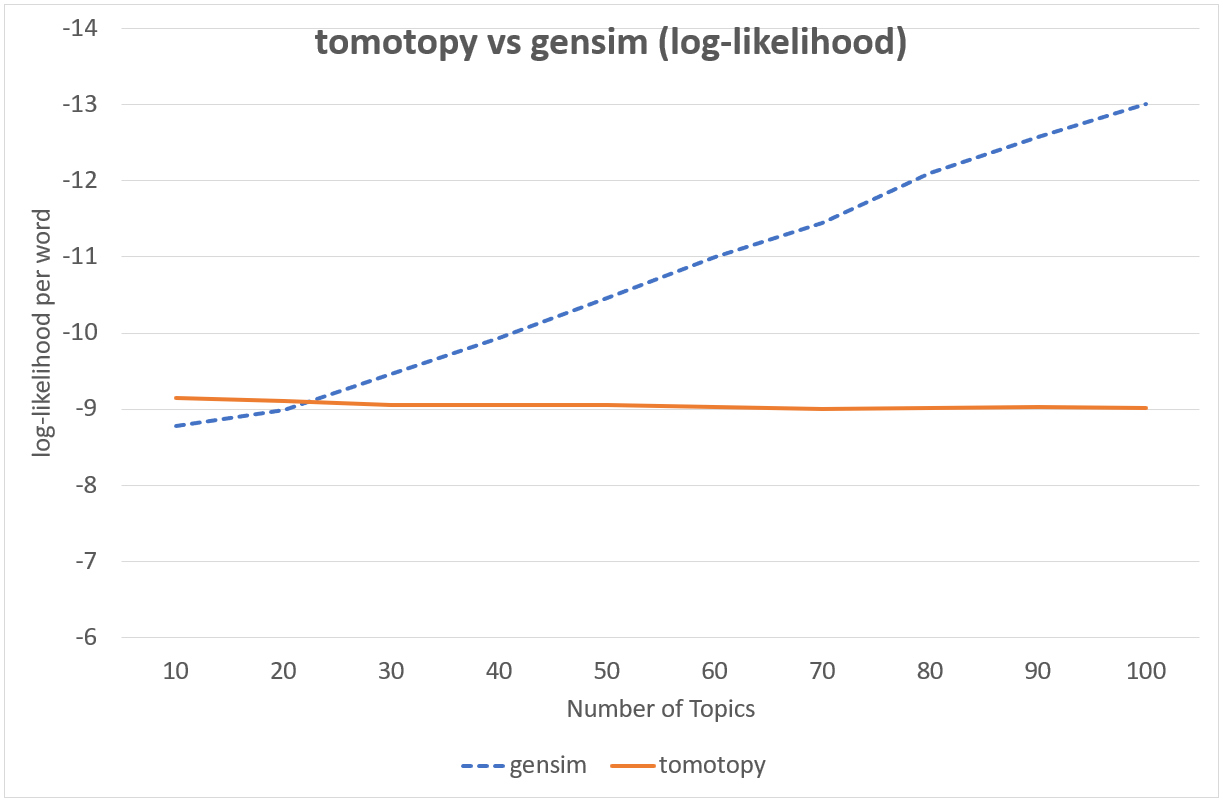

tomotopy uses Collapsed Gibbs Sampling (CGS) to infer the distribution of topics and the distribution of words. CGS generally converges more slowly than the Variational Bayes (VB) method used by [gensim’s LdaModel], but each of its iterations can be computed much faster. In addition, tomotopy can take advantage of multicore CPUs with a SIMD instruction set, which makes iterations faster still.

[gensim’s LdaModel]: https://radimrehurek.com/gensim/models/ldamodel.html
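To make the CGS idea concrete, here is a minimal pure-Python sketch of a textbook collapsed Gibbs sampler for plain LDA with symmetric priors `alpha` and `beta`. It only illustrates the technique: the function name, defaults and toy data are hypothetical, and tomotopy's own C++ sampler (with SIMD and multithreading) is far more elaborate.

```python
import random


def lda_collapsed_gibbs(docs, n_topics, n_vocab, alpha=0.1, beta=0.01,
                        n_iter=50, seed=0):
    """Minimal collapsed Gibbs sampler for plain LDA (illustration only).

    docs: list of documents, each a list of integer word ids in [0, n_vocab).
    Returns (doc-topic counts, topic-word counts, per-token assignments).
    """
    rng = random.Random(seed)
    ndk = [[0] * n_topics for _ in docs]             # doc-topic counts
    nkw = [[0] * n_vocab for _ in range(n_topics)]   # topic-word counts
    nk = [0] * n_topics                              # tokens per topic
    z = []                                           # topic of each token

    # Start from random topic assignments.
    for d, doc in enumerate(docs):
        zd = []
        for w in doc:
            k = rng.randrange(n_topics)
            zd.append(k)
            ndk[d][k] += 1
            nkw[k][w] += 1
            nk[k] += 1
        z.append(zd)

    topics = range(n_topics)
    for _ in range(n_iter):
        for d, doc in enumerate(docs):
            for i, w in enumerate(doc):
                # Take the current token out of all counts.
                k = z[d][i]
                ndk[d][k] -= 1
                nkw[k][w] -= 1
                nk[k] -= 1
                # Full conditional p(z_i = t | all other assignments),
                # up to a normalizing constant.
                weights = [(ndk[d][t] + alpha) * (nkw[t][w] + beta)
                           / (nk[t] + n_vocab * beta) for t in topics]
                k = rng.choices(topics, weights=weights)[0]
                # Put the token back under its newly sampled topic.
                z[d][i] = k
                ndk[d][k] += 1
                nkw[k][w] += 1
                nk[k] += 1
    return ndk, nkw, z


docs = [[0, 1, 2, 0], [3, 4, 3], [0, 2, 1], [4, 3, 4]]
ndk, nkw, z = lda_collapsed_gibbs(docs, n_topics=2, n_vocab=5)
print(ndk)  # per-document topic counts after sampling
```

Each sweep only touches integer counts, which is why a single CGS iteration is cheap even though many iterations are needed.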

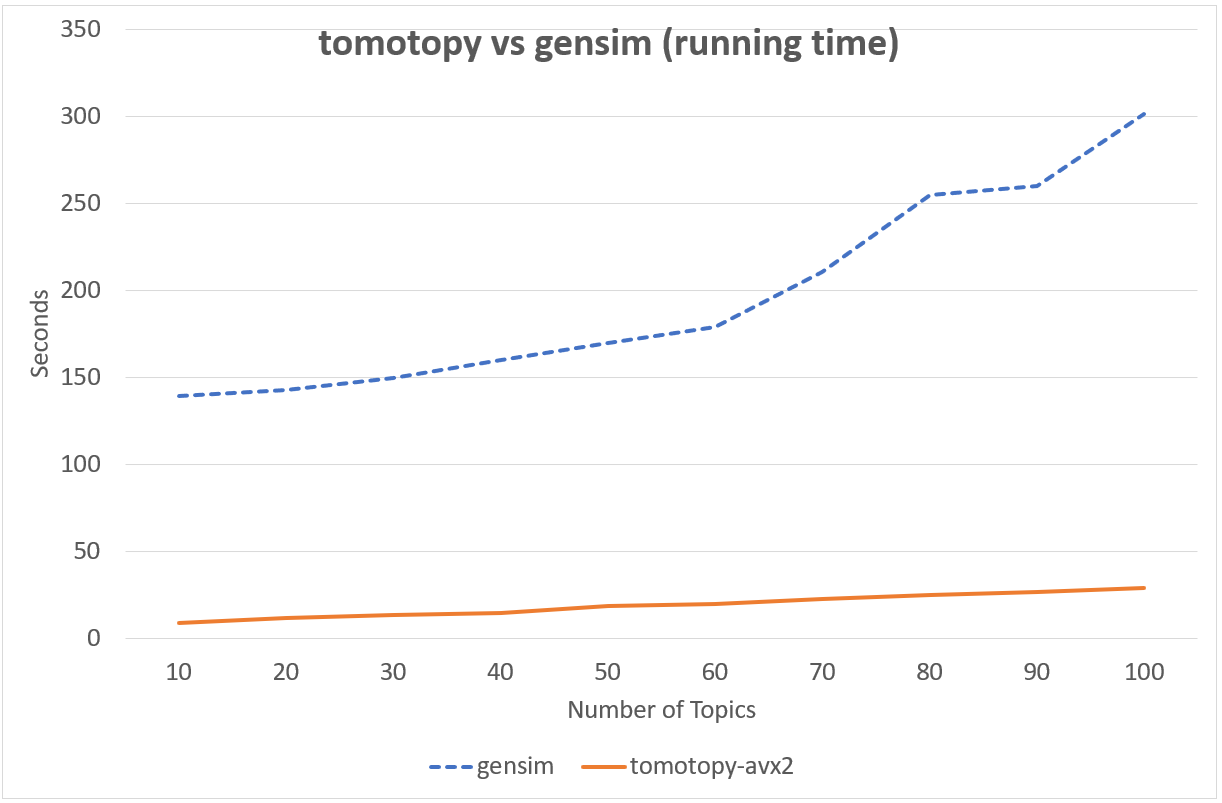

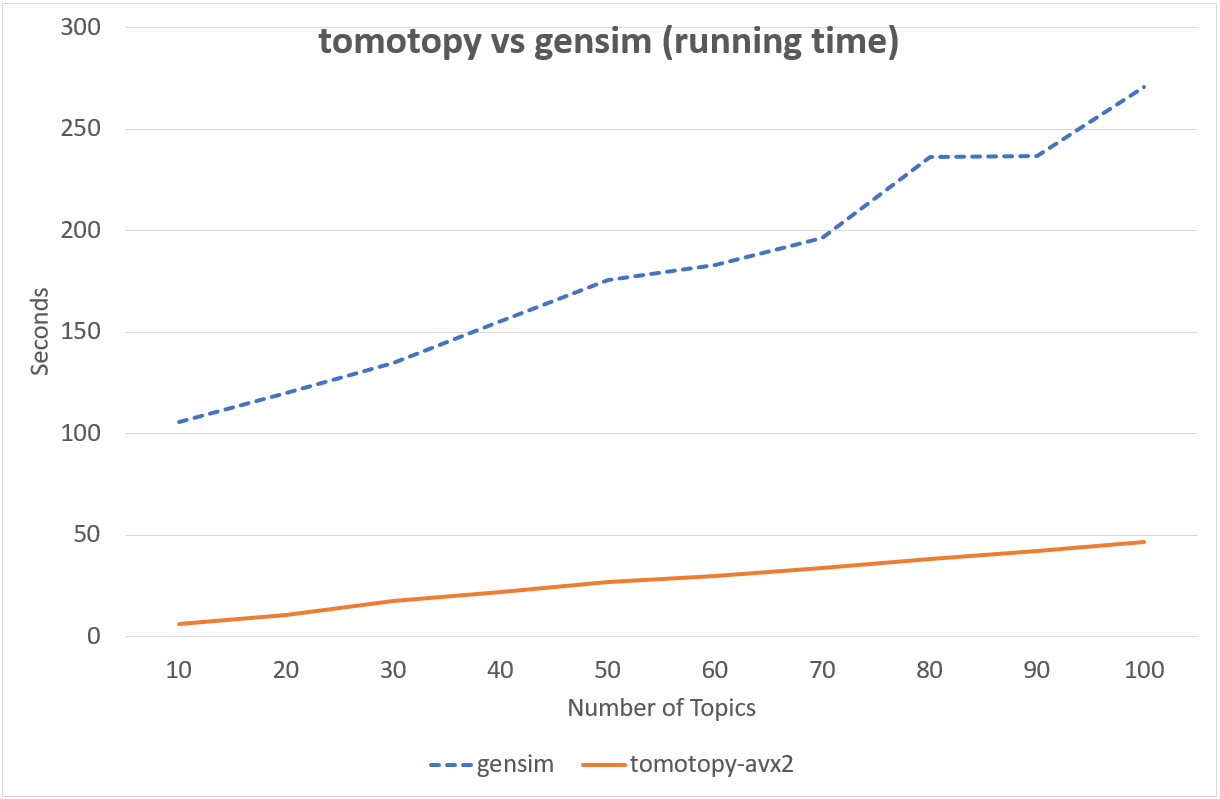

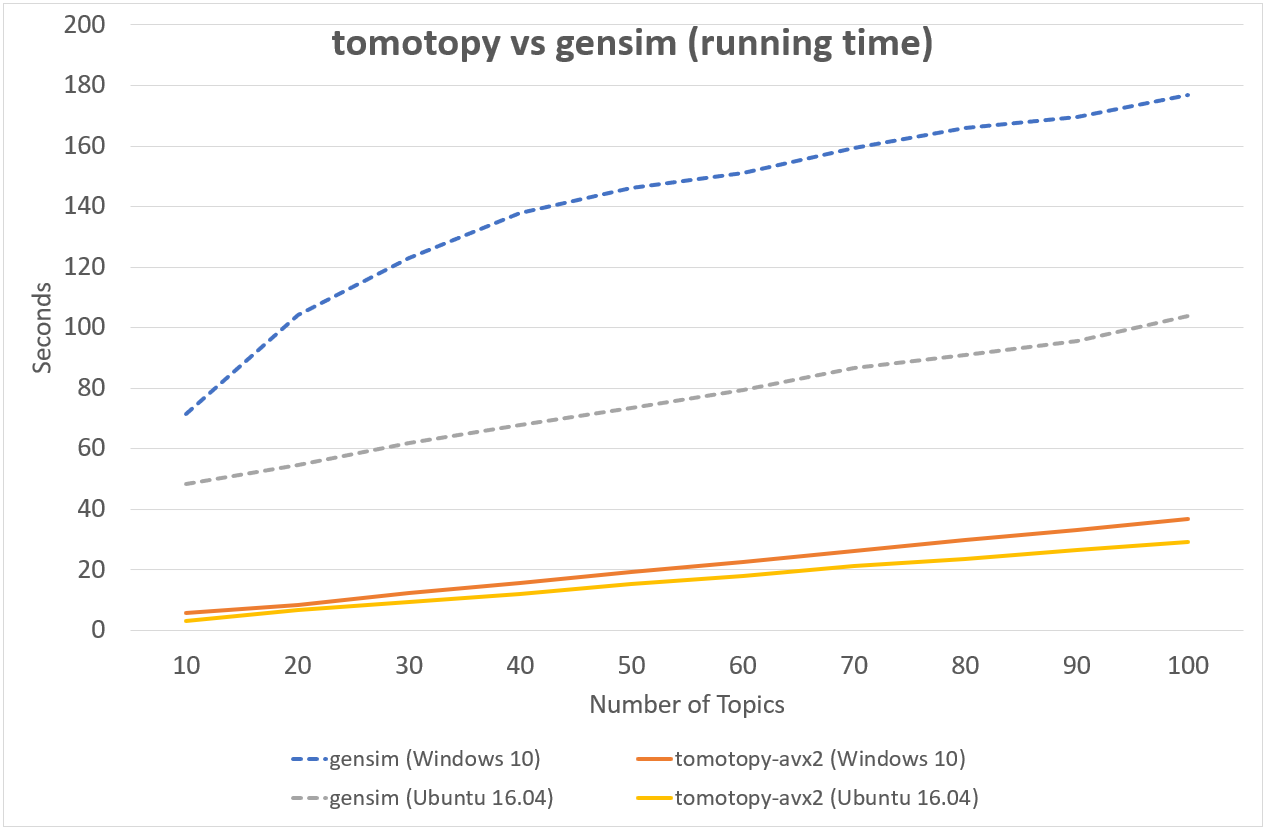

Following chart shows the comparison of LDA model’s running time between tomotopy and gensim. The input data consists of 1000 random documents from English Wikipedia with 1,506,966 words (about 10.1 MB). tomotopy trains 200 iterations and gensim trains 10 iterations.

↑ Performance in Intel i5-6600, x86-64 (4 cores)

↑ Performance in Intel Xeon E5-2620 v4, x86-64 (8 cores, 16 threads)

↑ Performance in AMD Ryzen7 3700X, x86-64 (8 cores, 16 threads)

Although tomotopy ran 20 times as many iterations, its overall running time was 5 to 10 times shorter than gensim's, and it yields stable results.
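Combining the two ratios gives a rough per-iteration comparison. This back-of-the-envelope estimate assumes running time grows linearly with the iteration count; $T_g$ and $T_t$ denote the total running times of gensim and tomotopy, and $s = T_g / T_t$ is the overall speed-up reported above.

$$
\frac{T_g / 10}{T_t / 200} = 20 \cdot \frac{T_g}{T_t} = 20s,
\qquad s \in [5, 10] \;\Rightarrow\; 20s \in [100, 200]
$$

So on this benchmark a single tomotopy iteration costs roughly 1/100 to 1/200 of a gensim iteration, keeping in mind that a CGS iteration and a VB iteration do very different amounts of work.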

It is difficult to compare CGS and VB directly because they are totally different techniques. But from a practical point of view, we can compare their speed and results. The following chart shows the log-likelihood per word of the two models' results.

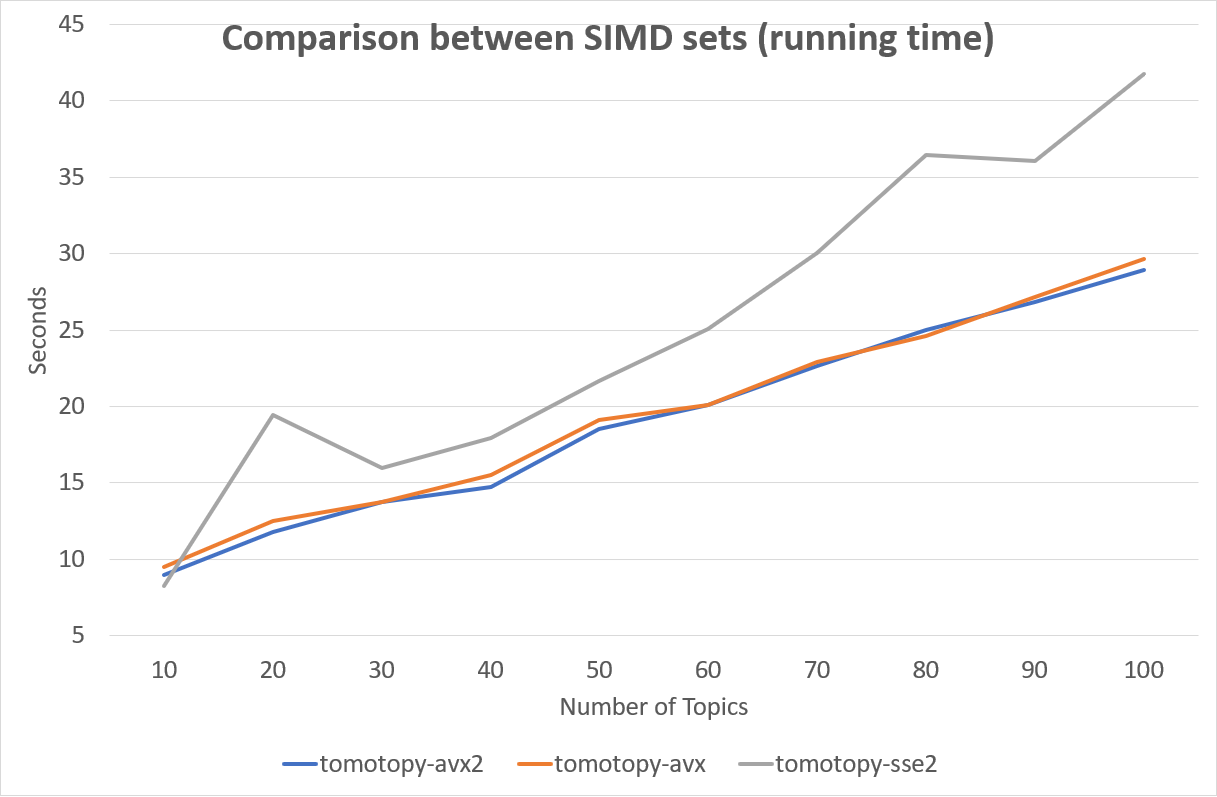

The SIMD instruction set has a large effect on performance. The following is a comparison between SIMD instruction sets.

Fortunately, most recent x86-64 CPUs support the AVX2 instruction set, so we can benefit from AVX2 performance.

Vocabulary Control Using CF and DF

CF (collection frequency) and DF (document frequency) are concepts used in information retrieval: CF is the total number of times a word appears in the corpus, and DF is the number of documents in the corpus in which the word appears. tomotopy provides these two measures as the parameters min_cf and min_df, which trim low-frequency words when building the corpus.

For example, let’s say we have 5 documents, #0 to #4, composed of the following words:

#0 : a, b, c, d, e, c
#1 : a, b, e, f
#2 : c, d, c
#3 : a, e, f, g
#4 : a, b, g

Both the CF of a and the CF of c are 4, because each appears 4 times in the entire corpus. But the DF of a is 4 while the DF of c is 2, because a appears in #0, #1, #3 and #4, whereas c appears only in #0 and #2. So if we trim low-frequency words using min_cf=3, the result is as follows:

(d, f and g are removed.)
#0 : a, b, c, e, c
#1 : a, b, e
#2 : c, c
#3 : a, e
#4 : a, b

However, with min_df=3 the result is:

(c, d, f and g are removed.)
#0 : a, b, e
#1 : a, b, e
#2 : (empty doc)
#3 : a, e
#4 : a, b

As we can see, min_df is a stronger criterion than min_cf. In topic modeling, words that appear repeatedly in only one document do not contribute to estimating the topic-word distribution. So removing words with low DF is a good way to reduce model size while preserving the results of the final model. In short, prefer min_df over min_cf.
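The CF/DF example above can be reproduced in a few lines of plain Python. This sketch does not call tomotopy; it assumes min_cf and min_df keep a word when its frequency is at least the threshold, which matches the results listed above. The `trim` helper is hypothetical.

```python
from collections import Counter

docs = [
    ["a", "b", "c", "d", "e", "c"],  # #0
    ["a", "b", "e", "f"],            # #1
    ["c", "d", "c"],                 # #2
    ["a", "e", "f", "g"],            # #3
    ["a", "b", "g"],                 # #4
]

# CF: total number of occurrences of each word across the corpus.
cf = Counter(w for doc in docs for w in doc)
# DF: number of documents containing each word (each doc counted once).
df = Counter(w for doc in docs for w in set(doc))


def trim(docs, keep):
    """Drop every word for which keep(word) is False."""
    return [[w for w in doc if keep(w)] for doc in docs]


by_cf = trim(docs, lambda w: cf[w] >= 3)  # behaves like min_cf=3
by_df = trim(docs, lambda w: df[w] >= 3)  # behaves like min_df=3
print(by_df)  # [['a', 'b', 'e'], ['a', 'b', 'e'], [], ['a', 'e'], ['a', 'b']]
```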

Model Save and Load

tomotopy provides save and load methods for each topic model class, so you can save a model to a file at any time and load it back from that file later.

import tomotopy as tp

mdl = tp.HDPModel()
for line in open('sample.txt'):
    mdl.add_doc(line.strip().split())

for i in range(0, 100, 10):
    mdl.train(10)
    print('Iteration: {}\tLog-likelihood: {}'.format(i, mdl.ll_per_word))

# save into file
mdl.save('sample_hdp_model.bin')

# load from file
mdl = tp.HDPModel.load('sample_hdp_model.bin')
for k in range(mdl.k):
    if not mdl.is_live_topic(k):
        continue
    print('Top 10 words of topic #{}'.format(k))
    print(mdl.get_topic_words(k, top_n=10))

# the saved model is an HDP model,
# so loading it with LDAModel will raise an exception
mdl = tp.LDAModel.load('sample_hdp_model.bin')

When you load a model from a file, the model type stored in the file must match the class whose load method you call.

See more at tomotopy.LDAModel.save and tomotopy.LDAModel.load methods.
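The type-matching rule can be pictured with a toy format that writes a model-type tag before the model data and checks it on load. This is a hypothetical sketch: the `ToyModel` classes, the 4-byte tags and the use of `pickle` are all assumptions, and tomotopy's real binary format is not described here.

```python
import io
import pickle


class ToyModel:
    """Hypothetical base class; NOT tomotopy's file format."""
    TAG = b"TOY "  # 4-byte model-type tag written at the start of the file

    def __init__(self, params=None):
        self.params = params or {}

    def save(self, fileobj):
        fileobj.write(self.TAG)
        pickle.dump(self.params, fileobj)

    @classmethod
    def load(cls, fileobj):
        tag = fileobj.read(4)
        if tag != cls.TAG:  # stored type must match the loading class
            raise ValueError("file holds %r, not %s" % (tag, cls.__name__))
        # pickle is only safe for trusted files; fine for this illustration.
        return cls(pickle.load(fileobj))


class ToyLDA(ToyModel):
    TAG = b"LDA "


class ToyHDP(ToyModel):
    TAG = b"HDP "


buf = io.BytesIO()
ToyHDP({"k": 3}).save(buf)
buf.seek(0)
print(ToyHDP.load(buf).params)  # {'k': 3}
```

Loading the same buffer with `ToyLDA.load` raises `ValueError`, mirroring the exception in the example above.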

Documents in the Model and out of the Model

Topic models can be used for two major purposes. The basic one is to discover topics in a set of documents by training a model, and the more advanced one is to infer topic distributions for unseen documents using a trained model.

We call a document used for the former purpose (model training) a document in the model, and a document used for the latter purpose (unseen during training) a document out of the model.

In tomotopy, these two kinds of documents are created differently. A document in the model is created by the tomotopy.LDAModel.add_doc method. add_doc can be called only before tomotopy.LDAModel.train starts. In other words, once train has been called, add_doc cannot add documents to the model, because the set of documents used for training has become fixed.

To access the instance of the created document, use tomotopy.LDAModel.docs as follows:

mdl = tp.LDAModel(k=20)
idx = mdl.add_doc(words)
if idx < 0:
    raise RuntimeError("Failed to add doc")
doc_inst = mdl.docs[idx]
# doc_inst is an instance of the added document

A document out of the model is created by the tomotopy.LDAModel.make_doc method. make_doc should be called only after train has started. If you use make_doc before the set of training documents has become fixed, you may get wrong results. Since make_doc returns the instance directly, you can use its return value for other manipulations.

mdl = tp.LDAModel(k=20)
# add_doc ...
mdl.train(100)
doc_inst = mdl.make_doc(unseen_doc)
# doc_inst is an instance of the unseen document
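The ordering rules above (add_doc only before training, make_doc after it) amount to a small state machine. The toy class below is a hypothetical sketch of those rules, not tomotopy's code. As an assumption made for clarity, it raises `RuntimeError` in both misuse cases, whereas the text only says that tomotopy's make_doc may give wrong results when called too early.

```python
class ToyTopicModel:
    """Hypothetical sketch of the add_doc / train / make_doc lifecycle."""

    def __init__(self):
        self.docs = []         # documents in the model
        self.trained = False

    def add_doc(self, words):
        if self.trained:
            raise RuntimeError("training set is fixed once train() has run")
        self.docs.append(list(words))
        return len(self.docs) - 1  # index usable with self.docs[idx]

    def train(self, iterations):
        self.trained = True        # actual sampling omitted in this sketch

    def make_doc(self, words):
        if not self.trained:
            raise RuntimeError("call train() before make_doc()")
        return list(words)         # document out of the model: not stored


m = ToyTopicModel()
idx = m.add_doc(["apple", "banana"])
m.train(100)
unseen = m.make_doc(["cherry"])
print(idx, len(m.docs), unseen)  # 0 1 ['cherry']
```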

Inference for Unseen Documents

If a new document is created by tomotopy.LDAModel.make_doc, the model can infer its topic distribution. Inference for unseen documents is performed with the tomotopy.LDAModel.infer method.

mdl = tp.LDAModel(k=20)
# add_doc ...
mdl.train(100)
doc_inst = mdl.make_doc(unseen_doc)
topic_dist, ll = mdl.infer(doc_inst)
print("Topic Distribution for Unseen Docs: ", topic_dist)
print("Log-likelihood of inference: ", ll)

The infer method accepts either a single tomotopy.Document instance or a list of tomotopy.Document instances. See tomotopy.LDAModel.infer for more details.
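How infer works internally is not described here. One standard approach for LDA, often called fold-in, is sketched below as an assumption: the trained topic-word probabilities `phi` stay fixed, only the unseen document's topic assignments are Gibbs-sampled, and the final counts are turned into a smoothed topic distribution. The function name, parameters and toy `phi` are hypothetical.

```python
import random


def infer_topic_dist(doc, phi, alpha=0.1, n_iter=100, seed=0):
    """Fold-in Gibbs sampling for one unseen document (illustration only).

    doc: list of word ids; phi[k][w] = p(word w | topic k), assumed to be
    strictly positive (i.e. already smoothed) and fixed during inference.
    """
    rng = random.Random(seed)
    topics = range(len(phi))
    z = [rng.randrange(len(phi)) for _ in doc]  # random initial topics
    ndk = [0] * len(phi)
    for k in z:
        ndk[k] += 1
    for _ in range(n_iter):
        for i, w in enumerate(doc):
            ndk[z[i]] -= 1                      # remove current token
            weights = [(ndk[k] + alpha) * phi[k][w] for k in topics]
            z[i] = rng.choices(topics, weights=weights)[0]
            ndk[z[i]] += 1                      # add it back
    total = len(doc) + len(phi) * alpha
    return [(n + alpha) / total for n in ndk]   # sums to 1


phi = [[0.45, 0.45, 0.05, 0.05],   # topic 0 favors words 0 and 1
       [0.05, 0.05, 0.45, 0.45]]   # topic 1 favors words 2 and 3
print(infer_topic_dist([0, 1, 0, 1, 0], phi))
```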

Parallel Sampling Algorithms

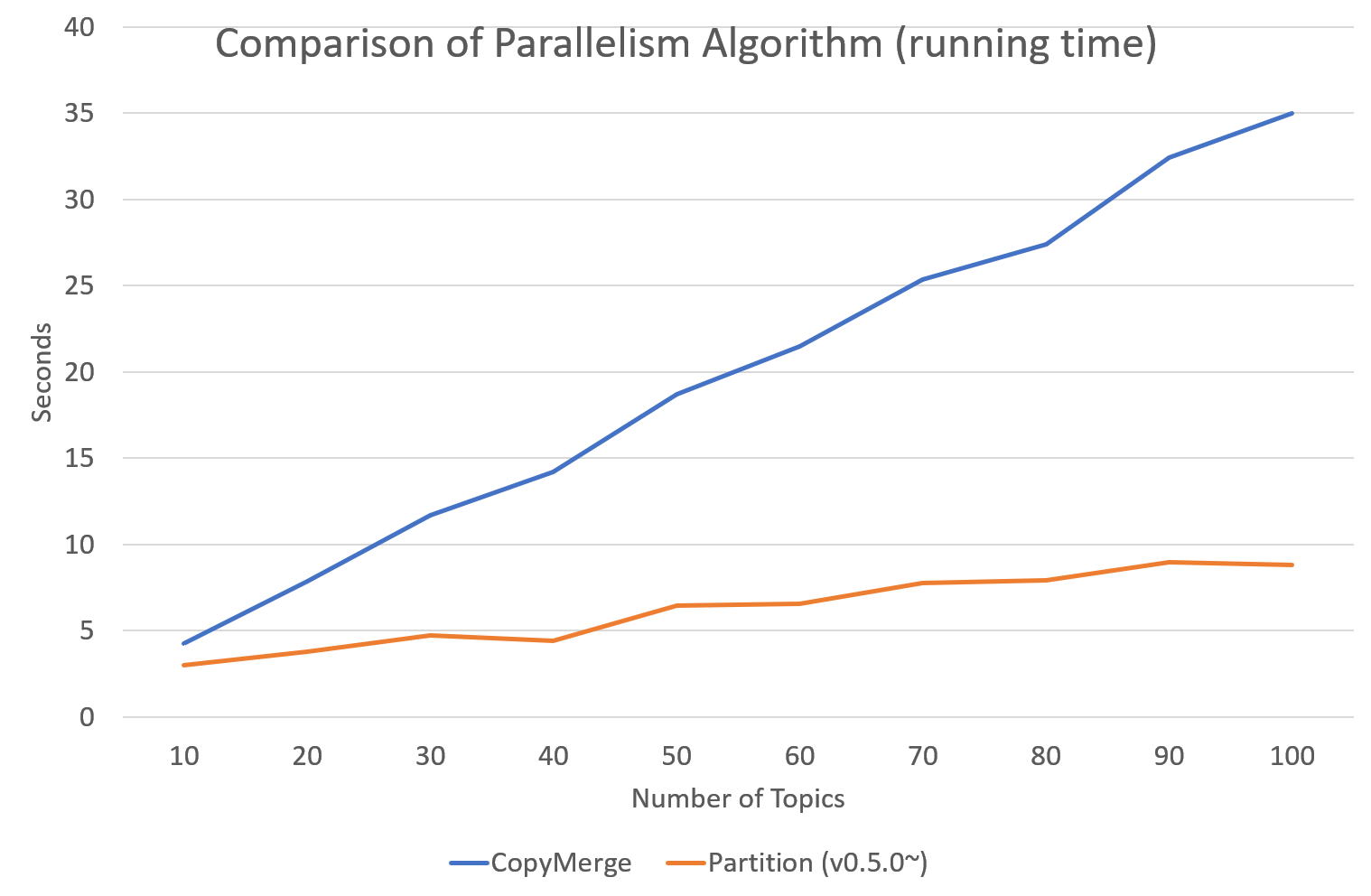

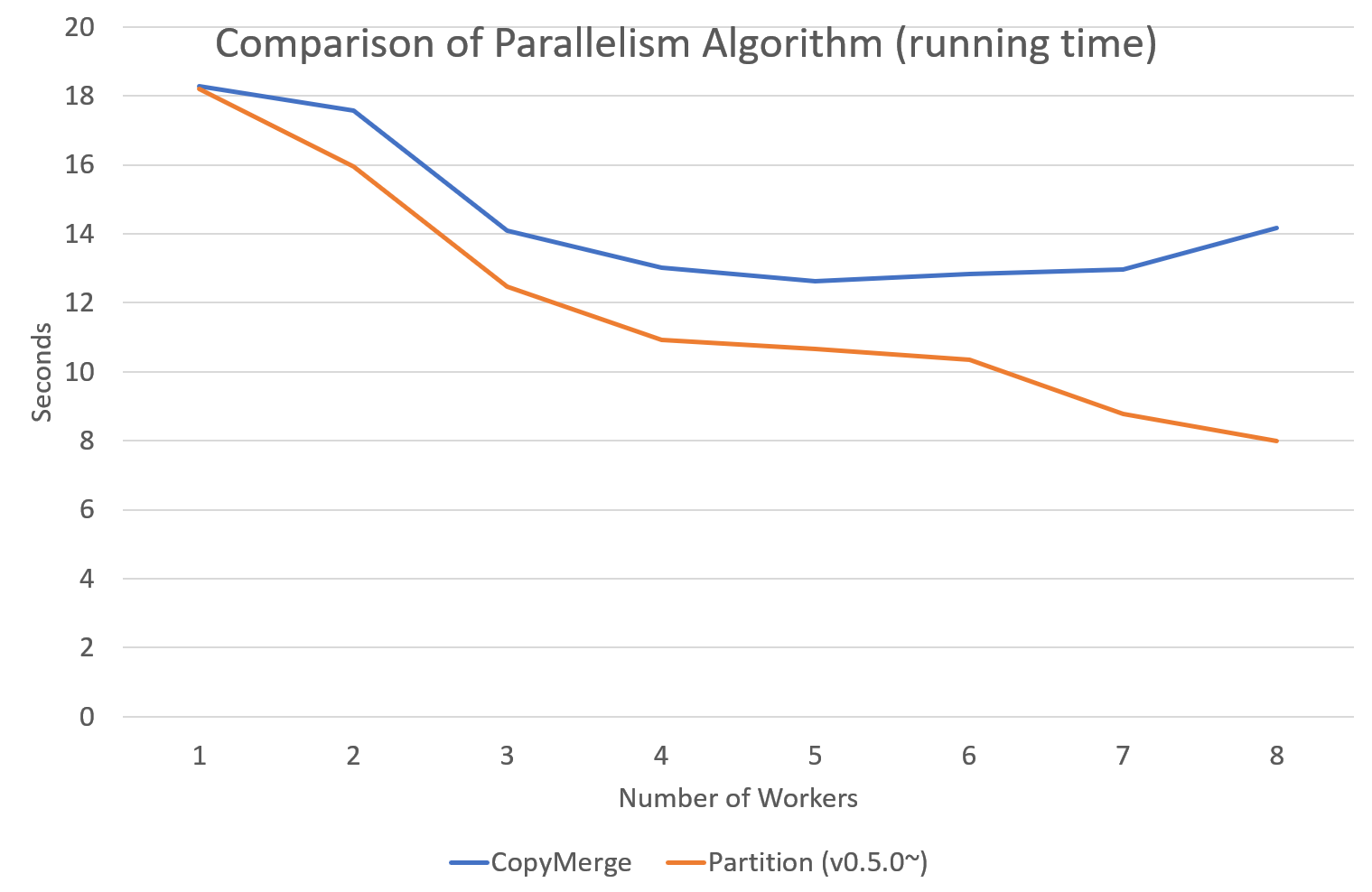

Since version 0.5.0, tomotopy lets you choose a parallelism algorithm. The algorithm provided in version 0.4.2 and earlier is COPY_MERGE, which is available for all topic models. The new algorithm PARTITION, available since 0.5.0, generally makes training faster and more memory-efficient, but it is not available for all topic models.
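The name COPY_MERGE suggests a scheme in which each worker samples against its own copy of the shared count tables and the changes are merged afterwards. The sketch below illustrates only that general merge step on a flat list of counts; it is an assumption about the idea behind the name, not tomotopy's implementation, and `copy_merge` is a hypothetical helper.

```python
def copy_merge(global_counts, worker_updates):
    """Illustrative copy-and-merge step for parallel count-based sampling.

    Each worker starts from a private copy of global_counts and applies its
    own (index, delta) count changes; the per-worker differences are then
    summed back into one merged table.
    """
    merged = list(global_counts)
    for updates in worker_updates:
        local = list(global_counts)      # the worker's private copy
        for idx, delta in updates:
            local[idx] += delta
        for i, (new, old) in enumerate(zip(local, global_counts)):
            merged[i] += new - old       # merge only the change
    return merged


# Worker 1 moves a token from slot 0 to slot 1; worker 2 from slot 2 to 0.
print(copy_merge([5, 5, 5], [[(0, -1), (1, 1)], [(2, -1), (0, 1)]]))  # [5, 6, 4]
```

The private copies avoid locking during sampling, at the cost of one copy of the tables per worker, which is consistent with the text's remark that PARTITION is more memory-efficient.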

The following chart shows the speed difference between the two algorithms depending on the number of topics and the number of workers.

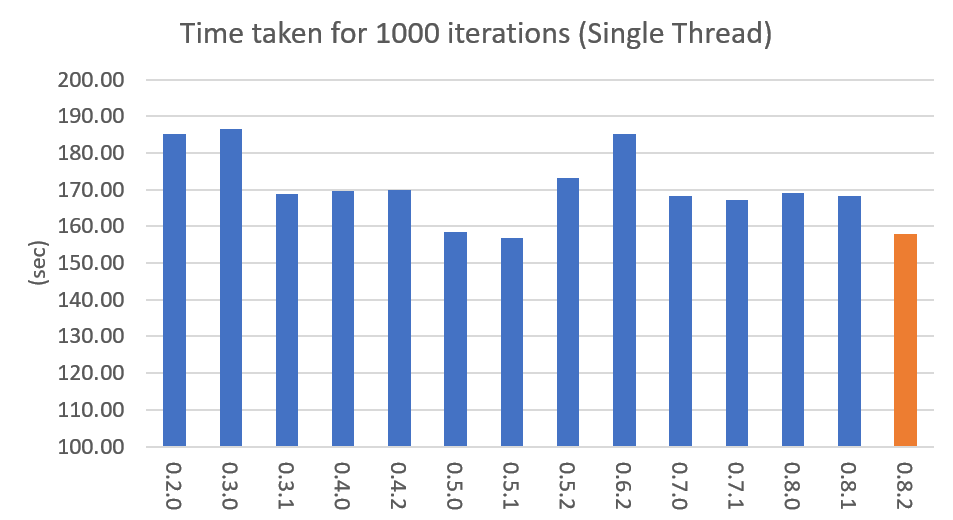

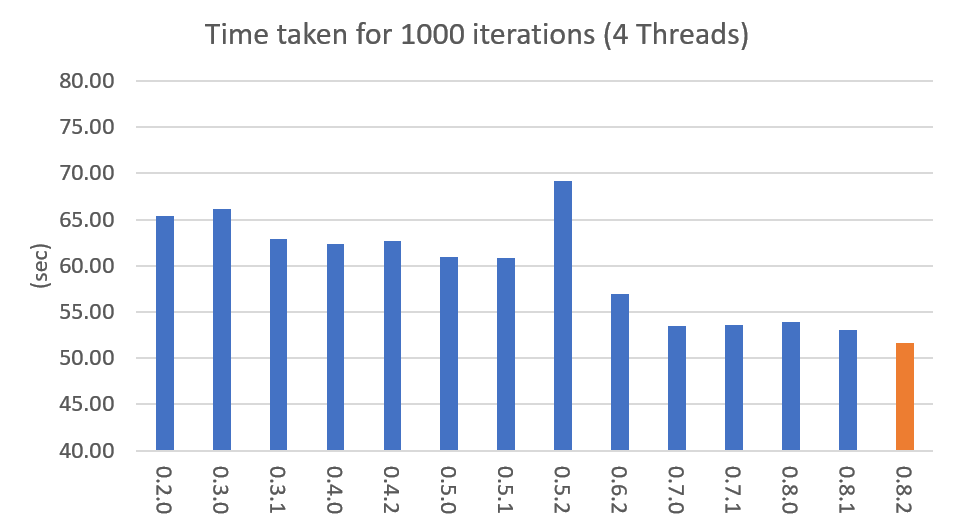

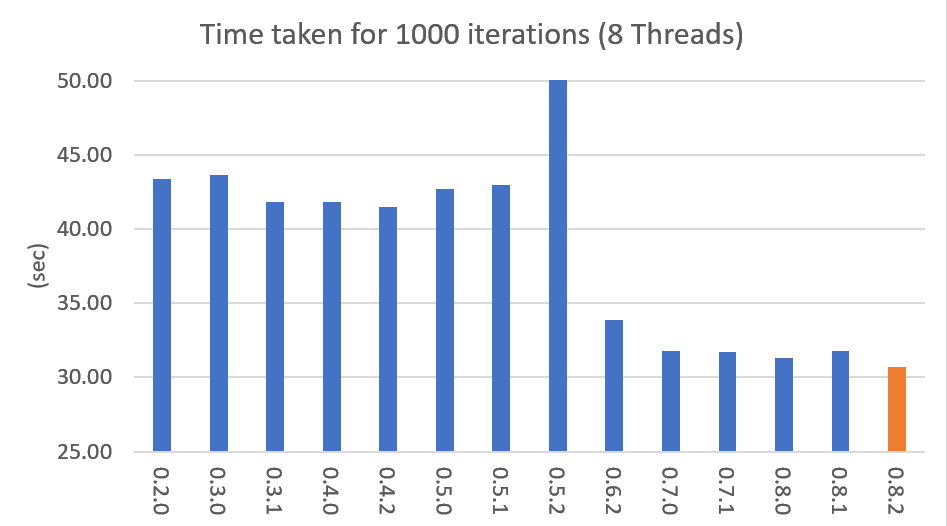

Performance by Version

Performance changes across versions are shown in the following graph. We measured the time taken to train an LDA model for 1000 iterations. (Docs: 11314, Vocab: 60382, Words: 2364724, Intel Xeon Gold 5120 @2.2GHz)

Pinning Topics Using Word Priors

Since version 0.6.0, a new method, tomotopy.LDAModel.set_word_prior, has been available. It allows you to control the prior of a word for each topic. For example, the following code sets the weight of the word ‘church’ to 1.0 in topic 0 and to 0.1 in all other topics. This means that, a priori, the word ‘church’ is 10 times more likely to be assigned to topic 0 than to any other topic. As a result, most occurrences of ‘church’ are assigned to topic 0, so topic 0 ends up containing many words related to ‘church’. This lets you place particular topics at specific topic numbers.

import tomotopy as tp

mdl = tp.LDAModel(k=20)
# add documents into `mdl`

# setting word prior
mdl.set_word_prior('church', [1.0 if k == 0 else 0.1 for k in range(20)])

See word_prior_example in example.py for more details.
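When several words should be pinned to different topics, the same list-comprehension pattern can be generalized. The helper below is a hypothetical convenience that only builds the prior lists; applying them still goes through set_word_prior(word, prior) on an actual model, which is shown as a comment because it needs tomotopy installed and documents added.

```python
def pinned_priors(seed_words, k, high=1.0, low=0.1):
    """Build {word: per-topic prior list}, pinning each word to one topic.

    seed_words maps a word to the topic index it should favor; every other
    topic gets the lower weight, as in the 'church' example.
    """
    return {
        word: [high if t == topic else low for t in range(k)]
        for word, topic in seed_words.items()
    }


priors = pinned_priors({'church': 0, 'money': 3}, k=20)
print(priors['church'][:3])  # [1.0, 0.1, 0.1]

# With a tomotopy model `mdl` whose documents have been added:
# for word, prior in priors.items():
#     mdl.set_word_prior(word, prior)
```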

Examples

You can find example Python code for tomotopy at https://github.com/bab2min/tomotopy/blob/master/examples/ .

You can also get the data file used in the example code at https://drive.google.com/file/d/18OpNijd4iwPyYZ2O7pQoPyeTAKEXa71J/view .

License

tomotopy is licensed under the terms of the MIT License, meaning you can use it for any reasonable purpose and remain in complete ownership of all the documentation you produce.

History

- 0.10.1 (2021-02-14)

An issue was fixed where tomotopy.utils.Corpus.extract_ngrams crashed with empty input.

An issue was fixed where tomotopy.LDAModel.infer raised an exception with valid input.

An issue was fixed where tomotopy.HLDAModel.infer generates wrong tomotopy.Document.path.

A new parameter, freeze_topics, was added to tomotopy.HLDAModel.train, so you can control whether new topics are created during training.

- 0.10.0 (2020-12-19)

The interfaces of tomotopy.utils.Corpus and tomotopy.LDAModel.docs were unified. Now you can access the documents in a corpus in the same manner.

__getitem__ of tomotopy.utils.Corpus was improved. In addition to indexing by int, indexing by Iterable[int] and slicing are now supported, as is indexing by uid.

New methods tomotopy.utils.Corpus.extract_ngrams and tomotopy.utils.Corpus.concat_ngrams were added. They extract n-gram collocations using PMI and concatenate them into single words.

A new method tomotopy.LDAModel.add_corpus was added, and tomotopy.LDAModel.infer can receive corpus as input.

A new module, tomotopy.coherence, was added. It provides a way to calculate the coherence of a model.

A parameter window_size was added to tomotopy.label.FoRelevance.

An issue was fixed where NaN often occurs when training tomotopy.HDPModel.

Python 3.9 is now supported.

The dependency on py-cpuinfo was removed and module initialization was improved.

- 0.9.1 (2020-08-08)

Memory leaks in version 0.9.0 were fixed.

tomotopy.CTModel.summary() was fixed.

- 0.9.0 (2020-08-04)

The tomotopy.LDAModel.summary() method, which prints human-readable summary of the model, has been added.

The package's random number generator has been replaced with [EigenRand]. It speeds up random number generation and resolves result differences between platforms.

Due to the above, even with the same seed, training results may differ from versions before 0.9.0.

Fixed a training error in tomotopy.HDPModel.

tomotopy.DMRModel.alpha now shows Dirichlet prior of per-document topic distribution by metadata.

tomotopy.DTModel.get_count_by_topics() has been modified to return a 2-dimensional ndarray.

tomotopy.DTModel.alpha has been modified to return the same value as tomotopy.DTModel.get_alpha().

Fixed an issue where the metadata value could not be obtained for the document of tomotopy.GDMRModel.

tomotopy.HLDAModel.alpha now shows Dirichlet prior of per-document depth distribution.

tomotopy.LDAModel.global_step has been added.

tomotopy.MGLDAModel.get_count_by_topics() now returns the word count for both global and local topics.

tomotopy.PAModel.alpha, tomotopy.PAModel.subalpha, and tomotopy.PAModel.get_count_by_super_topic() have been added.

[EigenRand]: https://github.com/bab2min/EigenRand

- 0.8.2 (2020-07-14)

New properties tomotopy.DTModel.num_timepoints and tomotopy.DTModel.num_docs_by_timepoint have been added.

A bug that caused different results on different platforms even with the same seed was partially fixed. As a result of this fix, 32-bit tomotopy now yields training results that differ from earlier versions.

- 0.8.1 (2020-06-08)

A bug where tomotopy.LDAModel.used_vocabs returned an incorrect value was fixed.

Now tomotopy.CTModel.prior_cov returns a covariance matrix with shape [k, k].

Now tomotopy.CTModel.get_correlations with empty arguments returns a correlation matrix with shape [k, k].

- 0.8.0 (2020-06-06)

Since NumPy was introduced in tomotopy, many methods and properties of tomotopy now return numpy.ndarray instead of list.

tomotopy has a new dependency: NumPy >= 1.10.0.

A wrong estimation of tomotopy.HDPModel.infer was fixed.

A new method for converting HDPModel to LDAModel was added.

New properties including tomotopy.LDAModel.used_vocabs, tomotopy.LDAModel.used_vocab_freq and tomotopy.LDAModel.used_vocab_df were added into topic models.

A new g-DMR topic model(tomotopy.GDMRModel) was added.

An error at initializing tomotopy.label.FoRelevance in macOS was fixed.

An error that occurred when using tomotopy.utils.Corpus created without raw parameters was fixed.

- 0.7.1 (2020-05-08)

tomotopy.Document.path was added for tomotopy.HLDAModel.

A memory corruption bug in tomotopy.label.PMIExtractor was fixed.

A compile error in gcc 7 was fixed.

- 0.7.0 (2020-04-18)

tomotopy.DTModel was added into the package.

A bug in tomotopy.utils.Corpus.save was fixed.

A new method tomotopy.Document.get_count_vector was added into Document class.

Linux wheels now use manylinux2010, and an additional optimization is applied.

- 0.6.2 (2020-03-28)

A critical bug related to save and load was fixed. Versions 0.6.0 and 0.6.1 have been removed from releases.

- 0.6.1 (2020-03-22) (removed)

A bug related to module loading was fixed.

- 0.6.0 (2020-03-22) (removed)

tomotopy.utils.Corpus class that manages multiple documents easily was added.

tomotopy.LDAModel.set_word_prior method that controls word-topic priors of topic models was added.

A new argument min_df that filters words based on document frequency was added into every topic model’s __init__.

tomotopy.label, a submodule for topic labeling, was added. Currently, only tomotopy.label.FoRelevance is provided.

- 0.5.2 (2020-03-01)

A segmentation fault problem was fixed in tomotopy.LLDAModel.add_doc.

A bug where infer of tomotopy.HDPModel sometimes crashed the program was fixed.

A crash in tomotopy.LDAModel.infer with ps=tomotopy.ParallelScheme.PARTITION, together=True was fixed.

- 0.5.1 (2020-01-11)

A bug where tomotopy.SLDAModel.make_doc didn’t support missing values for y was fixed.

Now tomotopy.SLDAModel fully supports missing values for response variables y. Documents with missing values (NaN) are included in modeling topic, but excluded from regression of response variables.

- 0.5.0 (2019-12-30)

Now tomotopy.PAModel.infer returns both the topic distribution and the sub-topic distribution.

New methods get_sub_topics and get_sub_topic_dist were added into tomotopy.Document. (for PAModel)

A new parameter, parallel, was added to the tomotopy.LDAModel.train and tomotopy.LDAModel.infer methods. You can select a parallelism algorithm by changing this parameter.

tomotopy.ParallelScheme.PARTITION, a new algorithm, was added. It works efficiently when the number of workers is large, or when the number of topics or the vocabulary size is big.

A bug where rm_top didn’t work when min_cf < 2 was fixed.

- 0.4.2 (2019-11-30)

Wrong topic assignments of tomotopy.LLDAModel and tomotopy.PLDAModel were fixed.

Readable __repr__ of tomotopy.Document and tomotopy.Dictionary was implemented.

- 0.4.1 (2019-11-27)

A bug in the init function of tomotopy.PLDAModel was fixed.

- 0.4.0 (2019-11-18)

New models including tomotopy.PLDAModel and tomotopy.HLDAModel were added into the package.

- 0.3.1 (2019-11-05)

An issue where get_topic_dist() returned an incorrect value when min_cf or rm_top was set was fixed.

The return value of get_topic_dist() of tomotopy.MGLDAModel document was fixed to include local topics.

The estimation speed with tw=ONE was improved.

- 0.3.0 (2019-10-06)

A new model, tomotopy.LLDAModel was added into the package.

A crashing issue of HDPModel was fixed.

Since hyperparameter estimation for HDPModel was implemented, the results of HDPModel may differ from previous versions.

If you want to turn off hyperparameter estimation of HDPModel, set optim_interval to zero.

- 0.2.0 (2019-08-18)

New models including tomotopy.CTModel and tomotopy.SLDAModel were added into the package.

A new parameter option rm_top was added for all topic models.

The problems in the save and load methods for PAModel and HPAModel were fixed.

An occasional crash when loading HDPModel was fixed.

The problem that ll_per_word was calculated incorrectly when min_cf > 0 was fixed.

- 0.1.6 (2019-08-09)

Compilation errors with clang on macOS were fixed.

- 0.1.4 (2019-08-05)

An issue that occurred when add_doc received an empty list as input was fixed.

An issue where tomotopy.PAModel.get_topic_words didn’t extract the word distribution of subtopics was fixed.

- 0.1.3 (2019-05-19)

The parameter min_cf and its stopword-removing function were added for all topic models.

- 0.1.0 (2019-05-12)

First version of tomotopy


Download files


Source Distribution

Built Distributions


File details

Details for the file tomotopy-0.10.1.tar.gz.

File metadata

- Download URL: tomotopy-0.10.1.tar.gz

- Upload date:

- Size: 1.1 MB

- Tags: Source

- Uploaded using Trusted Publishing? No

- Uploaded via: twine/1.15.0 pkginfo/1.7.0 requests/2.25.1 setuptools/50.3.2 requests-toolbelt/0.9.1 tqdm/4.56.2 CPython/3.5.10

File hashes

| Algorithm | Hash digest |
|---|---|
| SHA256 | 6883d9c7079609c2c98f56aa611480d9b2a39ce455d9cf985d0a9618ce155a10 |
| MD5 | 96d2144be6ae6a58bc9f1d1ccdf4ac52 |
| BLAKE2b-256 | 6d87d6fb4b3005c47a6d09ae9ba6ef3374203114ef70854459a6b0081f9326b8 |

File details

Details for the file tomotopy-0.10.1-cp39-cp39-win_amd64.whl.

File metadata

- Download URL: tomotopy-0.10.1-cp39-cp39-win_amd64.whl

- Upload date:

- Size: 5.0 MB

- Tags: CPython 3.9, Windows x86-64

- Uploaded using Trusted Publishing? No

- Uploaded via: twine/3.3.0 pkginfo/1.7.0 requests/2.25.1 setuptools/53.0.0 requests-toolbelt/0.9.1 tqdm/4.56.2 CPython/3.9.1

File hashes

| Algorithm | Hash digest |
|---|---|
| SHA256 | 3e9befece4392965a73b3b237c46bf5df8f55ea50f2033f6b97c18938f478ad8 |
| MD5 | 85c995197605efa5efb1564a1e086868 |
| BLAKE2b-256 | 529902e09deb948d3971e4bd017382d7ad739f97ff33ca2ea842add48b013bad |

File details

Details for the file tomotopy-0.10.1-cp39-cp39-win32.whl.

File metadata

- Download URL: tomotopy-0.10.1-cp39-cp39-win32.whl

- Upload date:

- Size: 3.0 MB

- Tags: CPython 3.9, Windows x86

- Uploaded using Trusted Publishing? No

- Uploaded via: twine/3.3.0 pkginfo/1.7.0 requests/2.25.1 setuptools/53.0.0 requests-toolbelt/0.9.1 tqdm/4.56.2 CPython/3.9.1

File hashes

| Algorithm | Hash digest |
|---|---|
| SHA256 | 11a824d33ae3cb7579d762e7b14bd5bd4bcb8d148aaec47e8e223b5dac51cf49 |
| MD5 | a96756a2c919f9d45879028c6cd918d5 |
| BLAKE2b-256 | 458a3dd1b9c2e3537a943dbe6ef2476009d2e682c605ed43fc6eda52d664c680 |

File details

Details for the file tomotopy-0.10.1-cp39-cp39-manylinux2010_x86_64.whl.

File metadata

- Download URL: tomotopy-0.10.1-cp39-cp39-manylinux2010_x86_64.whl

- Upload date:

- Size: 15.1 MB

- Tags: CPython 3.9, manylinux: glibc 2.12+ x86-64

- Uploaded using Trusted Publishing? No

- Uploaded via: twine/3.3.0 pkginfo/1.7.0 requests/2.25.1 setuptools/47.1.1 requests-toolbelt/0.9.1 tqdm/4.56.2 CPython/3.8.3

File hashes

| Algorithm | Hash digest |
|---|---|
| SHA256 | 1930292a61767ef83d8e11f3e07ad5816a8c5a4f1a21d4dd18a842977797bb00 |
| MD5 | 3cd5ef5d053bf784eb4fe05bec69afcf |
| BLAKE2b-256 | 58cde6737d95d6a3dd87a01a4976699471953255c0d8ef261d145070bec0fcbe |

File details

Details for the file tomotopy-0.10.1-cp39-cp39-macosx_10_14_x86_64.whl.

File metadata

- Download URL: tomotopy-0.10.1-cp39-cp39-macosx_10_14_x86_64.whl

- Upload date:

- Size: 13.0 MB

- Tags: CPython 3.9, macOS 10.14+ x86-64

- Uploaded using Trusted Publishing? No

- Uploaded via: twine/3.3.0 pkginfo/1.7.0 requests/2.25.1 setuptools/49.2.1 requests-toolbelt/0.9.1 tqdm/4.56.2 CPython/3.9.1

File hashes

| Algorithm | Hash digest |
|---|---|
| SHA256 | 05abf2b753e51356fcc281c3fe5d1c8f5d9e0cb8650d0dc993abdf56687a90c6 |
| MD5 | 78e0c55a52f0be0263c309a671aa2cab |
| BLAKE2b-256 | e2e31ad0bbe26ae57399e7efedff753f0cdd8a5a686314e17bde8fc8bd3a18a3 |

File details

Details for the file tomotopy-0.10.1-cp38-cp38-win_amd64.whl.

File metadata

- Download URL: tomotopy-0.10.1-cp38-cp38-win_amd64.whl

- Upload date:

- Size: 5.0 MB

- Tags: CPython 3.8, Windows x86-64

- Uploaded using Trusted Publishing? No

- Uploaded via: twine/3.3.0 pkginfo/1.7.0 requests/2.25.1 setuptools/53.0.0 requests-toolbelt/0.9.1 tqdm/4.56.2 CPython/3.8.7

File hashes

| Algorithm | Hash digest |
|---|---|
| SHA256 | 8737bbe962f5309079df13c753d29e24c92fb55134b8ac95cc63a5947638c729 |
| MD5 | 9b8cbaff65adf9ab1d65ee02ac336494 |
| BLAKE2b-256 | fe995e31e78bfe99a673969f6c969ee551471a520929ea24fbbd45970a5d2734 |

File details

Details for the file tomotopy-0.10.1-cp38-cp38-win32.whl.

File metadata

- Download URL: tomotopy-0.10.1-cp38-cp38-win32.whl

- Upload date:

- Size: 3.0 MB

- Tags: CPython 3.8, Windows x86

- Uploaded using Trusted Publishing? No

- Uploaded via: twine/3.3.0 pkginfo/1.7.0 requests/2.25.1 setuptools/53.0.0 requests-toolbelt/0.9.1 tqdm/4.56.2 CPython/3.8.7

File hashes

| Algorithm | Hash digest |
|---|---|
| SHA256 | a86f652bab600343455432294f4544f031170ed14a4d4ea1f320eea2dbd9595b |
| MD5 | 9b15f7eb6d2dd7325d300a9759725f26 |
| BLAKE2b-256 | 30b3f55764d9670fa67f4aa2b36ccfa757dc808e48f6898a6751c9d479e729e7 |

File details

Details for the file tomotopy-0.10.1-cp38-cp38-manylinux2010_x86_64.whl.

File metadata

- Download URL: tomotopy-0.10.1-cp38-cp38-manylinux2010_x86_64.whl

- Upload date:

- Size: 15.1 MB

- Tags: CPython 3.8, manylinux: glibc 2.12+ x86-64

- Uploaded using Trusted Publishing? No

- Uploaded via: twine/3.3.0 pkginfo/1.7.0 requests/2.25.1 setuptools/47.1.1 requests-toolbelt/0.9.1 tqdm/4.56.2 CPython/3.8.3

File hashes

| Algorithm | Hash digest |
|---|---|
| SHA256 | 2865ab1f4e78f553a151b6233ed297325449b3c1694b0657f87ab6ab9920d198 |
| MD5 | 3e001b6ac2f393528205a2cfa929cc3a |
| BLAKE2b-256 | 558dc3e1473925f5184f4834628408050a35126d9bffe2adcf77fc405e527206 |

File details

Details for the file tomotopy-0.10.1-cp38-cp38-macosx_10_14_x86_64.whl.

File metadata

- Download URL: tomotopy-0.10.1-cp38-cp38-macosx_10_14_x86_64.whl

- Upload date:

- Size: 13.0 MB

- Tags: CPython 3.8, macOS 10.14+ x86-64

- Uploaded using Trusted Publishing? No

- Uploaded via: twine/3.3.0 pkginfo/1.7.0 requests/2.25.1 setuptools/49.2.1 requests-toolbelt/0.9.1 tqdm/4.56.2 CPython/3.8.7

File hashes

| Algorithm | Hash digest |
|---|---|
| SHA256 | c4b7266346f0b67c6adbb62e051b1980c9ce28b3605cd8788ef26890c03266ab |
| MD5 | f10e00436f16caf38d077b0b06806958 |
| BLAKE2b-256 | d49671f340ae2ab44fbfb0c91c0024496278e39d2c3479c2533716b7991fe5c7 |

File details

Details for the file tomotopy-0.10.1-cp37-cp37m-win_amd64.whl.

File metadata

- Download URL: tomotopy-0.10.1-cp37-cp37m-win_amd64.whl

- Upload date:

- Size: 5.0 MB

- Tags: CPython 3.7m, Windows x86-64

- Uploaded using Trusted Publishing? No

- Uploaded via: twine/3.3.0 pkginfo/1.7.0 requests/2.25.1 setuptools/53.0.0 requests-toolbelt/0.9.1 tqdm/4.56.2 CPython/3.7.9

File hashes

| Algorithm | Hash digest |
|---|---|
| SHA256 | d1387b7b5a789304ea7b522b60f40292f781f639719d9c251620b5cd8ffeab6c |
| MD5 | f0d3f9c316f50a15ac0a80d7fc4ab404 |
| BLAKE2b-256 | 64906f9151eb3d214609e9662513797ee56e34046919746b47db979b4cffd12a |

File details

Details for the file tomotopy-0.10.1-cp37-cp37m-win32.whl.

File metadata

- Download URL: tomotopy-0.10.1-cp37-cp37m-win32.whl

- Upload date:

- Size: 3.0 MB

- Tags: CPython 3.7m, Windows x86

- Uploaded using Trusted Publishing? No

- Uploaded via: twine/3.3.0 pkginfo/1.7.0 requests/2.25.1 setuptools/53.0.0 requests-toolbelt/0.9.1 tqdm/4.56.2 CPython/3.7.9

File hashes

| Algorithm | Hash digest |
|---|---|
| SHA256 | 6046e1da6b4d5cff56a1bee57443618fc9dc6ec84bbaebb92dd4aa540d2c9c9d |
| MD5 | fef536d60e4389dd4685214948907047 |
| BLAKE2b-256 | 0baa8f45d4e368f6e0d703bd6a4f3c127f5cb7298d6c4438e6cdfe04fb5bbe1b |

File details

Details for the file tomotopy-0.10.1-cp37-cp37m-manylinux2010_x86_64.whl.

File metadata

- Download URL: tomotopy-0.10.1-cp37-cp37m-manylinux2010_x86_64.whl

- Upload date:

- Size: 15.1 MB

- Tags: CPython 3.7m, manylinux: glibc 2.12+ x86-64

- Uploaded using Trusted Publishing? No

- Uploaded via: twine/3.3.0 pkginfo/1.7.0 requests/2.25.1 setuptools/47.1.1 requests-toolbelt/0.9.1 tqdm/4.56.2 CPython/3.8.3

File hashes

| Algorithm | Hash digest |
|---|---|
| SHA256 | 063f4f38daa7fe14797661b748002a92ec7d2de1ddb794ad3b56a9823af09c3d |
| MD5 | 0ce3c14414a023a877709b5aef2ff44d |
| BLAKE2b-256 | d43544494c5d1a51b0eaded6f09e586471d87eabc397972d22f66f13d7c419ef |

File details

Details for the file tomotopy-0.10.1-cp37-cp37m-macosx_10_14_x86_64.whl.

File metadata

- Download URL: tomotopy-0.10.1-cp37-cp37m-macosx_10_14_x86_64.whl

- Upload date:

- Size: 13.0 MB

- Tags: CPython 3.7m, macOS 10.14+ x86-64

- Uploaded using Trusted Publishing? No

- Uploaded via: twine/3.3.0 pkginfo/1.7.0 requests/2.25.1 setuptools/47.1.0 requests-toolbelt/0.9.1 tqdm/4.56.2 CPython/3.7.9

File hashes

| Algorithm | Hash digest |
|---|---|
| SHA256 | 951ae94d9f588aff03f92338061c10e6b7835ce1e95ba2f2bb8d9ef0b5fa797f |
| MD5 | 91e730395248a807fd86951b48b1f09a |
| BLAKE2b-256 | a370a4f037eb838199121ebafca179f9f87d5bef40307b7b74acd915b3a4c97b |

File details

Details for the file tomotopy-0.10.1-cp36-cp36m-win_amd64.whl.

File metadata

- Download URL: tomotopy-0.10.1-cp36-cp36m-win_amd64.whl

- Upload date:

- Size: 5.0 MB

- Tags: CPython 3.6m, Windows x86-64

- Uploaded using Trusted Publishing? No

- Uploaded via: twine/3.3.0 pkginfo/1.7.0 requests/2.25.1 setuptools/53.0.0 requests-toolbelt/0.9.1 tqdm/4.56.2 CPython/3.6.8

File hashes

| Algorithm | Hash digest |
|---|---|
| SHA256 | d39517b94e2f3964d3b7ea829c98ee7dca84ddffbb87c012b31da46f24290b96 |
| MD5 | 6e0831850e099b4ba125ce68db277411 |
| BLAKE2b-256 | 07ce93914aa08583f9dab3fcec261897f68f7313ed499df2ee76cdb7d7c3b7ff |

File details

Details for the file tomotopy-0.10.1-cp36-cp36m-win32.whl.

File metadata

- Download URL: tomotopy-0.10.1-cp36-cp36m-win32.whl

- Upload date:

- Size: 3.0 MB

- Tags: CPython 3.6m, Windows x86

- Uploaded using Trusted Publishing? No

- Uploaded via: twine/3.3.0 pkginfo/1.7.0 requests/2.25.1 setuptools/53.0.0 requests-toolbelt/0.9.1 tqdm/4.56.2 CPython/3.6.8

File hashes

| Algorithm | Hash digest |
|---|---|
| SHA256 | a141a9c010cc52655dba8cd5b91cc057b5a7eaca266745457daef2e8a2a72a87 |
| MD5 | 5895152535a277218216a85b54793c31 |
| BLAKE2b-256 | 145b01c9b36c5d88ebb3c02001df64cf1bd9538d259283358620471874bff3c2 |

File details

Details for the file tomotopy-0.10.1-cp36-cp36m-manylinux2010_x86_64.whl.

File metadata

- Download URL: tomotopy-0.10.1-cp36-cp36m-manylinux2010_x86_64.whl

- Upload date:

- Size: 15.1 MB

- Tags: CPython 3.6m, manylinux: glibc 2.12+ x86-64

- Uploaded using Trusted Publishing? No

- Uploaded via: twine/3.3.0 pkginfo/1.7.0 requests/2.25.1 setuptools/47.1.1 requests-toolbelt/0.9.1 tqdm/4.56.2 CPython/3.8.3

File hashes

| Algorithm | Hash digest |
|---|---|
| SHA256 | 57190b808e952072ed255dc2fe67a2b33de3f40903ec10198f711755a5b260e2 |
| MD5 | 5e947e6d305d5acc7320130f85da00fe |
| BLAKE2b-256 | fa3a5d06ab881b6b1e8b963cfe1f5f79001d7e30a80e3b511464191e622895de |

File details

Details for the file tomotopy-0.10.1-cp36-cp36m-macosx_10_14_x86_64.whl.

File metadata

- Download URL: tomotopy-0.10.1-cp36-cp36m-macosx_10_14_x86_64.whl

- Upload date:

- Size: 13.0 MB

- Tags: CPython 3.6m, macOS 10.14+ x86-64

- Uploaded using Trusted Publishing? No

- Uploaded via: twine/3.3.0 pkginfo/1.7.0 requests/2.25.1 setuptools/40.6.2 requests-toolbelt/0.9.1 tqdm/4.56.2 CPython/3.6.12

File hashes

| Algorithm | Hash digest |
|---|---|
| SHA256 | 2bc90cd319ead4d716020bea6696bbaae0a40bd96dfc0459b8db93c5a1eb701a |
| MD5 | 1a397f887ff72b8387c59bf8f6cd0206 |
| BLAKE2b-256 | 77ab23d5dce79d2585c211dadccbf612f561766ef56d915380d3d82e18f691cf |

File details

Details for the file tomotopy-0.10.1-cp35-cp35m-win_amd64.whl.

File metadata

- Download URL: tomotopy-0.10.1-cp35-cp35m-win_amd64.whl

- Upload date:

- Size: 5.0 MB

- Tags: CPython 3.5m, Windows x86-64

- Uploaded using Trusted Publishing? No

- Uploaded via: twine/1.15.0 pkginfo/1.7.0 requests/2.25.1 setuptools/50.3.2 requests-toolbelt/0.9.1 tqdm/4.56.2 CPython/3.5.4

File hashes

| Algorithm | Hash digest |
|---|---|
| SHA256 | 7b4ac980936fa42ae5cbe620ab492e3e7e2ac9511b99790be5ee3b54b1de8bea |
| MD5 | 3f29841d3bf459ee103be134a22a1b9f |
| BLAKE2b-256 | 934d04aac30035df202a79b09a783d0fa6a4550b254901ccf051a433046dc2f6 |

File details

Details for the file tomotopy-0.10.1-cp35-cp35m-win32.whl.

File metadata

- Download URL: tomotopy-0.10.1-cp35-cp35m-win32.whl

- Upload date:

- Size: 3.0 MB

- Tags: CPython 3.5m, Windows x86

- Uploaded using Trusted Publishing? No

- Uploaded via: twine/1.15.0 pkginfo/1.7.0 requests/2.25.1 setuptools/50.3.2 requests-toolbelt/0.9.1 tqdm/4.56.2 CPython/3.5.4

File hashes

| Algorithm | Hash digest |
|---|---|
| SHA256 | 4c1d7c64314426f7b072360ed4b643a2a8accb32d6f1fc0fad95543d3e7022eb |
| MD5 | 7545706224bae2661d2ab3f90aee0a8d |
| BLAKE2b-256 | 3b9970f8db299f81bb9e4b04be8121f3fee54619dd2fb032134df124966e8768 |

File details

Details for the file tomotopy-0.10.1-cp35-cp35m-manylinux2010_x86_64.whl.

File metadata

- Download URL: tomotopy-0.10.1-cp35-cp35m-manylinux2010_x86_64.whl

- Upload date:

- Size: 15.1 MB

- Tags: CPython 3.5m, manylinux: glibc 2.12+ x86-64

- Uploaded using Trusted Publishing? No

- Uploaded via: twine/3.3.0 pkginfo/1.7.0 requests/2.25.1 setuptools/47.1.1 requests-toolbelt/0.9.1 tqdm/4.56.2 CPython/3.8.3

File hashes

| Algorithm | Hash digest |
|---|---|
| SHA256 | 77d5d9d4a2ff3d06dff47c6bc7b65c10dc5dec3cb57db16a28f5409fd8cc7f10 |
| MD5 | 356cd01b9c7f43796b5d1799c241993b |
| BLAKE2b-256 | 17815b342941c90d8e7345a98529b931d49405dd0a29ef4813d9e7b38102b19b |

File details

Details for the file tomotopy-0.10.1-cp35-cp35m-macosx_10_14_x86_64.whl.

File metadata

- Download URL: tomotopy-0.10.1-cp35-cp35m-macosx_10_14_x86_64.whl

- Upload date:

- Size: 13.0 MB

- Tags: CPython 3.5m, macOS 10.14+ x86-64

- Uploaded using Trusted Publishing? No

- Uploaded via: twine/1.15.0 pkginfo/1.7.0 requests/2.25.1 setuptools/28.8.0 requests-toolbelt/0.9.1 tqdm/4.56.2 CPython/3.5.10

File hashes

| Algorithm | Hash digest |
|---|---|
| SHA256 | 17e049fb9b921d1febb672167e26c7501413911e05f303829405bda88dded2cd |
| MD5 | 5e0824c2d6dc000cd83a3d46375a3e1b |
| BLAKE2b-256 | 22ceedf67d0740499049ff5848edbcf3004a96f0875ade62b5abc51752e2da9a |