Scrapers for extracting articles from Finnish journalistic media websites.

Finnish Media Scrapers

Scrapers for extracting articles from Finnish journalistic media websites by the University of Helsinki Human Sciences – Computing Interaction research group.

Included are scrapers for YLE, Helsingin Sanomat, Iltalehti and Iltasanomat. See below for limitations relating to individual sources.

Installation

Install the scripts (and Python module) using pip install finnish-media-scrapers. After this, the scripts should be usable from the command line, and the functionality importable from Python. Alternatively, if you have pipx and only want the command-line scripts, use pipx install finnish-media-scrapers instead.

General workflow

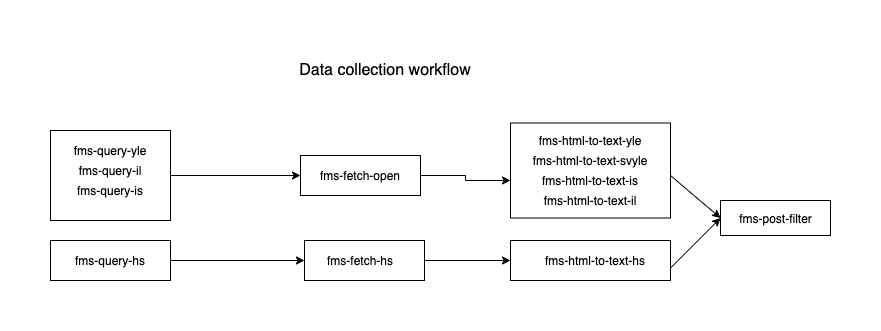

The general workflow for using the scrapers is as follows:

- Query YLE/HS/IL/IS APIs for matching articles using the scripts fms-query-{yle|hs|il|is}, which output all matching articles with links into CSVs.
- Fetch the matching articles using fms-fetch-{hs|open}. These save the articles as HTML files in a specified directory.
- Extract the plain text from the article HTML using fms-html-to-text-{yle|svyle|hs|il|is}.
- Optionally refilter the results using fms-post-filter.
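The query, fetch and extract steps can also be chained from Python. The following is a minimal sketch, not part of the package: the helper names `build_pipeline` and `run_pipeline` are hypothetical, and only the command-line flags shown in this README are used. It covers the sources fetched with fms-fetch-open; Helsingin Sanomat needs fms-fetch-hs with credentials and is left out.

```python
import shlex
import subprocess


def build_pipeline(source, query, date_from, date_to, prefix):
    """Return the commands of the query -> fetch -> extract workflow.

    `source` is one of "yle", "il" or "is". File and directory names are
    derived from `prefix` in the same way as in the README examples.
    """
    if source not in {"yle", "il", "is"}:
        raise ValueError(f"unsupported source for fms-fetch-open: {source}")
    csv_file = f"{prefix}.csv"
    html_dir = prefix
    text_dir = f"{prefix}-output"
    return [
        ["fms-query-" + source, "-f", date_from, "-t", date_to,
         "-o", csv_file, "-q", query],
        ["fms-fetch-open", "-i", csv_file, "-o", html_dir],
        ["fms-html-to-text-" + source, "-o", text_dir, html_dir],
    ]


def run_pipeline(commands, dry_run=True):
    """Print each command and, unless dry_run is set, execute it in order."""
    for command in commands:
        print(shlex.join(command))
        if not dry_run:
            subprocess.run(command, check=True)  # stop at the first failure


if __name__ == "__main__":
    # Dry run by default: only prints the three commands.
    run_pipeline(build_pipeline("yle", "SDP", "2020-02-16", "2020-02-18", "yle-sdp"))
```

Calling `run_pipeline(..., dry_run=False)` would actually invoke the scripts, which requires the package to be installed and on the PATH.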

When applying the workflow, note that all the sources use some kind of stemming in their search, so they often return spurious hits. Further, when searching for multiple words, the engines often search for any of the words instead of the complete phrase. The post-filtering script exists to counteract this by letting you refilter the results locally, more rigorously and uniformly.

At the same time, and equally importantly, the stemming used by a particular media outlet may not cover, e.g., all inflectional forms of a word. Thus, it often makes sense to query for at least all common inflected variants and merge the results. For a complete worked example of this kind of use, see the members_of_parliament folder, which demonstrates how to collect and count the articles in each media outlet that mention members of the Finnish Parliament.
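Merging the results of several variant queries mostly means concatenating the CSVs and dropping articles that were found more than once. The sketch below shows one way to do that with the standard library. It is an illustration under assumptions, not the approach used in the members_of_parliament folder: the function name `merge_query_results` is hypothetical, and the column name `url` is a guess, so check the header of your own query output and pass the right `key_column`.

```python
import csv


def merge_query_results(csv_paths, merged_path, key_column="url"):
    """Concatenate query CSVs, keeping the first row seen for each key.

    ASSUMPTION: all files share the same header and contain a column,
    named by `key_column`, that uniquely identifies an article.
    Returns the number of rows written.
    """
    if not csv_paths:
        raise ValueError("at least one input CSV is required")
    seen = set()
    fieldnames = None
    rows = []
    for path in csv_paths:
        with open(path, newline="", encoding="utf-8") as handle:
            reader = csv.DictReader(handle)
            if fieldnames is None:
                fieldnames = reader.fieldnames  # header of the first file
            for row in reader:
                key = row[key_column]
                if key not in seen:
                    seen.add(key)
                    rows.append(row)
    with open(merged_path, "w", newline="", encoding="utf-8") as handle:
        writer = csv.DictWriter(handle, fieldnames=fieldnames)
        writer.writeheader()
        writer.writerows(rows)
    return len(rows)
```

For example, after running one query per inflected form into separate files, `merge_query_results(["a.csv", "b.csv"], "merged.csv")` (hypothetical file names) would produce a single deduplicated CSV to feed into the fetch step.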

Apart from the scripts, the functionality of the package is also provided as a Python module that you can use programmatically. For the functionality provided this way, see the module documentation.

Media-specific instructions and caveats

Helsingin Sanomat

First, query the articles you want using fms-query-hs. For example, fms-query-hs -f 2020-02-16 -t 2020-02-18 -o hs-sdp.csv -q SDP.

For downloading articles, use fms-fetch-hs. This scraper requires a user id and password for Helsingin Sanomat. Technically, it uses pyppeteer to control a headless Chromium browser to log in and to ensure that the dynamically rendered content in HS articles is captured. To ensure a compatible Chromium, pyppeteer downloads an isolated version of Chromium for itself when the tool is first run, which takes about 150 MB of network traffic and disk space. For example: fms-fetch-hs -i hs-sdp.csv -o hs-sdp -u username -p password.

After fetching the articles, extract texts with e.g. fms-html-to-text-hs -o hs-sdp-output hs-sdp.

Known special considerations:

- The search engine used seems to be employing some sort of stemming/lemmatization, so e.g. the query string kok seems to match kokki, koko and koki.
- A single query can return at most 9,950 hits. This can be sidestepped by invoking the script multiple times with smaller query time spans.
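Splitting a long period into smaller query time spans can be scripted. The sketch below generates one fms-query-hs command per window, using only the -f, -t, -o and -q flags shown above. The helper names and the output file naming are hypothetical. It also assumes that both -f and -t are inclusive, which this README does not state; if articles from boundary days go missing, let the windows overlap by a day and deduplicate the results afterwards.

```python
from datetime import date, timedelta


def split_date_range(start, end, days_per_window):
    """Split the inclusive range [start, end] into consecutive windows."""
    if days_per_window < 1:
        raise ValueError("days_per_window must be at least 1")
    if end < start:
        raise ValueError("end must not be before start")
    windows = []
    window_start = start
    while window_start <= end:
        window_end = min(window_start + timedelta(days=days_per_window - 1), end)
        windows.append((window_start, window_end))
        window_start = window_end + timedelta(days=1)
    return windows


def query_commands(script, query, start, end, days_per_window, prefix):
    """Build one query command per window, each writing its own CSV."""
    return [
        [script, "-f", s.isoformat(), "-t", e.isoformat(),
         "-o", f"{prefix}-{s.isoformat()}.csv", "-q", query]
        for s, e in split_date_range(start, end, days_per_window)
    ]


if __name__ == "__main__":
    for command in query_commands("fms-query-hs", "SDP",
                                  date(2020, 1, 1), date(2020, 3, 31), 30, "hs-sdp"):
        print(" ".join(command))
```

The window length is a judgment call: it needs to be short enough that no single window exceeds the hit limit for the query in question.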

Yle

Example: first run fms-query-yle -f 2020-02-16 -t 2020-02-18 -o yle-sdp.csv -q SDP, then fms-fetch-open -i yle-sdp.csv -o yle-sdp, and finally fms-html-to-text-yle -o yle-sdp-output yle-sdp (or fms-html-to-text-svyle -o svyle-sdp-output svyle-sdp if the articles come from Svenska YLE).

Known special considerations:

- A single query can return at most 10,000 hits. This can be sidestepped by invoking the script multiple times with smaller query time spans.

Iltalehti

Example: first run fms-query-il -f 2020-02-16 -t 2020-02-18 -o il-sdp.csv -q SDP, then fms-fetch-open -i il-sdp.csv -o il-sdp, and finally fms-html-to-text-il -o il-sdp-output il-sdp.

Iltasanomat

Example: first run fms-query-is -f 2020-02-16 -t 2020-02-18 -o is-sdp.csv -q SDP, then fms-fetch-open -i is-sdp.csv -o is-sdp, and finally fms-html-to-text-is -o is-sdp-output is-sdp.

Known special considerations:

- The search engine used seems to be employing some sort of stemming/lemmatization, so e.g. the query string kok seems to match kokki, koko and koki.
- A single query can return at most 9,950 hits. This can be sidestepped by invoking the script multiple times with smaller query time spans.

Using the fms-post-filter script

For example, after collecting texts from Helsingin Sanomat with the example above, run:

fms-post-filter -i hs-sdp.csv -t hs-sdp-output/ -o hs-sdp-filtered.csv -q SDP

Here the -i parameter specifies the query output file, -t the folder containing the extracted texts, -o the output filename and -q the search word to filter by.

There is also an option -ci for making the filtering case-insensitive (default false).
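To make the effect of strict local filtering and of case sensitivity concrete, here is a small illustration. It is not the implementation of fms-post-filter, whose matching rules are not described in this README. It assumes plain substring matching, and the function names and sample texts are hypothetical.

```python
def contains_query(text, query, case_insensitive=False):
    """Return True if `query` occurs in `text` as an exact substring."""
    if case_insensitive:
        return query.casefold() in text.casefold()
    return query in text


def filter_texts(texts, query, case_insensitive=False):
    """Return the names of the texts that contain the whole query string."""
    return [
        name
        for name, text in texts.items()
        if contains_query(text, query, case_insensitive)
    ]


if __name__ == "__main__":
    sample = {
        "a.txt": "SDP:n puheenjohtaja",
        "b.txt": "sdp nousi gallupissa",
        "c.txt": "Kokki kokosi listan",
    }
    print(filter_texts(sample, "SDP"))
    print(filter_texts(sample, "SDP", case_insensitive=True))
```

With case-sensitive matching only the first sample text is kept. Ignoring case also keeps the second, and the third is dropped either way because it never contains the query string.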

Contact

For more information on the scrapers, please contact associate professor Eetu Mäkelä.

Development

To set up a development environment, you need poetry. Then, use poetry to install and manage the dependencies and build process (poetry install).

Hashes for finnish-media-scrapers-1.1.0.tar.gz

| Algorithm | Hash digest |
|---|---|
| SHA256 | 235233f55d663d04c9c2038494bc21744c5b6dd8991b44a31b08bd4e06db0a87 |
| MD5 | f4635264dbbc0d061bbdaff87d4a1cec |
| BLAKE2b-256 | 10cb16f87764790a8b5e038ae72d3afe80cce76b2da59cdf4ea32c646528d209 |

Hashes for finnish_media_scrapers-1.1.0-py3-none-any.whl

| Algorithm | Hash digest |
|---|---|
| SHA256 | 3b6ab7684484d8fa152c2817781fa1089ea82f52bac624d39768d5a9fdf8044c |
| MD5 | aef5bffec8b347f08f2fc3752858a730 |
| BLAKE2b-256 | ac28e1add56bfbabc1eb6e6416f70a4d8cea67b266b0bddea1e075866e34a6ed |