[WIP] onnx2tf

A self-made tool to convert ONNX files (NCHW) to TensorFlow format (NHWC). The purpose of this tool is to solve the problem of the massive number of Transpose operations that onnx-tensorflow (onnx-tf) inserts during conversion.

Key concept

- onnx-tensorflow is a very useful tool, but the performance of the generated TensorFlow models is significantly degraded because a large number of `Transpose` OPs are inserted before and after each OP during the format conversion from `NCHW` to `NHWC`. Therefore, I am building this tool myself as a derivative of onnx-tensorflow that does not insert `Transpose` OPs.

- It handles not only 4-dimensional inputs, such as `NCHW` to `NHWC`, but also inputs with 3, 5, or even more dimensions, for example `NCDHW` to `NDHWC`. However, since 1-D, 2-D, 3-D and 6-D inputs may produce patterns that are mechanically difficult to convert, it should be possible to give parameters to externally modify the tool's behavior.

- Immediately following a `Reshape` OP with dimensional compression and dimensional decompression, there is a 95% probability that the model transformation operation will be disrupted and errors will occur. For example, patterns such as `[1,200,200,5]` -> `[1,200,-1]` or `[10,20,30,40,50]` -> `[10,2,10,30,10,4,50]`.

- TensorFlow's Convolution does not have an equivalent operation to ONNX's Padding operation. Therefore, a `Pad` OP is inserted immediately before a Convolution with Padding of size greater than 1.

- Support conversion to TensorFlow saved model and TFLite (Float32/Float16).

- Does not support quantization to INT8. For quantization, use the official TensorFlow converter to convert the generated saved_model yourself.

- Files exceeding the Protocol Buffers file size limit of 2GB are not supported. Therefore, the external format is not supported at the initial stage of tool creation.

- If there are ONNX OPs that are not supported by TensorFlow, use simple-onnx-processing-tools to replace them with harmless OPs in advance and then use this tool to convert them. In other words, you can convert any model with your efforts.

- `BatchNormalization` supports only inference mode.

- Only for `opset=11` or higher.

- If you do not like the generated TFLite OP name, edit it using tflite2json2tflite.

- The generated Keras models cannot be used for retraining. If you want to train, you must build your own model.

- Implement the `Resize` process for the 5D tensor.

- Add process to replace `Asin` with `pseudo-Asin`.

- Add process to replace `Acos` with `pseudo-Acos`.

- Add process to replace `GatherND` with `pseudo-GatherND`.

- Add process to replace `HardSwish` with `pseudo-HardSwish`.

- Add process to replace `GridSample` with `pseudo-GridSample`.

- Add process to replace `LeakyRelu` with `pseudo-LeakyRelu`.
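The layout conversions described in the key concepts can be sketched in plain Python. This is an illustration only, not onnx2tf's actual implementation: the helper names `channel_last_perm`, `to_channel_last`, `convert_axis` and `onnx_pads_to_channel_last_paddings` are hypothetical, and the sketch assumes that channels always sit on axis 1 in the ONNX layout and that tensors of rank 2 or lower are left untouched.

```python
from typing import List


def channel_last_perm(rank: int) -> List[int]:
    """Permutation that moves axis 1 (channels) to the end, e.g. NCHW -> NHWC."""
    if rank < 3:
        # Assumption: 1-D and 2-D tensors have no spatial axes to reorder.
        return list(range(rank))
    return [0] + list(range(2, rank)) + [1]


def to_channel_last(shape: List[int]) -> List[int]:
    """Reorder a channel-first shape into the channel-last shape."""
    return [shape[i] for i in channel_last_perm(len(shape))]


def convert_axis(axis: int, rank: int) -> int:
    """Map an axis index of the channel-first layout to the channel-last layout."""
    axis = axis % rank  # normalize negative axes
    return channel_last_perm(rank).index(axis)


def onnx_pads_to_channel_last_paddings(pads: List[int]) -> List[List[int]]:
    """Convert ONNX Conv pads to per-axis [before, after] pairs.

    ONNX stores pads as [x1_begin, x2_begin, ..., x1_end, x2_end, ...].
    The result covers batch, spatial axes and channels of a channel-last
    tensor, which is the form an explicit Pad OP would need.
    """
    n = len(pads) // 2
    spatial = [[pads[i], pads[i + n]] for i in range(n)]
    return [[0, 0]] + spatial + [[0, 0]]


print(to_channel_last([1, 3, 224, 224]))    # [1, 224, 224, 3]
print(to_channel_last([1, 2, 8, 16, 16]))   # [1, 8, 16, 16, 2]
print(convert_axis(1, 4))                   # 3
print(onnx_pads_to_channel_last_paddings([1, 2, 1, 2]))
# [[0, 0], [1, 1], [2, 2], [0, 0]]
```

Attributes such as `axes` refer to the channel-first layout in the ONNX file, which is why they have to be remapped along with the tensor shapes.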

Demo

Sample Usage

$ git clone https://github.com/PINTO0309/onnx2tf.git && cd onnx2tf

$ docker run --rm -it \
  -v `pwd`:/workdir \
  -w /workdir \
  ghcr.io/pinto0309/onnx2tf:0.0.9

$ pip install -e . -U

or

$ pip install -U onnx2tf

$ wget https://github.com/PINTO0309/onnx2tf/releases/download/0.0.2/resnet18-v1-7.onnx

$ onnx2tf -i resnet18-v1-7.onnx -o saved_model

CLI Parameter

$ onnx2tf -h

usage: onnx2tf
  [-h]
  -i INPUT_ONNX_FILE_PATH
  [-o OUTPUT_FOLDER_PATH]
  [-k KEEP_NCW_OR_NCHW_OR_NCDHW_INPUT_NAMES [KEEP_NCW_OR_NCHW_OR_NCDHW_INPUT_NAMES ...]]
  [-rari64 | -rarf32]
  [-rasin]
  [-racos]
  [-n]

optional arguments:
  -h, --help
    show this help message and exit

  -i INPUT_ONNX_FILE_PATH, --input_onnx_file_path INPUT_ONNX_FILE_PATH
    Input onnx file path.

  -o OUTPUT_FOLDER_PATH, --output_folder_path OUTPUT_FOLDER_PATH
    Output folder path. Default: "saved_model"

  -k KEEP_NCW_OR_NCHW_OR_NCDHW_INPUT_NAMES [KEEP_NCW_OR_NCHW_OR_NCDHW_INPUT_NAMES ...], \
      --keep_ncw_or_nchw_or_ncdhw_input_names KEEP_NCW_OR_NCHW_OR_NCDHW_INPUT_NAMES \
          [KEEP_NCW_OR_NCHW_OR_NCDHW_INPUT_NAMES ...]
    Holds the NCW or NCHW or NCDHW of the input shape for the specified INPUT OP names.
    If a nonexistent INPUT OP name is specified, it is ignored.
    Valid only for 3D, 4D and 5D input tensors.
    e.g. --keep_ncw_or_nchw_or_ncdhw_input_names "input0" "input1" "input2"

  -rari64, --replace_argmax_to_reducemax_and_indicies_is_int64
    Replace ArgMax with a ReduceMax. The returned indicies are int64.
    Only one of replace_argmax_to_reducemax_and_indicies_is_int64
    and replace_argmax_to_reducemax_and_indicies_is_float32 can be specified.

  -rarf32, --replace_argmax_to_reducemax_and_indicies_is_float32
    Replace ArgMax with a ReduceMax. The returned indicies are float32.
    Only one of replace_argmax_to_reducemax_and_indicies_is_int64
    and replace_argmax_to_reducemax_and_indicies_is_float32 can be specified.

  -rasin, --replace_asin_to_pseudo_asin
    Replace Asin with a pseudo Asin.

  -racos, --replace_acos_to_pseudo_acos
    Replace Acos with a pseudo Acos.

  -n, --non_verbose
    Do not show all information logs. Only error logs are displayed.
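When the CLI is driven from another script, the options above can be assembled programmatically. The following is a minimal sketch: `build_onnx2tf_command` and its parameter names are hypothetical helpers made up for this example, and only the flags listed in the help text are used. It enforces the rule that `-rari64` and `-rarf32` cannot be combined by accepting a single dtype choice.

```python
import shlex
from typing import List, Optional


def build_onnx2tf_command(
    input_onnx_file_path: str,
    output_folder_path: str = "saved_model",
    keep_input_names: Optional[List[str]] = None,
    argmax_indices_dtype: Optional[str] = None,  # "int64", "float32" or None
    replace_asin: bool = False,
    replace_acos: bool = False,
    non_verbose: bool = False,
) -> List[str]:
    """Build the argument list for the onnx2tf CLI (hypothetical helper)."""
    cmd = ["onnx2tf", "-i", input_onnx_file_path, "-o", output_folder_path]
    if keep_input_names:
        cmd += ["-k", *keep_input_names]
    # -rari64 and -rarf32 are mutually exclusive, so one choice selects one flag.
    if argmax_indices_dtype == "int64":
        cmd.append("-rari64")
    elif argmax_indices_dtype == "float32":
        cmd.append("-rarf32")
    elif argmax_indices_dtype is not None:
        raise ValueError("argmax_indices_dtype must be 'int64', 'float32' or None")
    if replace_asin:
        cmd.append("-rasin")
    if replace_acos:
        cmd.append("-racos")
    if non_verbose:
        cmd.append("-n")
    return cmd


cmd = build_onnx2tf_command(
    "resnet18-v1-7.onnx",
    keep_input_names=["input0"],
    non_verbose=True,
)
print(shlex.join(cmd))
# onnx2tf -i resnet18-v1-7.onnx -o saved_model -k input0 -n
```

The returned list can be passed to `subprocess.run(cmd, check=True)` once onnx2tf is installed.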

In-script Usage

>>> from onnx2tf import convert

>>> help(convert)

Help on function convert in module onnx2tf:

convert(
    input_onnx_file_path: Union[str, NoneType] = '',
    onnx_graph: Union[onnx.onnx_ml_pb2.ModelProto, NoneType] = None,
    output_folder_path: Union[str, NoneType] = 'saved_model',
    keep_ncw_or_nchw_or_ncdhw_input_names: Union[List[str], NoneType] = None,
    replace_argmax_to_reducemax_and_indicies_is_int64: Union[bool, NoneType] = False,
    replace_argmax_to_reducemax_and_indicies_is_float32: Union[bool, NoneType] = False,
    replace_asin_to_pseudo_asin: Union[bool, NoneType] = False,
    replace_acos_to_pseudo_acos: Union[bool, NoneType] = False,
    non_verbose: Union[bool, NoneType] = False
) -> keras.engine.training.Model

    Convert ONNX to TensorFlow models.

    Parameters
    ----------
    input_onnx_file_path: Optional[str]
        Input onnx file path.
        Either input_onnx_file_path or onnx_graph must be specified.

    onnx_graph: Optional[onnx.ModelProto]
        onnx.ModelProto.
        Either input_onnx_file_path or onnx_graph must be specified.
        If onnx_graph is specified, ignore input_onnx_file_path and process onnx_graph.

    output_folder_path: Optional[str]
        Output tensorflow model folder path.
        Default: "saved_model"

    keep_ncw_or_nchw_or_ncdhw_input_names: Optional[List[str]]
        Holds the NCW or NCHW or NCDHW of the input shape for the specified INPUT OP names.
        If a nonexistent INPUT OP name is specified, it is ignored.
        Valid only for 3D, 4D and 5D input tensors.
        e.g.
        keep_ncw_or_nchw_or_ncdhw_input_names=['input0', 'input1', 'input2']

    replace_argmax_to_reducemax_and_indicies_is_int64: Optional[bool]
        Replace ArgMax with a ReduceMax. The returned indicies are int64.
        Only one of replace_argmax_to_reducemax_and_indicies_is_int64 and
        replace_argmax_to_reducemax_and_indicies_is_float32 can be specified.
        Default: False

    replace_argmax_to_reducemax_and_indicies_is_float32: Optional[bool]
        Replace ArgMax with a ReduceMax. The returned indicies are float32.
        Only one of replace_argmax_to_reducemax_and_indicies_is_int64 and
        replace_argmax_to_reducemax_and_indicies_is_float32 can be specified.
        Default: False

    replace_asin_to_pseudo_asin: Optional[bool]
        Replace Asin with a pseudo Asin.

    replace_acos_to_pseudo_acos: Optional[bool]
        Replace Acos with a pseudo Acos.

    non_verbose: Optional[bool]
        Do not show all information logs. Only error logs are displayed.
        Default: False

    Returns
    ----------
    model: tf.keras.Model
        Model
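A call to `convert` might look like the sketch below. The wrapper names `check_convert_kwargs` and `run_conversion` are hypothetical and exist only in this example; they pre-check the two constraints stated in the docstring before handing the arguments to `convert`. The import is deferred so the checks can be exercised without onnx2tf installed.

```python
from typing import Any, Dict


def check_convert_kwargs(kwargs: Dict[str, Any]) -> Dict[str, Any]:
    """Validate the constraints documented for convert() (hypothetical helper)."""
    if not kwargs.get("input_onnx_file_path") and kwargs.get("onnx_graph") is None:
        raise ValueError(
            "Either input_onnx_file_path or onnx_graph must be specified."
        )
    if kwargs.get("replace_argmax_to_reducemax_and_indicies_is_int64") and kwargs.get(
        "replace_argmax_to_reducemax_and_indicies_is_float32"
    ):
        raise ValueError(
            "Only one of replace_argmax_to_reducemax_and_indicies_is_int64 and "
            "replace_argmax_to_reducemax_and_indicies_is_float32 can be specified."
        )
    return kwargs


def run_conversion(**kwargs: Any):
    """Check the arguments, then call onnx2tf.convert with them."""
    # Imported lazily: onnx2tf and its dependencies are only needed at call time.
    from onnx2tf import convert

    return convert(**check_convert_kwargs(kwargs))


# Example call (requires onnx2tf and its dependencies to be installed):
# model = run_conversion(
#     input_onnx_file_path="resnet18-v1-7.onnx",
#     output_folder_path="saved_model",
#     non_verbose=True,
# )
# model.summary()
```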

[WIP] Parameter replacement

This tool is used to convert NCW to NWC, NCHW to NHWC, NCDHW to NDHWC, NCDDHW to NDDHWC, NCDDDDDDHW to NDDDDDDHWC. Therefore, as stated in the Key concept section, the conversion will inevitably break down at some point in the model. You need to look at the entire conversion log to see which OP transpositions are failing and correct them yourself. I deliberately explain very little here, because I know that no matter how much detail I put in the README, you will not read it at all.
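One reason such breakdowns occur can be shown with a small, self-contained sketch. It is an illustration under simplifying assumptions, not the tool's code, and `flat_order_after_transpose` is a hypothetical helper. A `Reshape` reads elements in storage order, so once a tensor has been moved from NCHW to NHWC the same target shape no longer selects the same elements.

```python
from itertools import product
from typing import List, Sequence


def flat_order_after_transpose(shape: Sequence[int], perm: Sequence[int]) -> List[int]:
    """List the original row-major flat indices in the order the elements
    are stored after transposing a tensor of `shape` by `perm`."""
    # Row-major strides of the original (untransposed) tensor.
    strides = [1] * len(shape)
    for i in range(len(shape) - 2, -1, -1):
        strides[i] = strides[i + 1] * shape[i + 1]

    new_shape = [shape[p] for p in perm]
    order = []
    for idx in product(*(range(d) for d in new_shape)):
        # idx[i] is the position along original axis perm[i].
        order.append(sum(idx[i] * strides[perm[i]] for i in range(len(perm))))
    return order


nchw_shape = [1, 2, 2, 2]  # N, C, H, W

# Flattening the NCHW tensor keeps the original element order.
print(flat_order_after_transpose(nchw_shape, [0, 1, 2, 3]))
# [0, 1, 2, 3, 4, 5, 6, 7]

# Flattening after NCHW -> NHWC interleaves the two channels.
print(flat_order_after_transpose(nchw_shape, [0, 2, 3, 1]))
# [0, 4, 1, 5, 2, 6, 3, 7]
```

Any OP that depends on element order or on explicit axis numbers after such a point needs its parameters adjusted to match the new layout.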

"A conversion error occurs." Please don't post such low level questions as issues.

- param_replacement.json

{
  "format_version": 1,
  "operations": [
    {
      "op_name": "StatefulPartitionedCall/Tile_4",
      "param_target": "inputs",
      "param_name": "const_fold_opt__677",
      "values": [1,1,17]
    },
    {
      "op_name": "StatefulPartitionedCall/Sum_3",
      "param_target": "attributes",
      "param_name": "axes",
      "values": [2]
    }
  ]
}

`param_target` is either `attributes` or `inputs`. Use `values` to disable parameter transposition or to overwrite the parameter.
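A file in this shape can also be generated from Python, which avoids hand-editing mistakes. The sketch below uses only the standard `json` module; `make_operation` and `build_param_replacement` are hypothetical helper names, and the only validation assumed is that `param_target` must be `attributes` or `inputs`. How the file is handed to the tool is not covered here.

```python
import json
from typing import Any, Dict, List

VALID_TARGETS = {"attributes", "inputs"}


def make_operation(
    op_name: str, param_target: str, param_name: str, values: List[Any]
) -> Dict[str, Any]:
    """Build one entry of the "operations" list (hypothetical helper)."""
    if param_target not in VALID_TARGETS:
        raise ValueError("param_target must be 'attributes' or 'inputs'")
    return {
        "op_name": op_name,
        "param_target": param_target,
        "param_name": param_name,
        "values": values,
    }


def build_param_replacement(
    operations: List[Dict[str, Any]], format_version: int = 1
) -> Dict[str, Any]:
    """Wrap the operations in the top-level structure shown above."""
    return {"format_version": format_version, "operations": operations}


replacement = build_param_replacement(
    [
        make_operation(
            "StatefulPartitionedCall/Tile_4", "inputs", "const_fold_opt__677", [1, 1, 17]
        ),
        make_operation("StatefulPartitionedCall/Sum_3", "attributes", "axes", [2]),
    ]
)

# Serialize to strict JSON; write this string to param_replacement.json as needed.
text = json.dumps(replacement, indent=2)
print(text)
```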

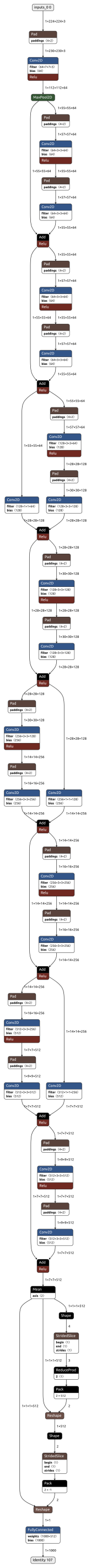

Generated Model
