A ``py.test`` fixture for benchmarking code.

Project description


A py.test fixture for benchmarking code. It groups the tests into rounds that are calibrated to the chosen timer. See the calibration and FAQ sections of the documentation.
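The idea of rounds calibrated to a timer can be sketched as follows. This is a minimal illustration, not the plugin's calibration code. It estimates the timer's resolution and keeps doubling the number of loop iterations per round until one round lasts several timer ticks, so that timer granularity is only a small share of each measurement. The helper names, the factor `precision=10` and the doubling strategy are all assumptions made for illustration. The sketch uses `time.perf_counter`, which needs Python 3.3+.

```python
import time


def estimate_timer_resolution(timer=time.perf_counter, samples=20):
    """Smallest non-zero step observed between two consecutive timer reads."""
    best = float("inf")
    for _ in range(samples):
        t0 = timer()
        t1 = timer()
        while t1 == t0:  # spin until the timer actually advances
            t1 = timer()
        best = min(best, t1 - t0)
    return best


def calibrate_iterations(func, timer=time.perf_counter, precision=10):
    """Hypothetical helper: double the loop count until a round lasts `precision` ticks.

    Longer rounds make the timer's granularity a small share of each
    measurement. The factor and the doubling are illustrative choices.
    """
    target = estimate_timer_resolution(timer) * precision
    iterations = 1
    while True:
        start = timer()
        for _ in range(iterations):
            func()
        if timer() - start >= target:
            return iterations
        iterations *= 2


def run_rounds(func, rounds=5, timer=time.perf_counter):
    """Time `rounds` rounds of `func`; return (iterations, per-call seconds per round)."""
    iterations = calibrate_iterations(func, timer=timer)
    per_call = []
    for _ in range(rounds):
        start = timer()
        for _ in range(iterations):
            func()
        per_call.append((timer() - start) / iterations)
    return iterations, per_call
```

For example, `iterations, per_call = run_rounds(lambda: sum(range(100)))` returns the calibrated loop count and one per-call estimate per round. Taking `min(per_call)` is a common way to reduce noise from other processes.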

Free software: BSD license

Installation

pip install pytest-benchmark

Documentation

Examples

This plugin provides a benchmark fixture. This fixture is a callable object that will benchmark any function passed to it.

Example:

```python
import time


def something(duration=0.000001):
    # Code to be measured
    return time.sleep(duration)


def test_my_stuff(benchmark):
    # benchmark something
    result = benchmark(something)

    # Extra code, to verify that the run completed correctly.
    # Note: this code is not measured.
    assert result is None
```

You can also pass extra arguments:

```python
import time


def test_my_stuff(benchmark):
    result = benchmark(time.sleep, 0.02)
```

Screenshots

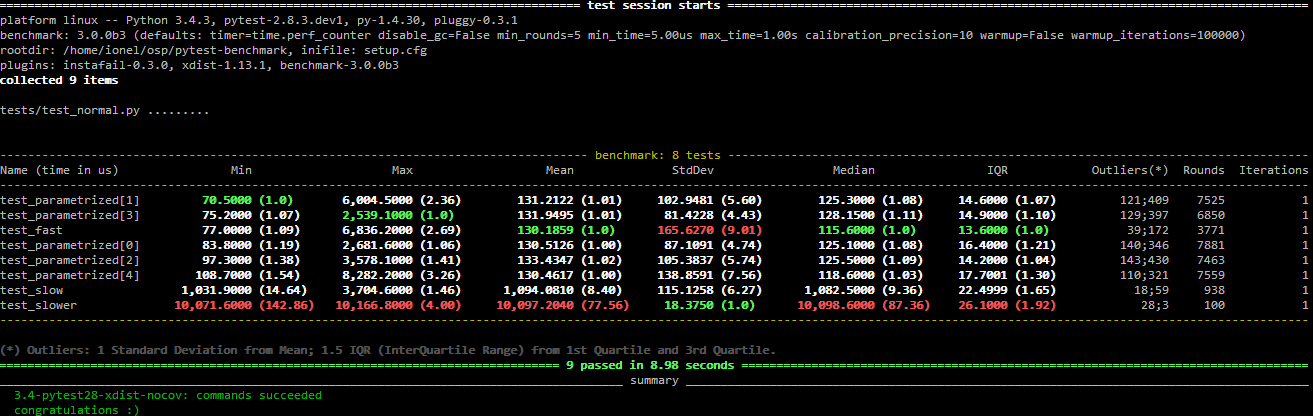

Normal run:

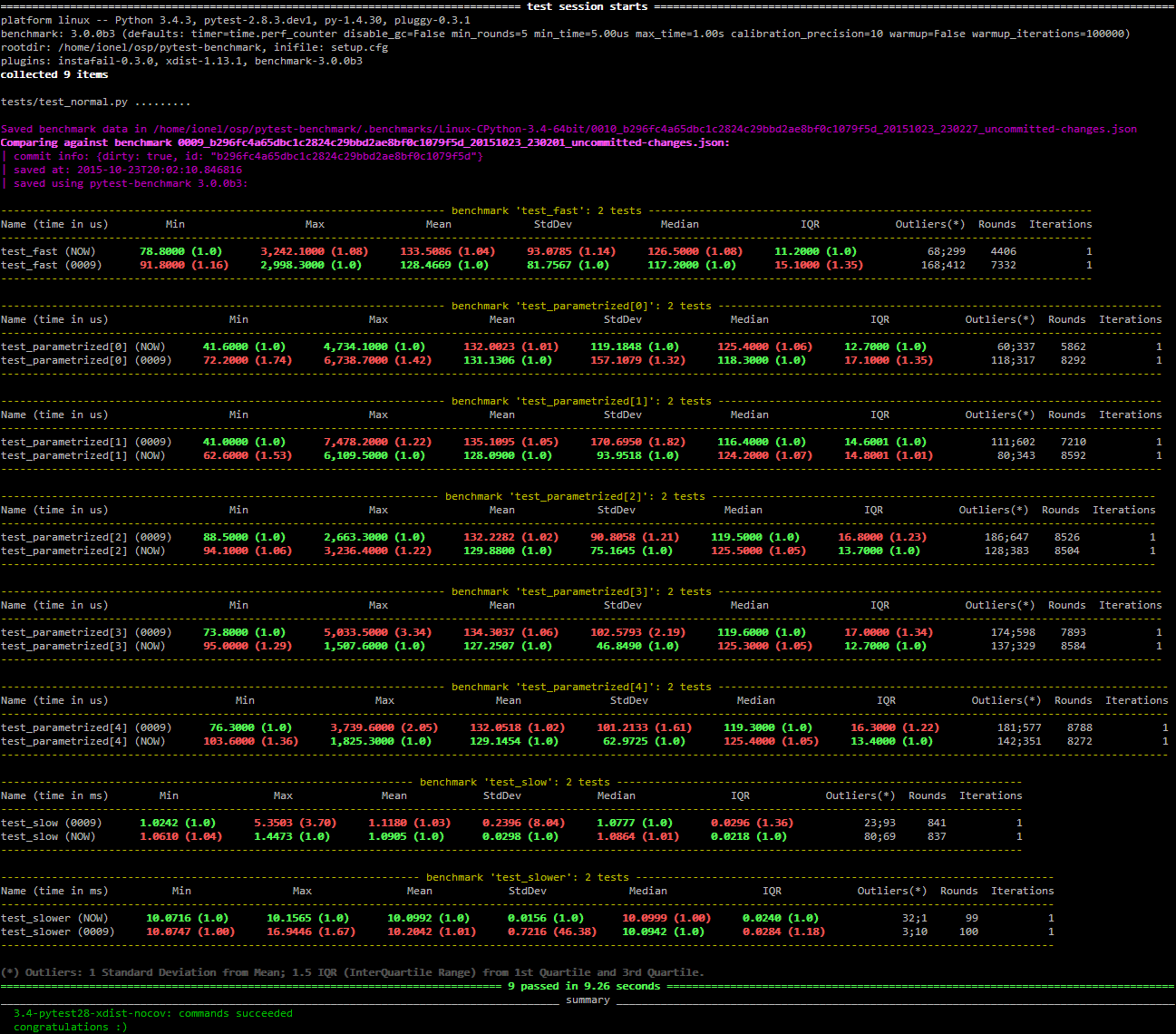

Compare mode (--benchmark-compare):

Histogram (--benchmark-histogram):

Also, it has nice tooltips.

Development

To run all the tests, run:

tox

Credits

Timing code and ideas taken from: https://bitbucket.org/haypo/misc/src/tip/python/benchmark.py

Changelog

3.0.0a2 (2015-09-30)

Fixed accidental output capturing caused by capturemanager misuse.

3.0.0a1 (2015-09-13)

Added JSON report saving (the --benchmark-json command line argument).

Added benchmark data storage (the --benchmark-save and --benchmark-autosave command line arguments).

Added comparison to previous runs (the --benchmark-compare command line argument).

Added performance regression checks (the --benchmark-compare-fail command line argument).
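Comparing against a previous run and failing on a regression both come down to comparing one statistic from the current run with the same statistic from a saved run. The sketch below is a hypothetical illustration, not the plugin's implementation or its threshold syntax. `percent_change` and `is_regression` are made-up helpers, and the stats layout (plain dicts of seconds keyed by `"min"`, `"mean"`) is an assumption.

```python
def percent_change(old, new):
    """Relative change from `old` to `new`, in percent (positive means slower)."""
    if old == 0:
        raise ValueError("cannot compute a relative change from a zero baseline")
    return (new - old) / old * 100.0


def is_regression(old_stats, new_stats, stat="mean", max_slowdown_pct=5.0):
    """Return True when `stat` got slower by more than `max_slowdown_pct` percent.

    `old_stats` / `new_stats` are assumed to be dicts of timings in seconds,
    e.g. {"min": ..., "mean": ...}; this layout is hypothetical.
    """
    return percent_change(old_stats[stat], new_stats[stat]) > max_slowdown_pct


previous = {"min": 0.0100, "mean": 0.0120}
current = {"min": 0.0101, "mean": 0.0132}
print(round(percent_change(previous["mean"], current["mean"]), 1))  # 10.0
print(is_regression(previous, current))  # True
print(is_regression(previous, current, stat="min"))  # False
```

Checking a relative rather than an absolute threshold keeps the same rule usable for benchmarks that take microseconds and for ones that take seconds.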

Added possibility to group by various parts of test name (the --benchmark-compare-group-by command line argument).
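Grouping by parts of the test name can be pictured as splitting each pytest node id into its module, function and parameter parts, then bucketing on one of them. This is a hypothetical sketch. The `split_nodeid` and `group_by_part` helpers and the part names `module`, `func` and `param` are illustrative assumptions, not the plugin's actual option values.

```python
import re
from collections import OrderedDict

# module::function[param], where the [param] suffix is optional
NODEID = re.compile(r"^(?P<module>[^:]+)::(?P<func>[^\[]+)(?:\[(?P<param>.*)\])?$")


def split_nodeid(nodeid):
    """Split 'tests/test_x.py::test_fn[param]' into its parts (param may be None)."""
    match = NODEID.match(nodeid)
    if match is None:
        raise ValueError("unexpected node id: %r" % nodeid)
    return match.groupdict()


def group_by_part(nodeids, part):
    """Bucket node ids by one part of their name, keeping first-seen order."""
    groups = OrderedDict()
    for nodeid in nodeids:
        key = split_nodeid(nodeid)[part]
        groups.setdefault(key, []).append(nodeid)
    return groups


names = [
    "tests/test_sort.py::test_sorted[small]",
    "tests/test_sort.py::test_sorted[large]",
    "tests/test_sort.py::test_heapq[small]",
]
print(list(group_by_part(names, "func")))   # ['test_sorted', 'test_heapq']
print(list(group_by_part(names, "param")))  # ['small', 'large']
```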

Added historical plotting (the --benchmark-histogram command line argument).

Added option to fine tune the calibration (the --benchmark-calibration-precision command line argument and calibration_precision marker option).

Changed benchmark_weave to no longer be a context manager. Cleanup is performed automatically. BACKWARDS INCOMPATIBLE

Added benchmark.weave method (alternative to benchmark_weave fixture).

Added new hooks to allow customization:

pytest_benchmark_generate_machine_info(config)

pytest_benchmark_update_machine_info(config, info)

pytest_benchmark_generate_commit_info(config)

pytest_benchmark_update_commit_info(config, info)

pytest_benchmark_group_stats(config, benchmarks, group_by)

pytest_benchmark_generate_json(config, benchmarks, include_data)

pytest_benchmark_update_json(config, benchmarks, output_json)

pytest_benchmark_compare_machine_info(config, benchmarksession, machine_info, compared_benchmark)
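As an example of these hooks, a `conftest.py` could add extra entries to the recorded machine information. This is a minimal sketch. It assumes `info` is a mutable dict that the plugin later stores with the results. The `hostname` and `ci_build` keys and the `CI_BUILD_ID` environment variable are hypothetical.

```python
# conftest.py
import os
import platform


def pytest_benchmark_update_machine_info(config, info):
    """Enrich the machine info saved with each run.

    Assumes `info` is a dict that can be updated in place.
    """
    info["hostname"] = platform.node()
    # Hypothetical: record which CI build produced these numbers, if any.
    info["ci_build"] = os.environ.get("CI_BUILD_ID", "local")
```

Extra fields like these can make saved runs from different machines easier to tell apart when comparing them later.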

Changed the timing code to:

Tracers are automatically disabled when running the test function (like coverage tracers).
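Disabling tracers matters because tools like coverage install a trace function through `sys.settrace`, and that function then runs for every traced line and slows it down. A minimal sketch of the idea, not the plugin's actual timing code: remove the current thread's trace function around the timed call and restore it afterwards. `timed_without_tracer` is a hypothetical helper.

```python
import sys
import time


def timed_without_tracer(func, *args, **kwargs):
    """Call `func` with the current thread's tracer disabled; return (result, seconds)."""
    tracer = sys.gettrace()
    sys.settrace(None)  # e.g. drop a coverage tracer for the duration of the call
    try:
        start = time.perf_counter()
        result = func(*args, **kwargs)
        elapsed = time.perf_counter() - start
    finally:
        sys.settrace(tracer)  # always restore whatever tracer was installed
    return result, elapsed
```

`sys.settrace` only affects the calling thread, so this sketch does nothing about tracers installed in other threads.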

Fixed an issue with calibration code getting stuck.

Added pedantic mode via benchmark.pedantic(). This mode disables calibration and allows a setup function.
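Pedantic mode trades automatic calibration for explicit control. The sketch below illustrates that idea with a hypothetical standalone runner. `run_pedantic` and its `rounds`, `iterations` and `setup` parameters are assumptions and not necessarily the signature of `benchmark.pedantic()`. In this sketch the setup function runs before each round, outside the timed region, and returns the positional arguments for the target.

```python
import time


def run_pedantic(target, setup=None, rounds=3, iterations=1, timer=time.perf_counter):
    """Hypothetical runner: fixed rounds, no calibration, untimed per-round setup.

    If `setup` is given it is called before every round (outside the timed
    region) and must return a tuple of positional arguments for `target`.
    Returns the per-call time of each round, in seconds.
    """
    if setup is not None and iterations != 1:
        # Several iterations would all reuse the same setup output.
        raise ValueError("use iterations=1 when a setup function is given")
    timings = []
    for _ in range(rounds):
        args = setup() if setup is not None else ()
        start = timer()
        for _ in range(iterations):
            target(*args)
        timings.append((timer() - start) / iterations)
    return timings


def make_data():
    # Fresh reversed input each round, so the in-place sort never sees sorted data.
    return (list(range(1000, 0, -1)),)


timings = run_pedantic(lambda data: data.sort(), setup=make_data, rounds=5)
print(len(timings))  # 5
```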

2.5.0 (2015-06-20)

Improved test suite a bit (not using cram anymore).

Improved help text on the --benchmark-warmup option.

Made warmup_iterations available as a marker argument (e.g. @pytest.mark.benchmark(warmup_iterations=1234)).

Fixed --benchmark-verbose’s printouts to work properly with output capturing.

Changed how warmup iterations are computed (now number of total iterations is used, instead of just the rounds).

Fixed a bug where calibration would run forever.

Disabled red/green coloring (it was kinda random) when there’s a single test in the results table.

2.4.1 (2015-03-16)

Fix regression: the plugin was raising ValueError: no option named 'dist' when xdist wasn’t installed.

2.4.0 (2015-03-12)

Add a benchmark_weave experimental fixture.

Fix internal failures when xdist plugin is active.

Automatically disable benchmarks if xdist is active.

2.3.0 (2014-12-27)

Moved the warmup in the calibration phase. Solves issues with benchmarking on PyPy.

Added a --benchmark-warmup-iterations option to fine-tune that.

2.2.0 (2014-12-26)

Make the default rounds smaller (so that variance is more accurate).

Show the defaults in the --help section.

2.1.0 (2014-12-20)

Simplify the calibration code so that the round is smaller.

Add diagnostic output for calibration code (--benchmark-verbose).

2.0.0 (2014-12-19)

Replace the context-manager based API with a simple callback interface. BACKWARDS INCOMPATIBLE

Implement timer calibration for precise measurements.

1.0.0 (2014-12-15)

Use a precise default timer for PyPy.

? (?)

Readme and styling fixes (contributed by Marc Abramowitz)

Lots of wild changes.


Download files


File details

Details for the file pytest-benchmark-3.0.0a2.tar.gz.

File metadata

- Download URL: pytest-benchmark-3.0.0a2.tar.gz

- Upload date:

- Size: 209.3 kB

- Tags: Source

- Uploaded using Trusted Publishing? No

File hashes

| Algorithm | Hash digest |
|---|---|
| SHA256 | 11d8c32a05b8268db82868361d1f96a70ac2f5759a07f02a1997bd3abc2fff38 |
| MD5 | 6b12d27b8b540f00109843c55b6c15a6 |
| BLAKE2b-256 | 236b47755e721d55b8be58437cb9d4d056b7ac2fb0d4c3b6fe814076b0beb4d7 |
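To check a downloaded archive against the SHA256 digest listed for it, Python's standard `hashlib` module is enough. A minimal sketch. The digest constant is copied from the source distribution's entry. The file name in the usage note assumes the archive was saved to the current directory under its original name.

```python
import hashlib

# SHA256 digest listed for pytest-benchmark-3.0.0a2.tar.gz
EXPECTED_SHA256 = "11d8c32a05b8268db82868361d1f96a70ac2f5759a07f02a1997bd3abc2fff38"


def sha256_of(path, chunk_size=65536):
    """Hash the file in chunks so large downloads need not fit in memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as fh:
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify(path, expected=EXPECTED_SHA256):
    """True when the file's SHA256 matches the expected hex digest."""
    return sha256_of(path) == expected.lower()
```

Usage: `verify("pytest-benchmark-3.0.0a2.tar.gz")` returns `True` when the downloaded file is intact.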

File details

Details for the file pytest_benchmark-3.0.0a2-py2.py3-none-any.whl.

File metadata

- Download URL: pytest_benchmark-3.0.0a2-py2.py3-none-any.whl

- Upload date:

- Size: 31.2 kB

- Tags: Python 2, Python 3

- Uploaded using Trusted Publishing? No

File hashes

| Algorithm | Hash digest |
|---|---|
| SHA256 | 46a9e2b6d7bbe057344a8a0cd0abdb14610da7db4b4569b73c022224190b7bc3 |
| MD5 | 34fdea1a25f0b5e36ccc43648be7f31f |
| BLAKE2b-256 | 63cf66948ea0cd78edddaf53cc55577554df8a5b91a4f5adeb2b559045865dc2 |