Whisper command line client that uses CTranslate2 and faster-whisper

Project description

Introduction

Whisper command line client compatible with original OpenAI client based on CTranslate2.

It uses CTranslate2 and Faster-whisper Whisper implementation that is up to 4 times faster than openai/whisper for the same accuracy while using less memory.

Key features:

- ✅ 4x faster than OpenAI's Whisper at the same accuracy (up to 16X faster with batched inference)

- ✅ OpenAI Whisper CLI compatibility (easier migration)

- ✅ Docker image with pre-loaded models

- ✅ Speaker Diarization (Speaker Identification)

- ✅ Voice Activity Detection (VAD) Filter to improve quality

- ✅ Load your own fine-tuned Whisper models

- ✅ Live transcription from microphone

- ✅ Color-coded confidence visualization

Installation

Python package

To install the latest stable version, just type:

pip install whisper-ctranslate2

Using prebuild Docker image

You can use build docker image. First pull the image:

docker pull ghcr.io/softcatala/whisper-ctranslate2:latest

The Docker image includes the small, medium" and large-v2.

To run it:

docker run --gpus "device=0" \

-v "$(pwd)":/srv/files/ \

-it ghcr.io/softcatala/whisper-ctranslate2:latest \

/srv/files/e2e-tests/gossos.mp3 \

--output_dir /srv/files/

Notes:

- --gpus "device=0" gives access to the GPU. If you do not have a GPU, remove this.

- "$(pwd)":/srv/files/ maps your current directory to /srv/files/ inside the container

If you always need to use a model that is not in the image, you can create a derived Docker image with the model preloaded or use Docker volumes to persist and share the model files.

CPU and GPU support

GPU and CPU support is provided by CTranslate2.

It has compatibility with x86-64 and AArch64/ARM64 CPU and integrates multiple backends that are optimized for these platforms: Intel MKL, oneDNN, OpenBLAS, Ruy, and Apple Accelerate.

GPU execution requires the NVIDIA libraries cuBLAS 11.x to be installed on the system. Please refer to the CTranslate2 documentation

By default the best hardware available is selected for inference. You can use the options --device and --device_index to control manually the selection.

Usage

Same command line as OpenAI Whisper.

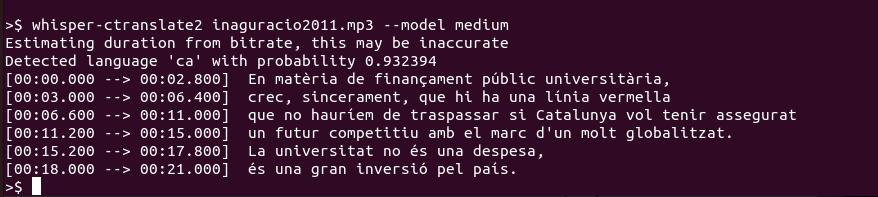

To transcribe:

whisper-ctranslate2 inaguracio2011.mp3 --model medium

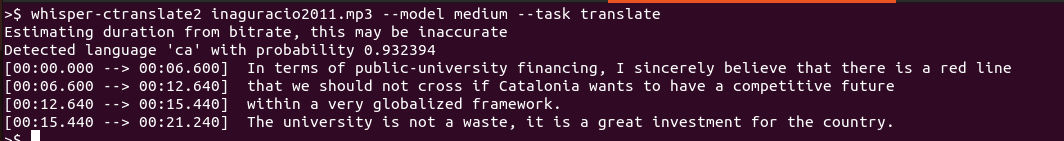

To translate:

whisper-ctranslate2 inaguracio2011.mp3 --model medium --task translate

Whisper translate task translates the transcription from the source language to English (the only target language supported).

Additionally using:

whisper-ctranslate2 --help

All the supported options with their help are shown.

CTranslate2 specific options

On top of the OpenAI Whisper command line options, there are some specific options provided by CTranslate2 or whiper-ctranslate2.

Batched inference

Batched inference transcribes each segment in-dependently which can provide an additional 2x-4x speed increase:

whisper-ctranslate2 inaguracio2011.mp3 --batched True

You can additionally use the --batch_size to specify the maximum number of parallel requests to model for decoding.

Batched inference uses Voice Activity Detection (VAD) filter and ignores the following parameters: compression_ratio_threshold, logprob_threshold, no_speech_threshold, condition_on_previous_text, prompt_reset_on_temperature, prefix, hallucination_silence_threshold.

Quantization

--compute_type option which accepts default,auto,int8,int8_float16,int16,float16,float32 values indicates the type of quantization to use. On CPU int8 will give the best performance:

whisper-ctranslate2 myfile.mp3 --compute_type int8

Loading the model from a directory

--model_directory option allows to specify the directory from which you want to load a CTranslate2 Whisper model. For example, if you want to load your own quantified Whisper model version or using your own Whisper fine-tuned version. The model must be in CTranslate2 format.

Using Voice Activity Detection (VAD) filter

--vad_filter option enables the voice activity detection (VAD) to filter out parts of the audio without speech. This step uses the Silero VAD model:

whisper-ctranslate2 myfile.mp3 --vad_filter True

The VAD filter accepts multiple additional options to determine the filter behavior:

--vad_onset VALUE (float)

Probabilities above this value are considered as speech.

--vad_min_speech_duration_ms (int)

Final speech chunks shorter min_speech_duration_ms are thrown out.

--vad_max_speech_duration_s VALUE (int)

Maximum duration of speech chunks in seconds. Longer will be split at the timestamp of the last silence.

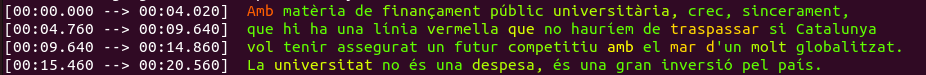

Print colors

--print_colors True options prints the transcribed text using an experimental color coding strategy based on whisper.cpp to highlight words with high or low confidence:

whisper-ctranslate2 myfile.mp3 --print_colors True

Live transcribe from your microphone

--live_transcribe True option activates the live transcription mode from your microphone:

whisper-ctranslate2 --live_transcribe True --language en

https://user-images.githubusercontent.com/309265/231533784-e58c4b92-e9fb-4256-b4cd-12f1864131d9.mov

Diarization (speaker identification)

There is experimental diarization support using pyannote.audio to identify speakers. At the moment, the support is at segment level.

To enable diarization you need to follow these steps:

- Install

pyannote.audiowithpip install "pyannote.audio==4.0" - Accept

pyannote/speaker-diarization-community-1user conditions - Create an access token at

hf.co/settings/tokens.

And then execute passing the HuggingFace API token as parameter to enable diarization:

whisper-ctranslate2 --hf_token YOUR_HF_TOKEN

and then the name of the speaker is added in the output files (e.g. JSON, VTT and STR files):

[SPEAKER_00]: There is a lot of people in this room

The option --speaker_name SPEAKER_NAME allows to use your own string to identify the speaker.

Need help?

Check our frequently asked questions for common questions.

Contact

Jordi Mas jmas@softcatala.org

Project details

Release history Release notifications | RSS feed

Download files

Download the file for your platform. If you're not sure which to choose, learn more about installing packages.

Source Distribution

Built Distribution

Filter files by name, interpreter, ABI, and platform.

If you're not sure about the file name format, learn more about wheel file names.

Copy a direct link to the current filters

File details

Details for the file whisper_ctranslate2-0.5.7.tar.gz.

File metadata

- Download URL: whisper_ctranslate2-0.5.7.tar.gz

- Upload date:

- Size: 24.9 kB

- Tags: Source

- Uploaded using Trusted Publishing? No

- Uploaded via: twine/6.2.0 CPython/3.13.7

File hashes

| Algorithm | Hash digest | |

|---|---|---|

| SHA256 |

e6d5d813e033a573d049bd3c0e6d3802a124404bf8421b81c507f0b3bfd9cfa3

|

|

| MD5 |

9e7d5eff1b815f40acac90d8b059f3e8

|

|

| BLAKE2b-256 |

33bd8d32c984b1f16fa3de39c85113827751a16a30aff7bd84c37024028e10dd

|

File details

Details for the file whisper_ctranslate2-0.5.7-py3-none-any.whl.

File metadata

- Download URL: whisper_ctranslate2-0.5.7-py3-none-any.whl

- Upload date:

- Size: 46.1 kB

- Tags: Python 3

- Uploaded using Trusted Publishing? No

- Uploaded via: twine/6.2.0 CPython/3.13.7

File hashes

| Algorithm | Hash digest | |

|---|---|---|

| SHA256 |

e4b713bbe95f8025774206a1b6ec4bbaf50f6bd651a2b08a478a7c85b167e7c6

|

|

| MD5 |

6a377833bab8eac10bded3d1e248f7b5

|

|

| BLAKE2b-256 |

90da6336c0826a0ea9ba7f94fca34f45c85d56b64b3fe6707091385eb070f188

|