A toolset for compressing, deploying and serving LLMs


👋 Join us on Twitter, Discord and WeChat

Latest News 🎉

2024

- [2024/01] Support multi-model, multi-machine, multi-card inference services. For usage instructions, see the linked guide.

- [2024/01] Support the PyTorch inference engine, developed entirely in Python, which lowers the barrier for developers and enables rapid experimentation with new features and technologies.

2023

- [2023/12] TurboMind supports multimodal input. Gradio Demo

- [2023/11] TurboMind supports loading Hugging Face models directly. Click here for details.

- [2023/11] TurboMind major upgrades, including: Paged Attention, faster attention kernels without sequence length limitation, 2x faster KV8 kernels, Split-K decoding (Flash Decoding), and W4A16 inference for sm_75

- [2023/09] TurboMind supports Qwen-14B

- [2023/09] TurboMind supports InternLM-20B

- [2023/09] TurboMind supports all features of Code Llama: code completion, infilling, chat / instruct, and Python specialist. Click here for the deployment guide

- [2023/09] TurboMind supports Baichuan2-7B

- [2023/08] TurboMind supports flash-attention2.

- [2023/08] TurboMind supports Qwen-7B, dynamic NTK-RoPE scaling and dynamic logN scaling

- [2023/08] TurboMind supports Windows (tp=1)

- [2023/08] TurboMind supports 4-bit inference, 2.4x faster than FP16, the fastest open-source implementation. Check this guide for detailed info

- [2023/08] LMDeploy has launched on the HuggingFace Hub, providing ready-to-use 4-bit models.

- [2023/08] LMDeploy supports 4-bit quantization using the AWQ algorithm.

- [2023/07] TurboMind supports Llama-2 70B with GQA.

- [2023/07] TurboMind supports Llama-2 7B/13B.

- [2023/07] TurboMind supports tensor-parallel inference of InternLM.

Introduction

LMDeploy is a toolkit for compressing, deploying, and serving LLMs, developed by the MMRazor and MMDeploy teams. It has the following core features:

-

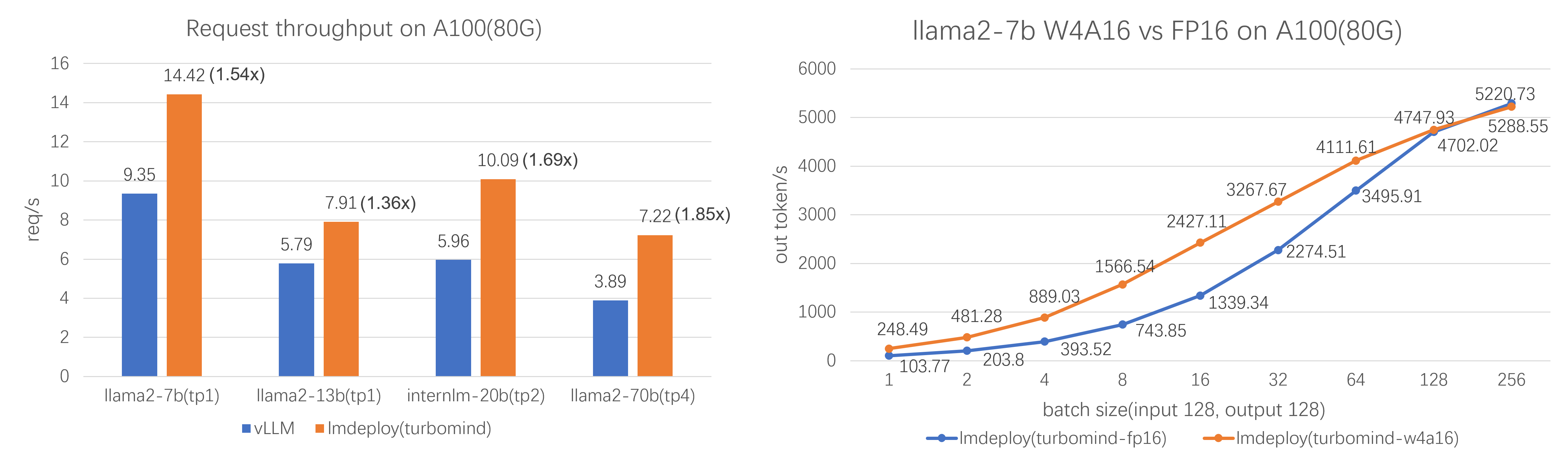

Efficient Inference: LMDeploy delivers up to 1.8x higher request throughput than vLLM, by introducing key features like persistent batch(a.k.a. continuous batching), blocked KV cache, dynamic split&fuse, tensor parallelism, high-performance CUDA kernels and so on.

-

Effective Quantization: LMDeploy supports weight-only and k/v quantization, and the 4-bit inference performance is 2.4x higher than FP16. The quantization quality has been confirmed via OpenCompass evaluation.

-

Effortless Distribution Server: Leveraging the request distribution service, LMDeploy facilitates an easy and efficient deployment of multi-model services across multiple machines and cards.

-

Interactive Inference Mode: By caching the k/v of attention during multi-round dialogue processes, the engine remembers dialogue history, thus avoiding repetitive processing of historical sessions.
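To make the idea of weight-only quantization in the feature list more concrete, here is a minimal sketch of group-wise min-max 4-bit quantization in plain Python. It is only an illustration of the general technique: it is not the AWQ algorithm and not LMDeploy's implementation, and the function names `quantize_group` and `dequantize_group` are hypothetical.

```python
from typing import List, Tuple


def quantize_group(weights: List[float], bits: int = 4) -> Tuple[List[int], float, float]:
    """Asymmetric min-max quantization of one weight group (illustrative only)."""
    levels = (1 << bits) - 1                      # 15 integer steps for 4-bit codes
    lo, hi = min(weights), max(weights)
    scale = (hi - lo) / levels if hi > lo else 1.0
    # Map each weight to the nearest integer code in [0, levels].
    codes = [min(levels, max(0, round((w - lo) / scale))) for w in weights]
    return codes, scale, lo


def dequantize_group(codes: List[int], scale: float, lo: float) -> List[float]:
    """Recover approximate floating-point weights from integer codes."""
    return [c * scale + lo for c in codes]


# A made-up group of weights, used purely as sample input.
weights = [0.12, -0.53, 0.98, 0.07, -0.31, 0.44, -0.90, 0.25]
codes, scale, zero = quantize_group(weights)
restored = dequantize_group(codes, scale, zero)
max_error = max(abs(w - r) for w, r in zip(weights, restored))

print("codes:", codes)
print("max reconstruction error:", max_error)
```

Each weight is stored as a 4-bit code plus a per-group scale and offset, so the reconstruction error per weight is bounded by half the scale. Production schemes choose the scales more carefully to protect the weights that matter most.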

Performance

For detailed inference benchmarks on more devices and in more settings, please refer to the following links:

- A100

- V100

- 4090

- 3090

- 2080

Supported Models

| Model | Size |
|---|---|
| Llama | 7B - 65B |
| Llama2 | 7B - 70B |
| InternLM | 7B - 20B |
| InternLM2 | 7B - 20B |
| InternLM-XComposer | 7B |
| Qwen | 7B - 72B |
| Qwen-VL | 7B |
| Baichuan | 7B - 13B |
| Baichuan2 | 7B - 13B |
| Code Llama | 7B - 34B |
| ChatGLM2 | 6B |
| Falcon | 7B - 180B |

LMDeploy has developed two inference engines, TurboMind and PyTorch, each with a different focus. The former strives for ultimate optimization of inference performance, while the latter, developed purely in Python, aims to lower the barrier for developers.

They differ in the types of supported models and the inference data type. Please refer to this table for each engine's capability and choose the proper one that best fits your actual needs.

Quick Start

Installation

Install lmdeploy with pip (Python 3.8+) or from source:

```shell
pip install lmdeploy
```
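Before or after running the install command, it can help to confirm that the interpreter meets the Python 3.8+ requirement and to see which version of the package, if any, is already installed. The following is a small sketch using only the standard library; the helper names `python_is_supported` and `installed_version` are hypothetical and not part of LMDeploy.

```python
import sys
from importlib import metadata
from typing import Optional, Tuple

MIN_PYTHON = (3, 8)  # minimum version stated in the installation note


def python_is_supported(version: Tuple[int, ...] = tuple(sys.version_info[:3])) -> bool:
    """Return True if the given (major, minor, ...) version is at least MIN_PYTHON."""
    return tuple(version[:2]) >= MIN_PYTHON


def installed_version(package: str) -> Optional[str]:
    """Return the installed version of a distribution, or None if it is absent."""
    try:
        return metadata.version(package)
    except metadata.PackageNotFoundError:
        return None


print("Python supported:", python_is_supported())
print("lmdeploy version:", installed_version("lmdeploy"))
```

If the second line prints `None`, the package is not installed in the current environment.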

Offline Batch Inference

```python
import lmdeploy

pipe = lmdeploy.pipeline("internlm/internlm-chat-7b")
response = pipe(["Hi, pls intro yourself", "Shanghai is"])
print(response)
```

For more information about the inference pipeline, please refer to here.

Tutorials

Please review the getting_started section for the basic usage of LMDeploy.

For detailed user guides and advanced guides, please refer to our tutorials:

- User Guide

- Advanced Guide

- Add chat template

- Add a new model

- GEMM tuning

- Long context inference

- Multi-model inference service

Contributing

We appreciate all contributions to LMDeploy. Please refer to CONTRIBUTING.md for the contribution guidelines.

Acknowledgement

License

This project is released under the Apache 2.0 license.


Download files


Built Distributions


File details

Details for the file lmdeploy-0.2.0-cp311-cp311-win_amd64.whl.

File metadata

- Download URL: lmdeploy-0.2.0-cp311-cp311-win_amd64.whl

- Upload date:

- Size: 64.0 MB

- Tags: CPython 3.11, Windows x86-64

- Uploaded using Trusted Publishing? No

- Uploaded via: twine/4.0.2 CPython/3.8.18
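The tags listed for each file are also encoded in the wheel filename, which follows the standard `{distribution}-{version}(-{build})?-{python tag}-{abi tag}-{platform tag}.whl` layout. As a rough sketch, the filename can be split into those fields as shown below; the `WheelName` type and `parse_wheel_filename` function are hypothetical helpers written for illustration, not part of LMDeploy or pip.

```python
from typing import NamedTuple, Optional


class WheelName(NamedTuple):
    distribution: str
    version: str
    build: Optional[str]
    python_tag: str
    abi_tag: str
    platform_tag: str


def parse_wheel_filename(filename: str) -> WheelName:
    """Split a wheel filename into its dash-separated components."""
    if not filename.endswith(".whl"):
        raise ValueError(f"not a wheel file: {filename!r}")
    parts = filename[: -len(".whl")].split("-")
    if len(parts) == 5:
        # No optional build tag present.
        dist, version, py, abi, plat = parts
        build = None
    elif len(parts) == 6:
        dist, version, build, py, abi, plat = parts
    else:
        raise ValueError(f"unexpected wheel filename layout: {filename!r}")
    return WheelName(dist, version, build, py, abi, plat)


info = parse_wheel_filename("lmdeploy-0.2.0-cp311-cp311-win_amd64.whl")
print(info)
```

Here `cp311` corresponds to the "CPython 3.11" tag and `win_amd64` to "Windows x86-64".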

File hashes

| Algorithm | Hash digest |
|---|---|
| SHA256 | 478cdb10709c0398607c25bd2782e6f9f62f2614e5b79b506edccb8938e5044e |
| MD5 | c5f0a79a7ac926b5256544eb53ddf8a6 |
| BLAKE2b-256 | 6a4ff3a7660e8570e868ed63aa6aa8ce3f6fe699b698b478f737e53367228f84 |

File details

Details for the file lmdeploy-0.2.0-cp311-cp311-manylinux2014_x86_64.whl.

File metadata

- Download URL: lmdeploy-0.2.0-cp311-cp311-manylinux2014_x86_64.whl

- Upload date:

- Size: 94.8 MB

- Tags: CPython 3.11

- Uploaded using Trusted Publishing? No

- Uploaded via: twine/4.0.2 CPython/3.8.18

File hashes

| Algorithm | Hash digest |
|---|---|
| SHA256 | a06cf7b53fcc7a7311b566f73f9b9dfe01eccf73ad1c5f74a6ac0a9e6ae3ffdd |
| MD5 | 2b2893e250687d8c32ef840180b69ae6 |
| BLAKE2b-256 | 8dd7b4d6fced26213d9d015d3482b94c2727e3108d6b8c995550137147b4b35e |

File details

Details for the file lmdeploy-0.2.0-cp310-cp310-win_amd64.whl.

File metadata

- Download URL: lmdeploy-0.2.0-cp310-cp310-win_amd64.whl

- Upload date:

- Size: 64.0 MB

- Tags: CPython 3.10, Windows x86-64

- Uploaded using Trusted Publishing? No

- Uploaded via: twine/4.0.2 CPython/3.8.18

File hashes

| Algorithm | Hash digest |
|---|---|
| SHA256 | 0c4a8647268ccf9db899f3d344f7fae5eedeef1fb9f4fb89fd4281186b29331e |
| MD5 | 7b61a07d147d71f3a933a212c98ff3a8 |
| BLAKE2b-256 | 5a1f83f8de54050288c0ff64ba38a5cd9b92c6b56c86b017b2acb16b87c9f05c |

File details

Details for the file lmdeploy-0.2.0-cp310-cp310-manylinux2014_x86_64.whl.

File metadata

- Download URL: lmdeploy-0.2.0-cp310-cp310-manylinux2014_x86_64.whl

- Upload date:

- Size: 94.8 MB

- Tags: CPython 3.10

- Uploaded using Trusted Publishing? No

- Uploaded via: twine/4.0.2 CPython/3.8.18

File hashes

| Algorithm | Hash digest |
|---|---|
| SHA256 | 919230eb2f7151e2f571ecdb62b6ff132b045e8da2845be76e808b527eb86dcd |
| MD5 | e94635381050ea8e5273dd2f8b3488ed |
| BLAKE2b-256 | ee1313c968af3f6230e625fcb55f4d10ed98ed8eb310b10e38b360569286847e |

File details

Details for the file lmdeploy-0.2.0-cp39-cp39-win_amd64.whl.

File metadata

- Download URL: lmdeploy-0.2.0-cp39-cp39-win_amd64.whl

- Upload date:

- Size: 63.9 MB

- Tags: CPython 3.9, Windows x86-64

- Uploaded using Trusted Publishing? No

- Uploaded via: twine/4.0.2 CPython/3.8.18

File hashes

| Algorithm | Hash digest |
|---|---|
| SHA256 | 839e0248724fc253dd70609093b762f6f23c78db7f12b966ebb15e92e863afc6 |
| MD5 | 9c2e8d1b9a176462871a30635f7f669b |
| BLAKE2b-256 | 7f23b279438ac9f346431ffc45cabea57e40a55f2f1f976e673244bc51abf13e |

File details

Details for the file lmdeploy-0.2.0-cp39-cp39-manylinux2014_x86_64.whl.

File metadata

- Download URL: lmdeploy-0.2.0-cp39-cp39-manylinux2014_x86_64.whl

- Upload date:

- Size: 94.8 MB

- Tags: CPython 3.9

- Uploaded using Trusted Publishing? No

- Uploaded via: twine/4.0.2 CPython/3.8.18

File hashes

| Algorithm | Hash digest |
|---|---|
| SHA256 | 6b4c6c86dce79d0973ffab03e9257e2d7598acb137d734afface9c95f14dd8cf |
| MD5 | 5ba019b3ee4252e0a5d7ed22094a46d8 |
| BLAKE2b-256 | aae5b58242a9c2030fd0fb5a11ddb05bf079bf06b2cb29d8560e90cc1ad599b2 |

File details

Details for the file lmdeploy-0.2.0-cp38-cp38-win_amd64.whl.

File metadata

- Download URL: lmdeploy-0.2.0-cp38-cp38-win_amd64.whl

- Upload date:

- Size: 64.0 MB

- Tags: CPython 3.8, Windows x86-64

- Uploaded using Trusted Publishing? No

- Uploaded via: twine/4.0.2 CPython/3.8.18

File hashes

| Algorithm | Hash digest |
|---|---|
| SHA256 | 679265ead78eeeba976e3965aaac93e3237a64d64639125d3762af2dd3ab9802 |
| MD5 | 6070f4f73c581d43b0a71cd1bbf798f5 |
| BLAKE2b-256 | 4ecef930b2cef148447cc57e53aaea2eaee05e2c333085a505c01b69c0c8a113 |

File details

Details for the file lmdeploy-0.2.0-cp38-cp38-manylinux2014_x86_64.whl.

File metadata

- Download URL: lmdeploy-0.2.0-cp38-cp38-manylinux2014_x86_64.whl

- Upload date:

- Size: 94.8 MB

- Tags: CPython 3.8

- Uploaded using Trusted Publishing? No

- Uploaded via: twine/4.0.2 CPython/3.8.18

File hashes

| Algorithm | Hash digest |
|---|---|
| SHA256 | 0dbd090f4a3f2daa6a9f7669ad93c6a98d5c8c5b09608487a612cdd251ba336b |
| MD5 | 72f407fae59e3e7648e5294e3cff89bc |
| BLAKE2b-256 | 32f15e557d6b2b020b357b847670b43fbd3a579de776a3af755c33ea05d7849f |
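The digests listed under "File hashes" can be used to check that a downloaded wheel is intact. Below is a minimal sketch using Python's `hashlib`; the helper names `file_digest` and `matches` are hypothetical, and the `"blake2b-256"` label is an assumption of this example that maps to BLAKE2b with a 32-byte digest.

```python
import hashlib


def file_digest(path: str, algorithm: str = "sha256", chunk_size: int = 1 << 20) -> str:
    """Compute the hex digest of a file, reading it in chunks to limit memory use."""
    if algorithm == "blake2b-256":
        # BLAKE2b truncated to a 256-bit (32-byte) digest.
        hasher = hashlib.blake2b(digest_size=32)
    else:
        hasher = hashlib.new(algorithm)
    with open(path, "rb") as fh:
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            hasher.update(chunk)
    return hasher.hexdigest()


def matches(path: str, expected_hex: str, algorithm: str = "sha256") -> bool:
    """Return True if the file's digest equals the expected hex string."""
    return file_digest(path, algorithm) == expected_hex.strip().lower()
```

For example, `matches("lmdeploy-0.2.0-cp311-cp311-win_amd64.whl", "<SHA256 from the table>")` should return `True` for an uncorrupted download of that file. SHA256 or BLAKE2b-256 is preferable to MD5 for this check.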